In Lebanon, conspiracy theories are so common that the whole world, except yourself, is to blame for your ailments.

I usually dismiss them, but the one in this post got on my nerves. Moreover, a fairly simple experiment could finally shatter it and remove it as an option from all conversations.

The conspiracy goes as follows:

When I visit Lebanon my data is consumed way faster on a lebanese carrier. They deny it and say that I am delusional, I guess they enjoy being screwed with.

A MB isn’t a MB in Lebanon

It pisses me off that it seems like data runs out much quicker in Lebanon, I am confident that they do not calculate this properly

The data consumption shown by operators in Lebanon isn’t the real data you are consuming, they’re playing with numbers

They screw people with crazy data consumption calculation! I think it has something to do with how they handle uploads.

Let’s first assess the plausibility of the scenario.

We know that Touch and Alfa, the operators in Lebanon, benefit from a duopoly. From this we also know that they have full leverage over data prices. With such control, it is unlikely that they would try to cheat on the usage calculation: why go through a recent drop in data prices if the goal were to overcharge? Additionally, there’s the risk that people would notice the discrepancy between the data consumed and what carriers show on their websites. After all, we all have a feature in our phones that tracks data usage.

Then why do people still believe in this conspiracy? I’m not sure, but let’s gather actual numbers that speak for themselves. I’ll limit myself to Alfa as I don’t have a subscription with Touch.

The questions we want to answer:

- Are the numbers shown on the Alfa website the same as the actual data being consumed?

- Are upload and download considered the same in the mobile data consumption calculation?

What we’ll need:

- A machine that we have full control over with no data pollution

- Scripts to download and upload specific amounts of data

- A tool to monitor how much is actually used on the network as both download and upload

- A machine that is on a different network to check the consumption on the Alfa website

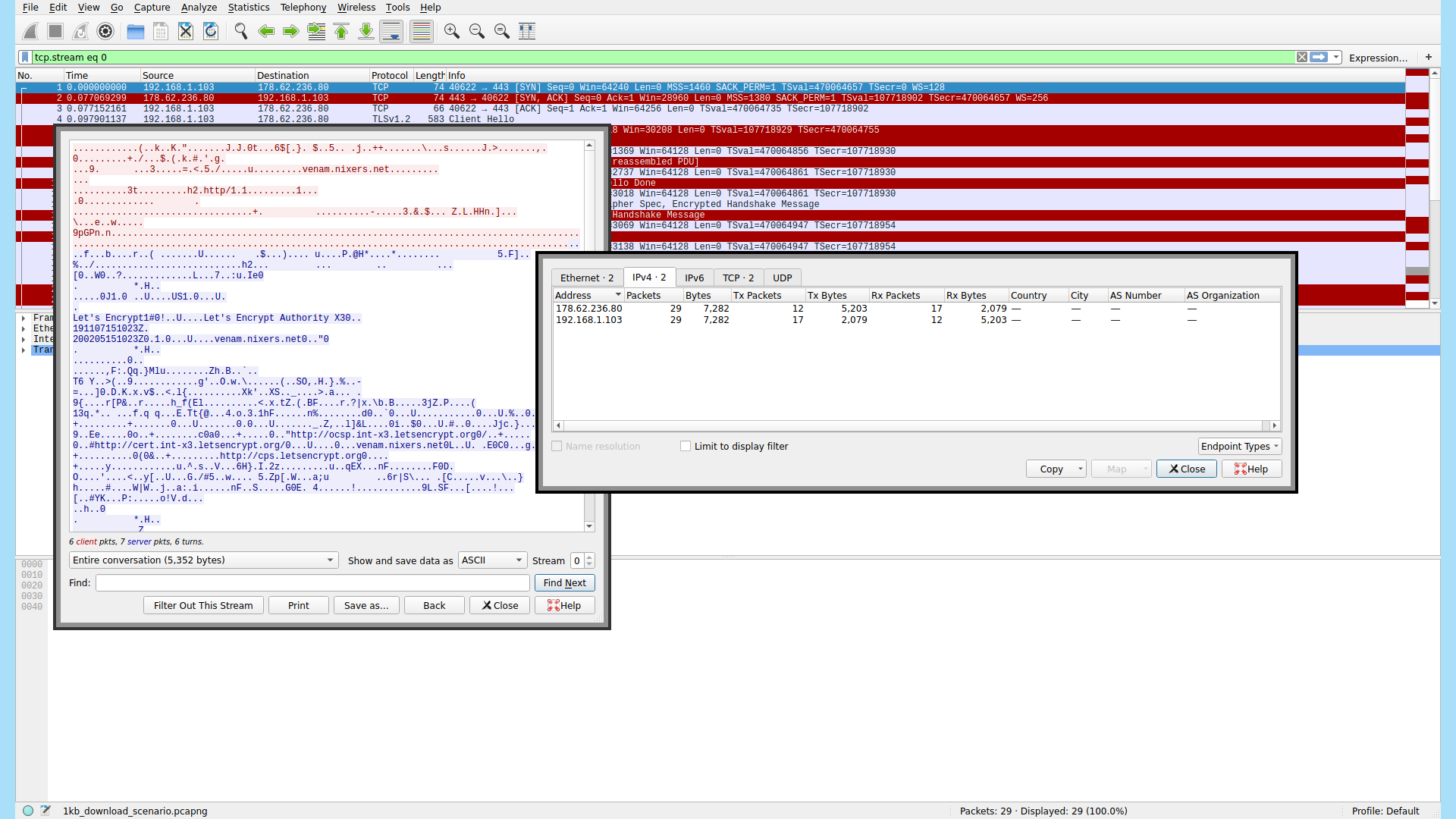

Let’s peek into the values we’re expecting by testing the monitoring tool. For this, Wireshark running on a Linux machine that has nothing else running will do the job.

We need a way to download and upload specific amounts of data. I’ve prepared three files of different sizes for this: 1KB, 10KB, and 100KB. As a reminder, 1KB is 1024 bytes, and thus a 10KB file is composed of 10240 bytes.
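As a minimal sketch of how such files could be prepared: the original files aren’t shown, so the random filler and the helper name `make_test_files` are assumptions, and the file names are simply borrowed from the curl commands used in this post.

```python
import os
import tempfile

KB = 1024  # 1KB is 1024 bytes, the convention used in this post


def make_test_files(directory, sizes_kb=(1, 10, 100)):
    """Write one file per size, filled with random bytes, and return the paths."""
    paths = []
    for size_kb in sizes_kb:
        # Hypothetical naming scheme: "1kb", "10kb", "100kb".
        path = os.path.join(directory, f"{size_kb}kb")
        with open(path, "wb") as handle:
            # Random bytes are an assumption; any filler of the right length works.
            handle.write(os.urandom(size_kb * KB))
        paths.append(path)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for path in make_test_files(tmp):
            print(os.path.basename(path), os.path.getsize(path), "bytes")
```

Each file ends up with exactly `size_kb * 1024` bytes, which is what makes the later byte counts comparable.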

I’ve then added those files to my server for download and set up a PHP script for the upload test.

<?php
// Report the size of the uploaded file back to the client.
$size = $_FILES["fileToUpload"]["size"];
echo("up $size bytes");

What does a download request look like:

> curl 'https://venam.net/alfa_consumption/1kb'

On wireshark we see:

TX: 2103, RX 5200 -> TOTAL 7300B

TX stands for transmit and RX for receive. Overall, that’s roughly 7KB both ways: 2KB upload and 5KB download.

That’s 7KB for a download of only 1KB, is something wrong? Nope, nothing’s wrong: you have to account for the client/server TCP handshake, the HTTP headers, and the exchange of certificates for TLS. This overhead (δ) becomes negligible as the request grows, and the longer we keep a connection open, the less we spend on connection setup.

The average numbers expected for different sizes:

> curl 'http://venam.net/alfa_consumption/10kb'

TX: 2278B, RX: 14KB -> TOTAL 16.3KB

> curl 'http://venam.net/alfa_consumption/100kb'

TX: 6286B, RX: 113KB -> TOTAL 119.2KB

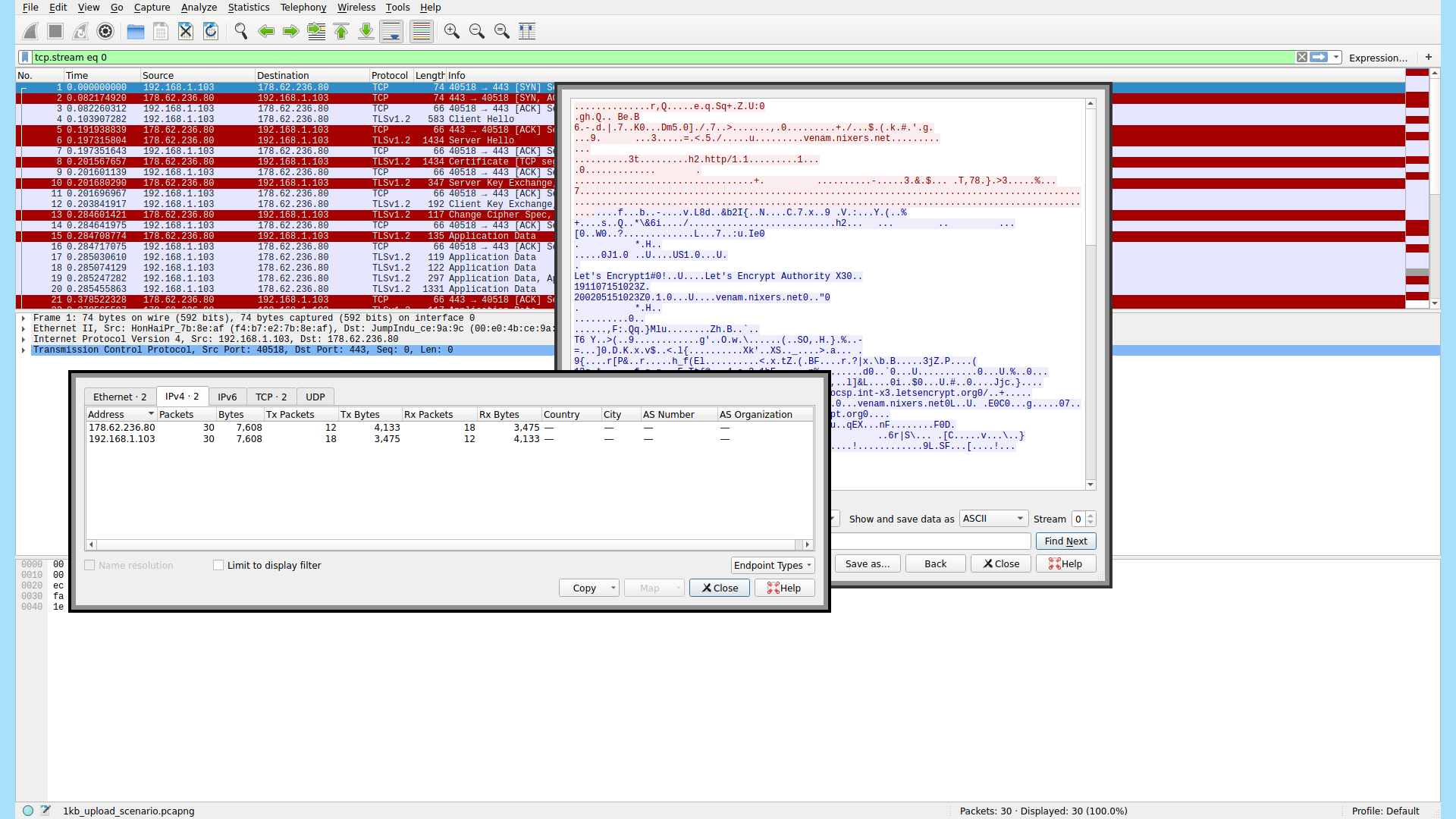
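To make the shrinking overhead concrete, here is a small sketch that divides the total bytes seen on the wire by the payload size, using only the download figures quoted above. The helper name `overhead_ratio` is made up for illustration, and the KB totals are converted back to bytes assuming 1KB = 1024 bytes.

```python
KB = 1024

# Download measurements quoted above: payload size -> total bytes on the wire.
measurements = {
    1 * KB: 2103 + 5200,        # 1KB file: TX + RX, in bytes
    10 * KB: int(16.3 * KB),    # 10KB file: 16.3KB total
    100 * KB: int(119.2 * KB),  # 100KB file: 119.2KB total
}


def overhead_ratio(payload_bytes, total_bytes):
    """Return how many bytes cross the network per byte of payload."""
    return total_bytes / payload_bytes


for payload, total in measurements.items():
    ratio = overhead_ratio(payload, total)
    print(f"{payload // KB:>3}KB payload: {total} bytes on the wire, x{ratio:.2f}")
```

The ratio drops from about 7 for the 1KB file to about 1.2 for the 100KB file, which is the fixed connection cost being spread over a larger payload.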

And regarding upload we have:

> curl -F "fileToUpload=@1kb" 'https://venam.net/alfa_consumption/upload.php'

TX: 3475, RX 4133 -> 7608B

> curl -F "fileToUpload=@10kb" 'https://venam.net/alfa_consumption/upload.php'

TX: 13KB, RX 4427 -> 17.4KB

> curl -F "fileToUpload=@100kb" 'https://venam.net/alfa_consumption/upload.php'

TX: 110KB, RX 6672 -> 116.5KB

Overall, it’s not a good idea to keep reconnecting when doing small requests. People should be aware that downloading something of 1KB doesn’t mean they’ll actually use 1KB on the network, regardless of the carrier.

In the past few years there have been campaigns to encrypt the web and make it secure: things like Let’s Encrypt’s free TLS certificates, and popular browsers flagging insecure websites. According to the Google Transparency Report, 90% of the web now runs securely encrypted, compared to only 50% in 2014. This encryption comes at a price: the small overhead when initiating the connection to a website.

Now that we’ve got our expectations straight, the next step is to inspect how we’re going to read our consumption on Alfa’s website.

After logging in, on the dashboard page we can see a request akin to:

https://www.alfa.com.lb/en/account/getconsumption?_=1573381232217

Disregard the Unix timestamp in milliseconds on the right, it’s actually useless.

The response to this request:

{
  "CurrentBalanceValue": "$ XX.XX",
  "ExtensionData": {},
  "MobileNumberValue": "71234567",
  "OnNetCommitmentAccountValue": null,
  "ResponseCode": 8090,
  "SecurityWatch": null,
  "ServiceInformationValue": [
    {
      "ExtensionData": {},
      "ServiceDetailsInformationValue": [
        {
          "ConsumptionUnitValue": "MB",
          "ConsumptionValue": "1880.17",
          "DescriptionValue": "Mobile Internet",
          "ExtensionData": {},
          "ExtraConsumptionAmountUSDValue": "0",
          "ExtraConsumptionUnitValue": "MB",
          "ExtraConsumptionValue": "0",
          "PackageUnitValue": "GB",
          "PackageValue": "20",
          "ValidityDateValue": "",
          "ValidityValue": ""
        }
      ],
      "ServiceNameValue": "Shared Data Bundle"
    }
  ],
  "SubTypeValue": "Normal",
  "TypeValue": "Prepaid",
  "WafferValue": {
    "ExtensionData": {},
    "PendingAccountsValue": []
  }
}

Notice the ConsumptionValue, which is shown in MB; that’s as precise as we can get. I’ll assume each hundredth of a MB (0.01MB) is 10.24KB.
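For reference, pulling that field out of the response could look like the sketch below. It works on a trimmed copy of the JSON above rather than a live request, and the function name `mobile_internet_consumption_mb` is a hypothetical helper, not part of any Alfa API.

```python
import json

# Trimmed copy of the response shown above, keeping only the fields we read.
SAMPLE_RESPONSE = """
{
  "ServiceInformationValue": [
    {
      "ServiceDetailsInformationValue": [
        {
          "ConsumptionUnitValue": "MB",
          "ConsumptionValue": "1880.17",
          "DescriptionValue": "Mobile Internet"
        }
      ],
      "ServiceNameValue": "Shared Data Bundle"
    }
  ]
}
"""


def mobile_internet_consumption_mb(raw_json):
    """Return the "Mobile Internet" ConsumptionValue as a float, in MB."""
    data = json.loads(raw_json)
    for service in data["ServiceInformationValue"]:
        for detail in service["ServiceDetailsInformationValue"]:
            if detail["DescriptionValue"] == "Mobile Internet":
                unit = detail["ConsumptionUnitValue"]
                if unit != "MB":
                    raise ValueError("unexpected unit: " + unit)
                return float(detail["ConsumptionValue"])
    raise KeyError("no Mobile Internet entry in response")


consumed_mb = mobile_internet_consumption_mb(SAMPLE_RESPONSE)
# With two decimals in MB, the smallest visible step is 0.01MB = 10.24KB.
print(consumed_mb, "MB, smallest visible step:", 0.01 * 1024, "KB")
```

The value arrives as a string, so it has to be converted before doing any arithmetic on it.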

Setting this aside we’re missing the last element of our experiment: isolating the sim card and using it only on the machine that has the monitoring tool, gaining full control of what happens on the network.

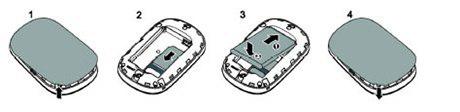

I’ve opted to use a Huawei mobile wifi E5220s model, a pocket-sized MiFi, initially used for Sodetel. This setup would let me use the device as a hotspot for 3G+ connection from the monitoring machine.

The device takes a 2FF sim card, so have a holder of this size at hand.


Unfortunately, the device came locked to the vendor, Sodetel, and I had to do some reverse engineering to figure out how to configure the APN settings for the Alfa card.

Long story short, parts of the documentation of the E5220s are available online, and they hinted at what to do.

To add the new Alfa APN:

curl 'http://192.168.1.1/api/dialup/profiles' \
  -H 'User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:69.0) Gecko/20100101 Firefox/69.0' \
  -H 'Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8' \
  -H 'Authorization: Basic YWRtaW46YWRtaW4=' \
  -H 'Connection: keep-alive' \
  -d '<request>
<Delete>0</Delete>
<SetDefault>1</SetDefault>
<Modify>1</Modify>
<Profile>
<Index></Index>
<IsValid>1</IsValid>
<Name>alfa</Name>
<ApnIsStatic>1</ApnIsStatic>
<ApnName>alfa</ApnName>
<DialupNum></DialupNum>
<Username></Username>
<Password></Password>
<AuthMode>0</AuthMode>
<IpIsStatic>0</IpIsStatic>
<IpAddress/>
<Ipv6Address/>
<DnsIsStatic>0</DnsIsStatic>
<PrimaryDns/>
<SecondaryDns/>
<PrimaryIpv6Dns/>
<SecondaryIpv6Dns/>
<ReadOnly>0</ReadOnly>
</Profile>
</request>'

Remember to log in through the web interface first: even though we’re sending basic authorization, the device won’t accept the request if you’re not logged in.

A successful request returns:

<?xml version="1.0" encoding="UTF-8"?><response>OK</response>

We then have to set the Alfa profile as default and disable PIN1, as it is not required on Alfa SIM cards.

Disable PIN:

curl 'http://192.168.1.1/api/pin/operate' \
  -H 'User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:69.0) Gecko/20100101 Firefox/69.0' \
  -H 'Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8' \
  -H 'Authorization: Basic YWRtaW46YWRtaW4=' \
  -H 'Connection: keep-alive' \
  -d '<request>
<OperateType>2</OperateType>
<CurrentPin>0000</CurrentPin>
</request>'

We’re all set, we can gather the data!

DOWNLOAD DATA:

24 requests of 1KB

Initial value:1835.06MB

Sent: 176KB total, RX 126KB, TX=50K

Expected value:

If TX/RX considered: 1835.23MB

If RX only considered: 1835.18MB

If TX only considered: 1835.11MB
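The three expected values for this first test can be reproduced with a short sketch. It assumes 1MB = 1024KB, in line with the 1KB = 1024 bytes convention used earlier, and the function name `expected_readings` is made up for illustration.

```python
KB_PER_MB = 1024  # assumption: same binary convention as 1KB = 1024 bytes


def expected_readings(initial_mb, rx_kb, tx_kb):
    """Return the expected website value under each accounting hypothesis."""
    return {
        "tx_and_rx": round(initial_mb + (rx_kb + tx_kb) / KB_PER_MB, 2),
        "rx_only": round(initial_mb + rx_kb / KB_PER_MB, 2),
        "tx_only": round(initial_mb + tx_kb / KB_PER_MB, 2),
    }


# First download test: 24 requests of 1KB, RX 126KB, TX 50KB.
print(expected_readings(1835.06, rx_kb=126, tx_kb=50))
```

Each hypothesis only changes which of the two counters is added to the initial reading.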

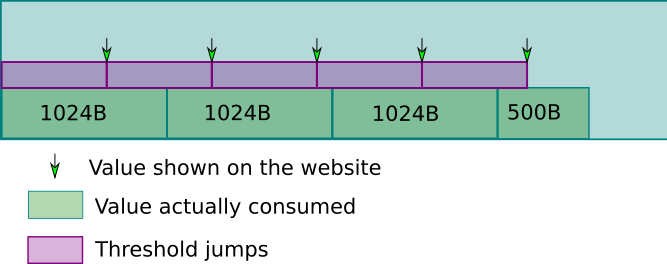

Consumption value on website: 1835.13

The reason I executed 24 requests instead of a single one is that the consumption on the website only updates when it reaches a threshold or a specific interval.

As you can see, the value on the website is lower than the combination of download and upload together, and that’s exactly because it updates by threshold. This means that the value shown will always be less than the one consumed, unless you consume exactly a multiple of the threshold chunk.
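A toy model of this behaviour, covering only the threshold case and not the interval one: the counter advances in whole chunks, so it lags behind real usage. The 100KB chunk below is purely illustrative, since the real update step used by Alfa is not known, and `displayed_kb` is a hypothetical helper.

```python
def displayed_kb(consumed_kb, threshold_kb):
    """Return the value a counter would show if it only advances in whole chunks."""
    if threshold_kb <= 0:
        raise ValueError("threshold_kb must be positive")
    # Integer division drops the partially consumed chunk.
    return (consumed_kb // threshold_kb) * threshold_kb


# HYPOTHETICAL_THRESHOLD_KB is an illustrative guess, not Alfa's real update step.
HYPOTHETICAL_THRESHOLD_KB = 100

for consumed in (50, 100, 176, 200):
    shown = displayed_kb(consumed, HYPOTHETICAL_THRESHOLD_KB)
    print(f"consumed {consumed}KB -> displayed {shown}KB")
```

Under this model the displayed value never exceeds the consumed one, and the two match only when consumption lands exactly on a chunk boundary.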

For a visual explanation of what’s happening:

Here are more tests:

18 requests of 100KB

Initial value:1835.93MB

Sent: 2110KB total, RX 2013KB, TX=97KB

Expected value:

If TX/RX considered: 1837.96MB

If RX only considered: 1837.86MB

If TX only considered: 1836.02MB

Consumption value on website: 1837.89

18 requests of 100KB

Initial value:1837.89MB

Sent: 2117KB total, RX 2015KB, TX=101KB

Expected value:

If TX/RX considered: 1839.92MB

If RX only considered: 1839.82MB

If TX only considered: 1836.99MB

Consumption value on website: 1839.84

UPLOAD DATA:

18 requests of 100KB

Initial value:1839.84MB

Sent: 2101KB total, RX 120KB, TX=1981KB

Expected value:

If TX/RX considered: 1841.86MB

If RX only considered: 1839.96MB

If TX only considered: 1841.48MB

Consumption value on website: 1841.79

18 requests of 100KB

Initial value:1841.79MB

Sent: 2101KB total, RX 120KB, TX=1981KB

Expected value:

If TX/RX considered: 1843.81MB

If RX only considered: 1841.91MB

If TX only considered: 1843.69MB

Consumption value on website: 1843.75

So, Alfa actually shows a bit less than what we are using, which is the opposite of the conspiracy. What is happening? Do we have an explanation?

The truth is that we give mobile carriers more credit than they deserve. They aren’t omnipotent owners of the network who know it inside out and built it from scratch. The all-powerful being is a recurrent theme in conspiracy theories because it makes people seem powerless in the face of it. On the contrary, most mobile operators are not made up of technical people; they are, as the term implies, operating the instruments they buy, caring only that they do the job. Business is business.

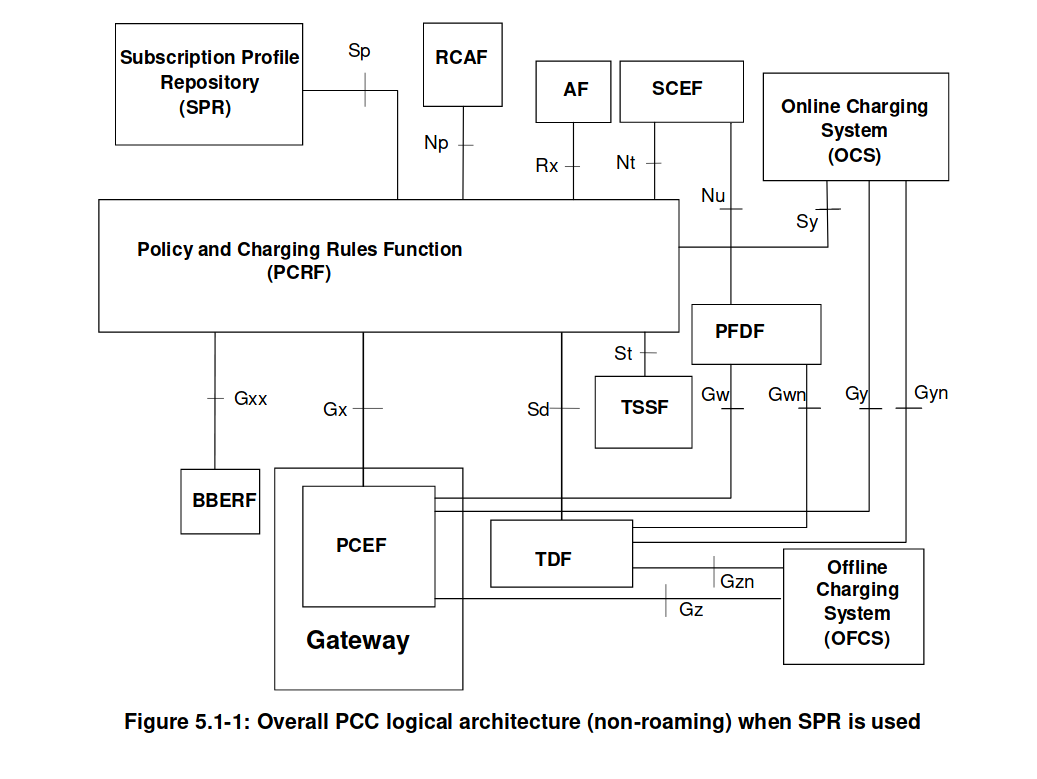

In the core network, some of these pieces of equipment are called the TDF (Traffic Detection Function), the PCEF (Policy and Charging Enforcement Function), and the PCRF (Policy and Charging Rules Function), which work along with an implementation of a CPS system (Cost Per Sale system), also known as a charging system.

The operator buys such hardware without knowing much else about what it does, be it an Ericsson charging system, an Oracle CPS system, another Oracle CPS, a Cisco CPS, or virtual ones (5G anyone) like Allot.

Figure 5.1-1: Overall PCC logical architecture (non-roaming) when SPR is used, taken from ETSI TS 123 203 V15.5.0 (2019-10)


An example scenario where Usage Monitoring Control is useful is when the operator wants to allow a subscriber a certain high (e.g. unrestricted) bandwidth for a certain maximum volume (say 2 gigabytes) per month. If the subscriber uses more than that during the month, the bandwidth is limited to a smaller value (say 0.5 Mbit/s) for the remainder of the month. Another example is when the operator wants to set a usage cap on traffic for certain services, e.g. to allow a certain maximum volume per month for a TV or movie-on-demand service.

That paragraph is taken from this article or here.

So what are the reasons for all the conspiracy theories:

- Mysticism and lack of education on the topic

- Having an agenda (Confirmation bias)

- Being delusional

This doesn’t change the fact that people genuinely feel like they’re using data quicker than what they are “really” using. The truth is that in recent years we’ve moved to a world where we’re always connected to the web, and the web has morphed to be media heavy. In the tech world this is not news anymore; we call it the web-obesity crisis: websites are bloated with content and entangled in a mess of dependencies.

As I’m posting this, Chrome is starting an endeavour to move the web to a faster and lighter one; see this article for more info, along with web.dev/fast.

This topic has been talked about a lot in recent years:

- The Average Webpage Is Now the Size of the Original Doom

- The average web page is 3MB. How much should we care?

- web-obesity

- more privacy and fast blogs

- another blog about fast websites

I’ve also been doing my part, making my blog lighter.

In sum, this is all about web-literacy.

Thanks for reading.

Attributions:

- Cesare d’Arpino [CC0]

- Pearson Scott Foresman [Public domain]

For more info on the core network there’s some good discussion in those links:

- http://www.tec.gov.in/pdf/Studypaper/PCRF%20Study%20Paper%20MAR%202014.pdf

- http://vipinksingh.blogspot.com/2016/02/sd-interface-overview-sd-reference.html

- https://stackoverflow.com/questions/54059134/what-is-difference-between-tdf-and-pcef.
