Compilers, these wonderful and intricate pieces

of software that do so much and that so many know

little of. Similar to the previous article about computer

architecture,

I’ll take a look at another essential, but lesser known, CS topic:

Compilers.

I won’t actually dive into much details but I’ll keep it short to my notes,

definitions, and what I actually found intriguing and helpful.

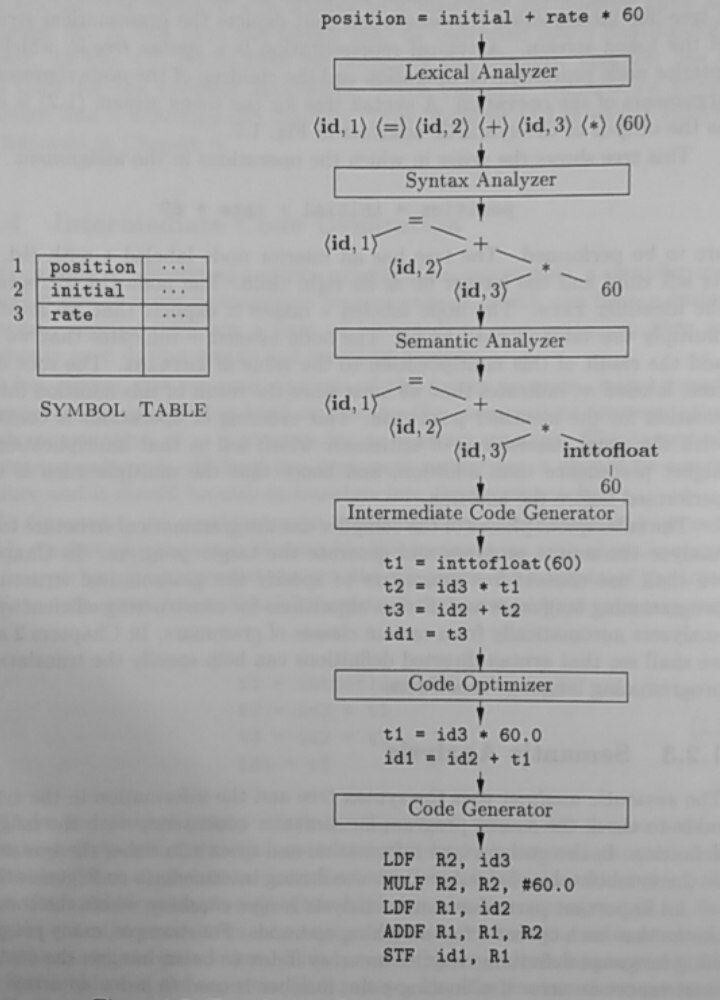

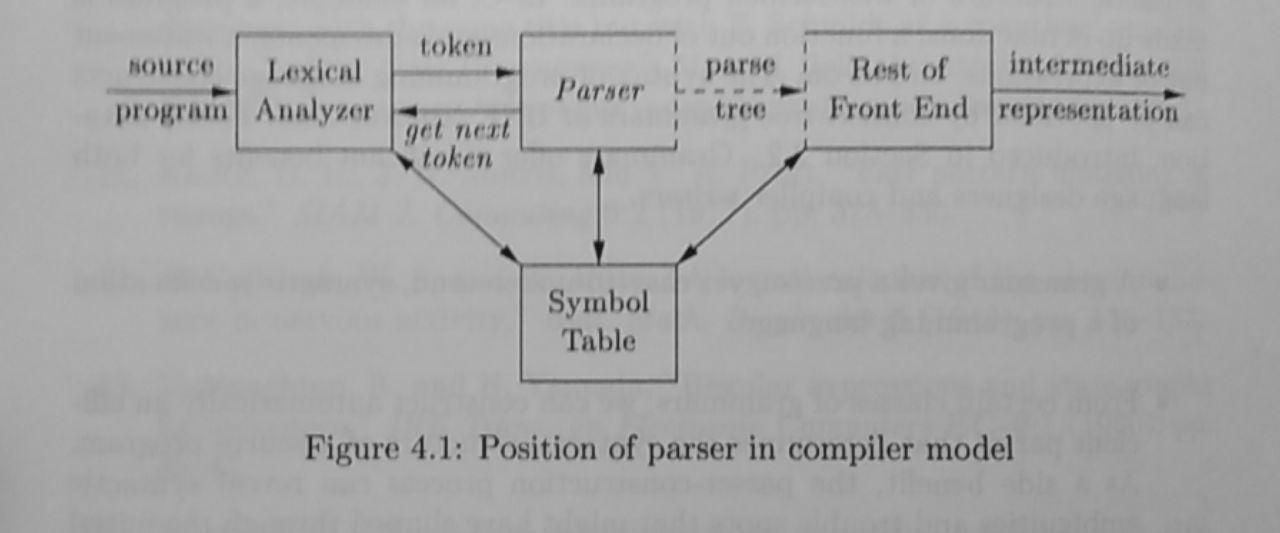

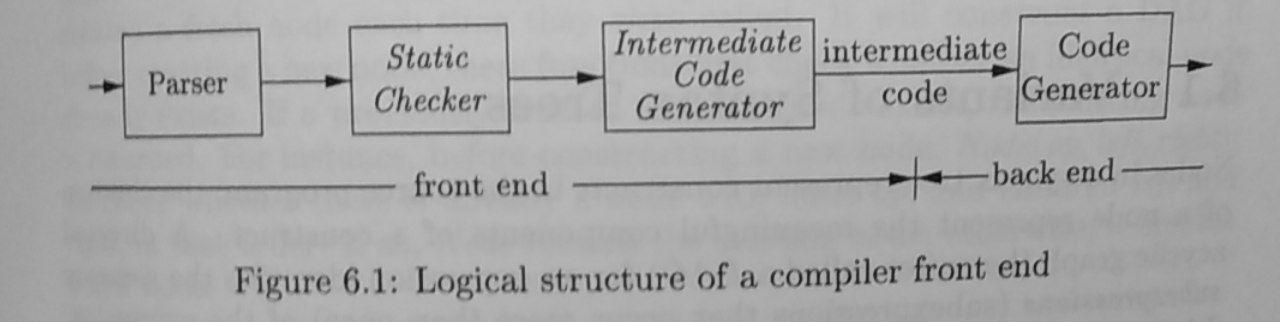

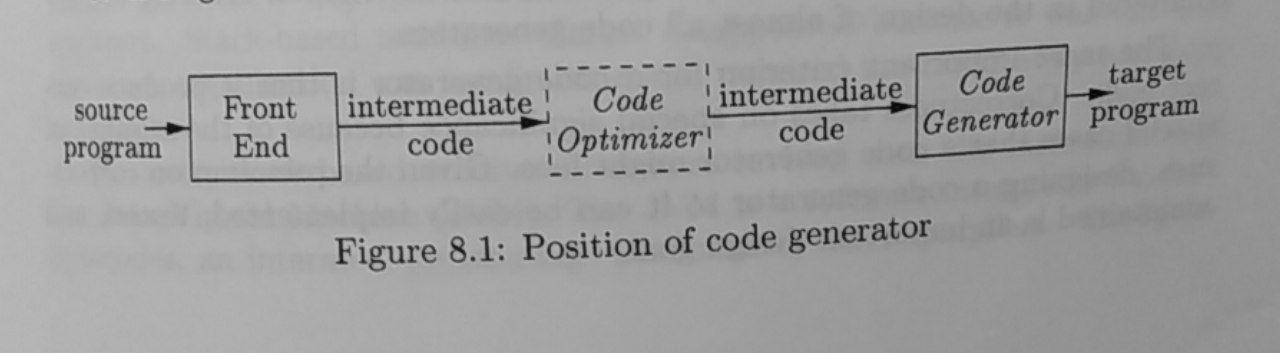

A compiler is divided into a frontend and a backend. The frontend role

is to parse the textual program, or whatever format the programmer uses

to input the code, verify it, and turn it into a representation that’s

easier to work with — an IR or Intermediary Representation.

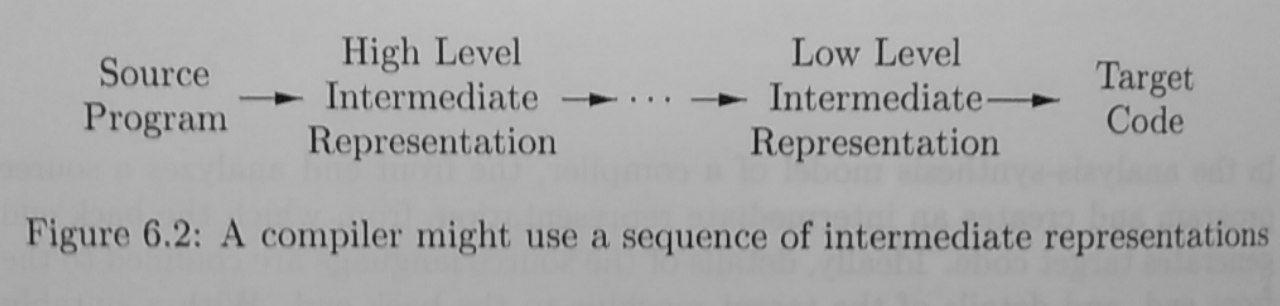

Anything after getting this intermediate representation, which is usually

either a tree or a three-address code, is the backend which role is to

optimize the code and generate an output. This output could be anything

ranging from another programming language, what’s called a transpiler,

to compiling into specific machine code instructions.

These days many programming languages rely on helpful tools to make

these steps easier. For example, most of them use Yacc and Lex to

build the front-end, and then use LLVM

to automatically have a backend. LLVM IR is a backend that could in

theory plug to any compiler frontend, thus any compiler relying on

it will necessarily benefit

from optimizations done in the LLVM IR.

Personally, I’ve found that the most interesting parts were in the

backend. While the frontend consist of gruesome parsing, things become

fascinating when you realizing everything can be turned into three-address

code, instructions that consist of maximum 3 operands and that have only

one operand on the left side for assignment and one operator on the right.

From this point on, you can apply every kind of optimizations possible,

like if loops over arrays can have their address represented by linear

functions, or if dependence between data allows to reposition the code,

of if following the lifetime of values help. In the backend you can

manage what the process will look like in memory, and you can also

implement garbage collection.

Overall, learning a bit about compilers doesn’t hurt. It gives insights

into the workings of the languages we use everyday, removing the magic

around them but keeping the awe and amazement.

So here are my rough notes and definitions I took while learning about

compilers, I hope these help someone going on the same path as there’s

a lot of jargon involved.

Definitions

-

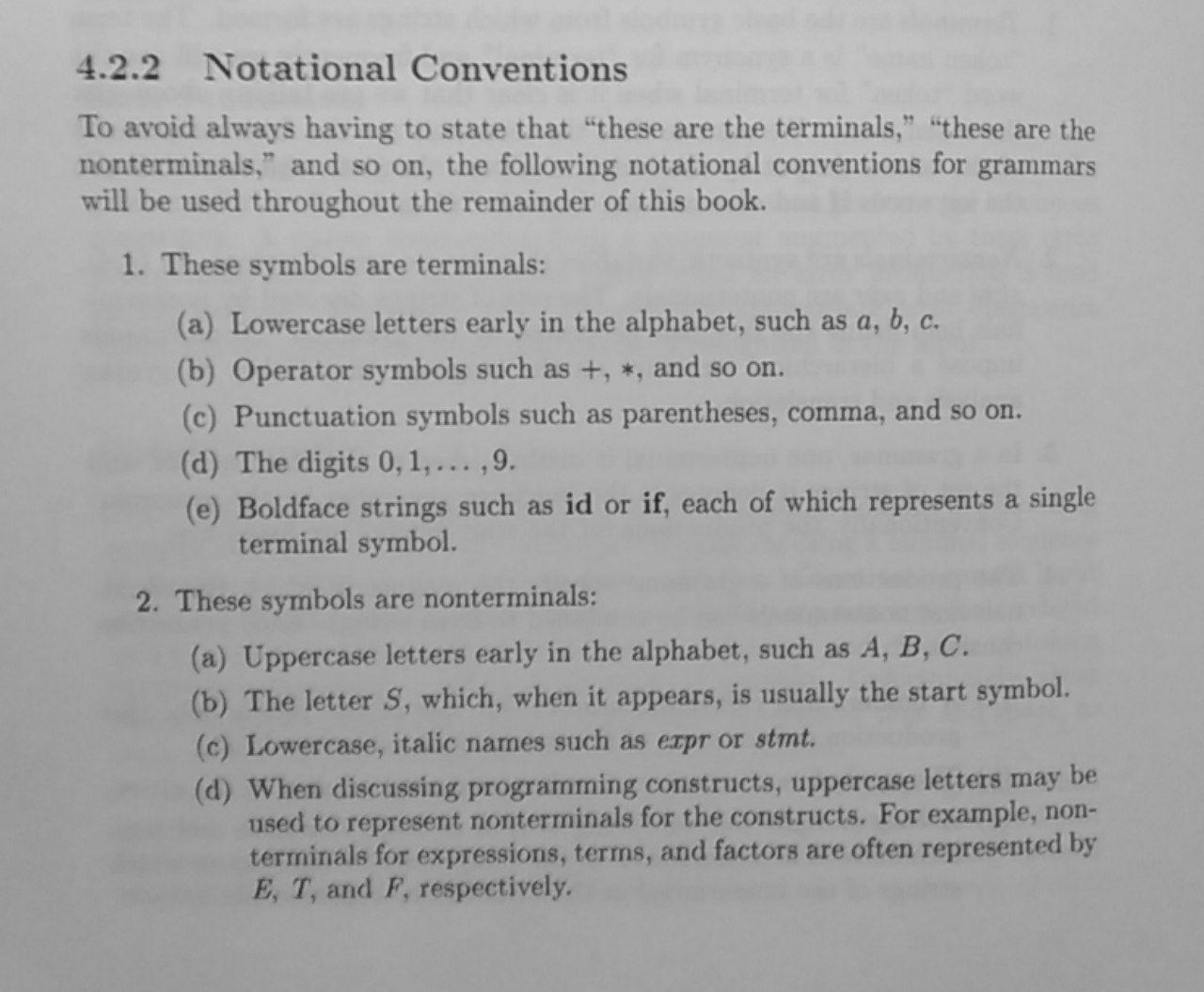

Terminals: Basic symbols from which strings are formed, also called token name.

-

Nonterminals: Syntactic variables that denote sets of strings. It helps define the language generated by the gammar, imposing a hierarchical structure on the language that is key to syntax analysis and translation.

-

Production: What nonterminals produce, the manner in which the terminals and nonterminals can be combind to form strings. They have a left/head side and a body/right side, separated by

->or sometimes::== -

Grammar: The combination of terminal symbols, nonterminal symbols, productions (nonterminals output)

-

Context free grammar: It has 4 components: terminal symbols/tokens, nonterminal symbols/syntactic variables (a string of terminals), productions (nonterminals called the head/left side + arrow + sequence of terminals and/or nonterminals the body or right side), and the designation of nonterminals as start symbol.

-

The language: The strings that we can derive from the grammar.

-

Parse Tree: Finding a tree that can be used to derive/yield a string in the language.

-

Parsing: The process of finding a parse tree for a given string of terminals.

-

Ambiguous grammar: A grammar that can have more than one parse tree that can generate a given string.

-

Associativity: The side to which the operator belongs to if the operator is within two tokens. Could be left-side associativity or right-side associativity. This is a way to assign and resolve the priority/precedence of operators.

-

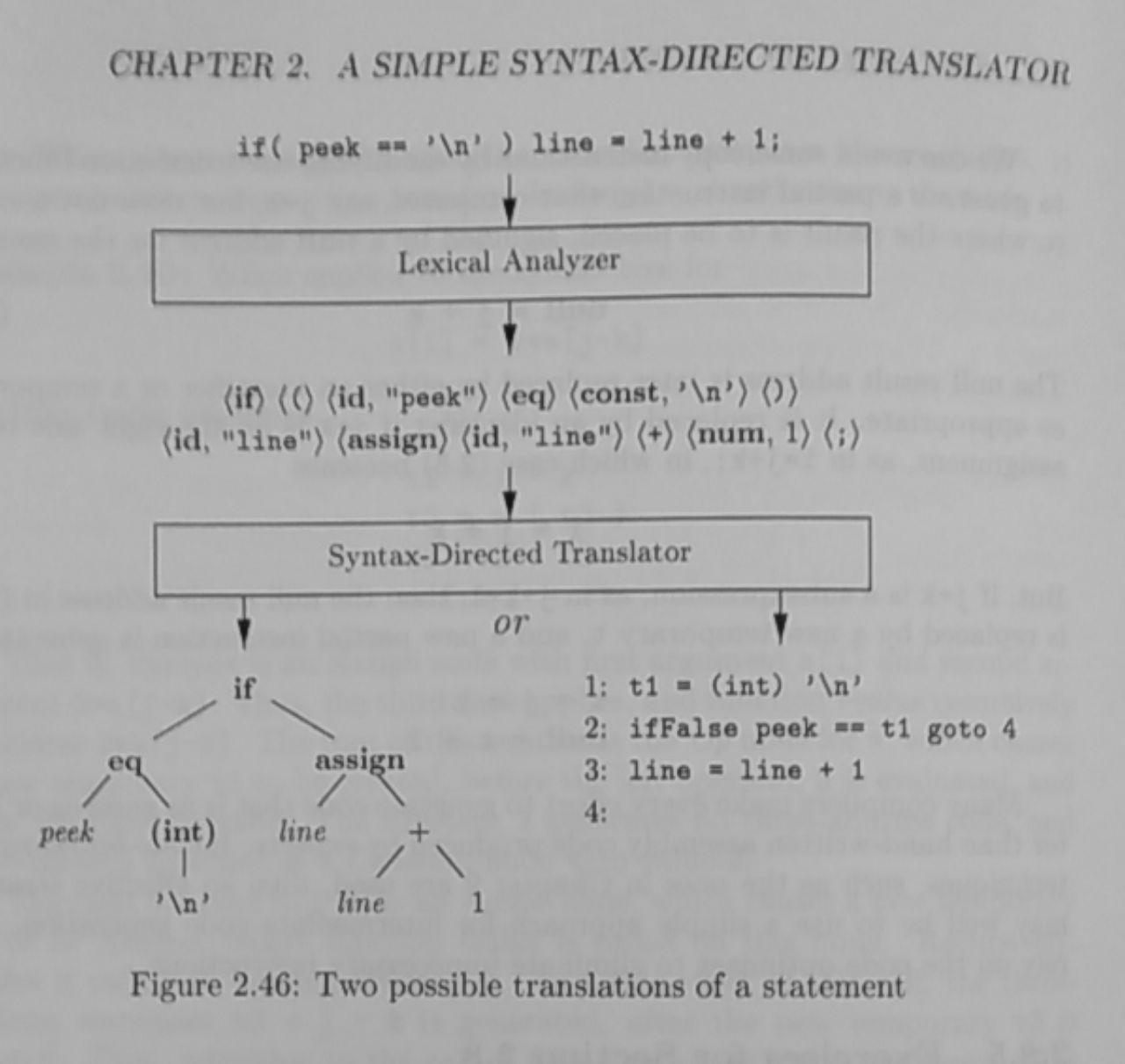

Syntax-directed translation scheme: Attaching rules (semantic rules) or program fragments to productions in a grammar. The output is the translated program.

-

Attributes: Any quantity associated with a programming construct.

-

Syntax tree: The tree generated from a syntax-directed translation.

-

Synthesized attributes: We can associate attributes with terminals and nonterminals, then also attach rules that dictate how to fill these attributes. This can be done in syntax-directed translation.

-

Semantic rules: When displaying a syntax-directed grammar, the semantic rules are the attached actions that need to be done to synthesized attributes (other than the usual production).

-

Tree traversal: How we visit each element of a tree, could be depth first, aka go to children first, or breadth first/top-down, aka root first.

-

Translation schemes: executing program fragments, semantic actions, instead of concatenating strings.

-

Top-down parsing: Start at the root/breadth first, the starting nonterminal, and repeatedly perform: select one production at that node and construct children, find next node at which the subtree is constructed. The selection involves trial and error.

-

lookahead symbol: The current or future terminal being scanned in the input. Typically, the leftmost terminal of the input string.

-

Recursive-descent parsing: a top-down method of syntax analysis in which you recursively try to process the input. There’s a set of procedures, one for each nonterminal.

void A() {

Choose an A-production, A->XaX2 ... Xk;

for (i = 1 to k) {

if (Xi is a nonterminal) {

call procedure Xi();

} else if ( i equals the current input symbol a) {

advance the input to the next symbol;

} else {

/* an error has occurred */

}

}

}-

Backtracking: Going backward in the input to parse them again using another production as the new choice.

-

Predictive parsing: A form of recursive-descent parsing in which the lookahead symbol unambiguously determines the flow of control through the procedure body of non-terminal. This implicitly defines a parse tree for the input and can also be used to build an explicit parse tree. The procedure does two things: It decides which production to use by examining the lookadhead symbol if it is in the

FIRST(a), The procedure mimics the body of the chosen production, it fakes execution until a terminal. -

FIRST(a): Function to return the set of terminals that appear as the first symbols of one or more strings of terminals generated froma.- If

Xisaterminal, thenFIRST(X) = {X}. - If

Xisanonterminal andX-> Y1Y2...Ykis a production, then placeainFIRST(X),ainFIRST(Yi)andεin all ofFIRST(Y1)...FIRST(Yi-1), that basically means thatX -> ε a. - If

X -> εis a production, then addεtoFIRST(X).

- If

FOLLOW: Function to return the rightmost symbols in the derivation sentential form.- Place

$inFOLLOW(S),Sis the start symbol - If there is a production

A-> aBbthen everything inFIRST(b)exceptεis inFOLLOW(B), so in sum any terminal that followsB - If there is a production

A -> aB, or a productionA -> aBb, whereFIRST(b)containsε, then everything inFOLLOW(A)is inFOLLOW(B).

- Place

- Left recursion: A recursive-descent parser could loop forever, we

need to avoid that. It can be eliminated by rewriting the offending

production. Example

A -> Aa | Bwhich is left recursive, can be rewriten asA -> BR, R -> aR | ε.

Algorithm to remove left recursion:

arrange the nonterminals in some order A1,A2,..., An

for (each i from 1 to n) {

for (each j from 1 to i-1) {

replace each production of them form Ai -> Aiy by the

productions Ai -> d1y | d2y| .. | dky, where

Aj -> d1 | d2 | ... | dk are all current Aj-productions

}

eliminate the immediate left recursion among the Ai-productions

}-

Left Factoring: When it’s ambiguous which production to select in

A -> aB1 | aB2, we can defer the selection to later by factoring it toA -> aA1andA1 -> B1 | B2. We factor by the most common prefix. -

Abstract syntax tree or syntax tree: A tree in which interior nodes represent an operator and children node represent operands of the operator. They differs from parse tree in the way that they have programming construct in interior nodes instead of non-terminals.

-

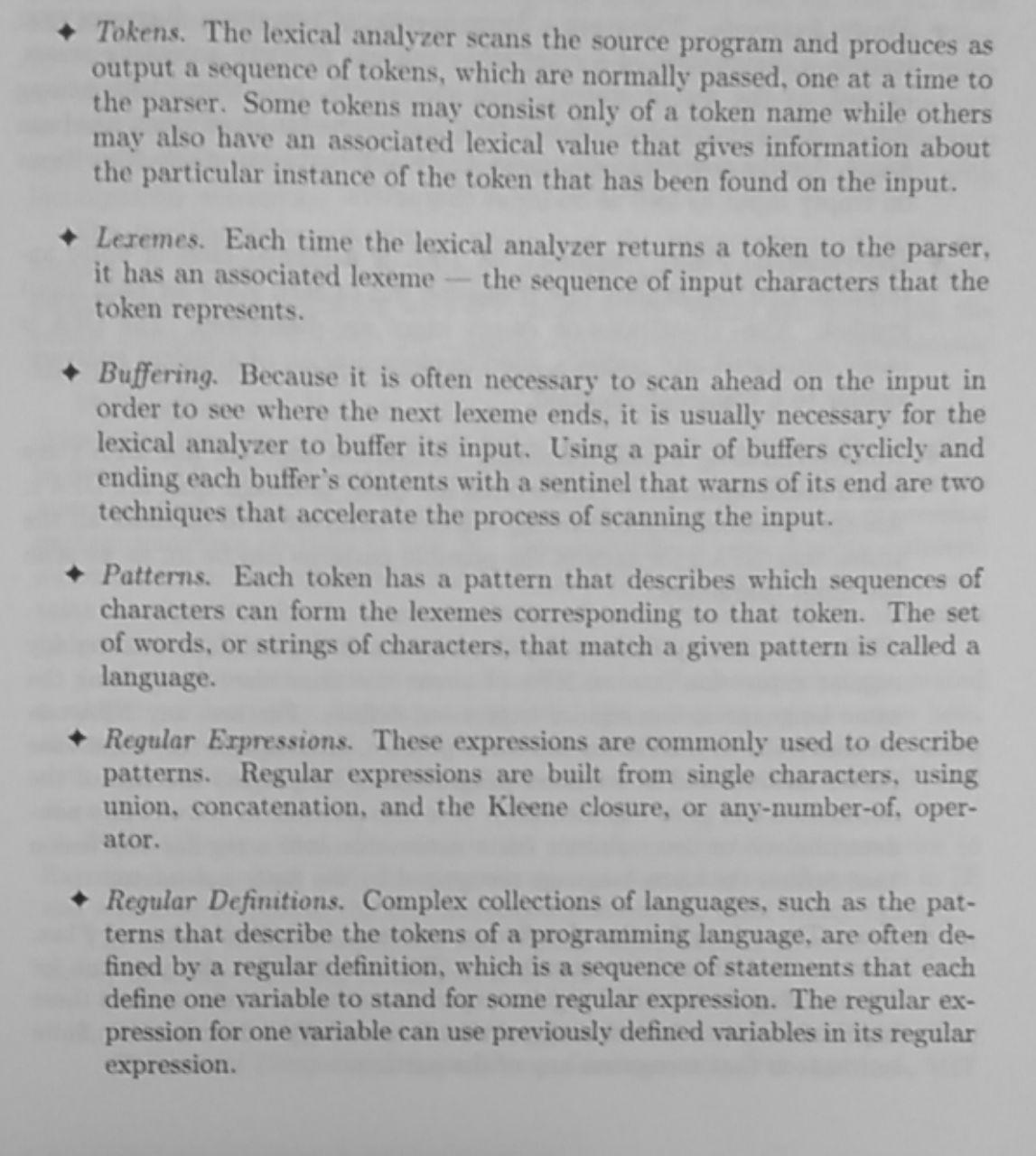

Token: Terminal with additional information, name and optional attribute value. The name is an abstract symbol representing a kind of lexical unit, be it a keyword, an identifier, etc.

-

Lexeme: sequence of characters from the source program that comprises a single token name. It’s an instance of that token.

-

Pattern: A description of the form that the lexeme of a token may take. A sequence of characters that form a keyword or form identifiers and other tokens, any more complex string structure that needs to be matched.

-

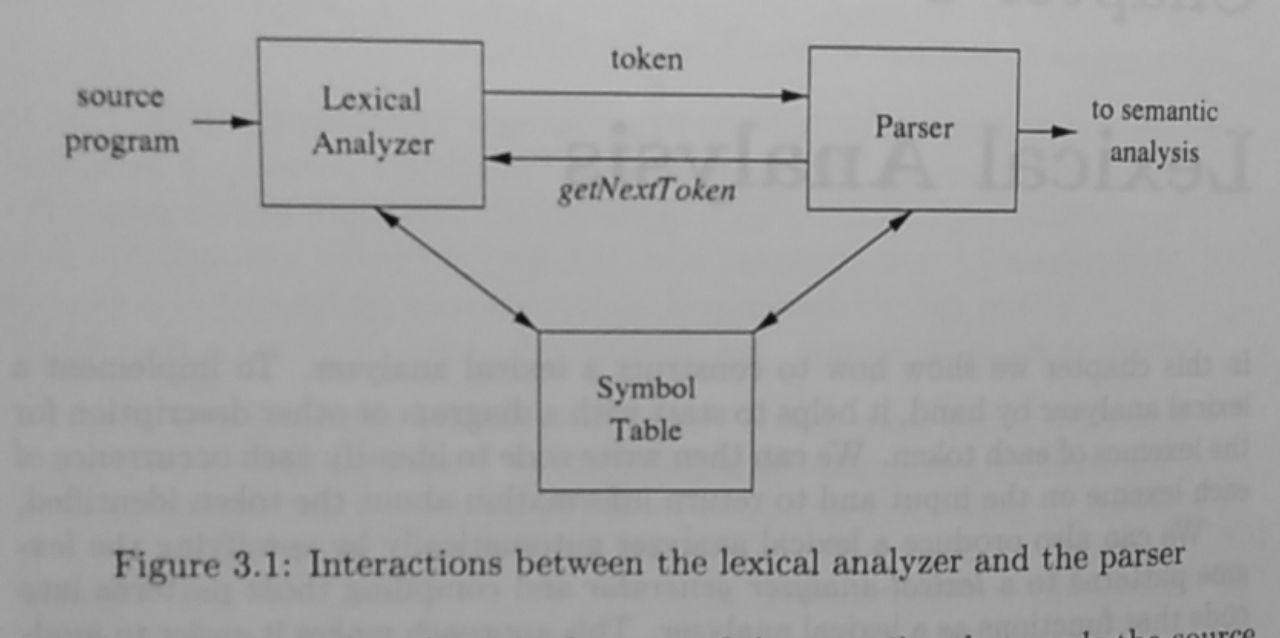

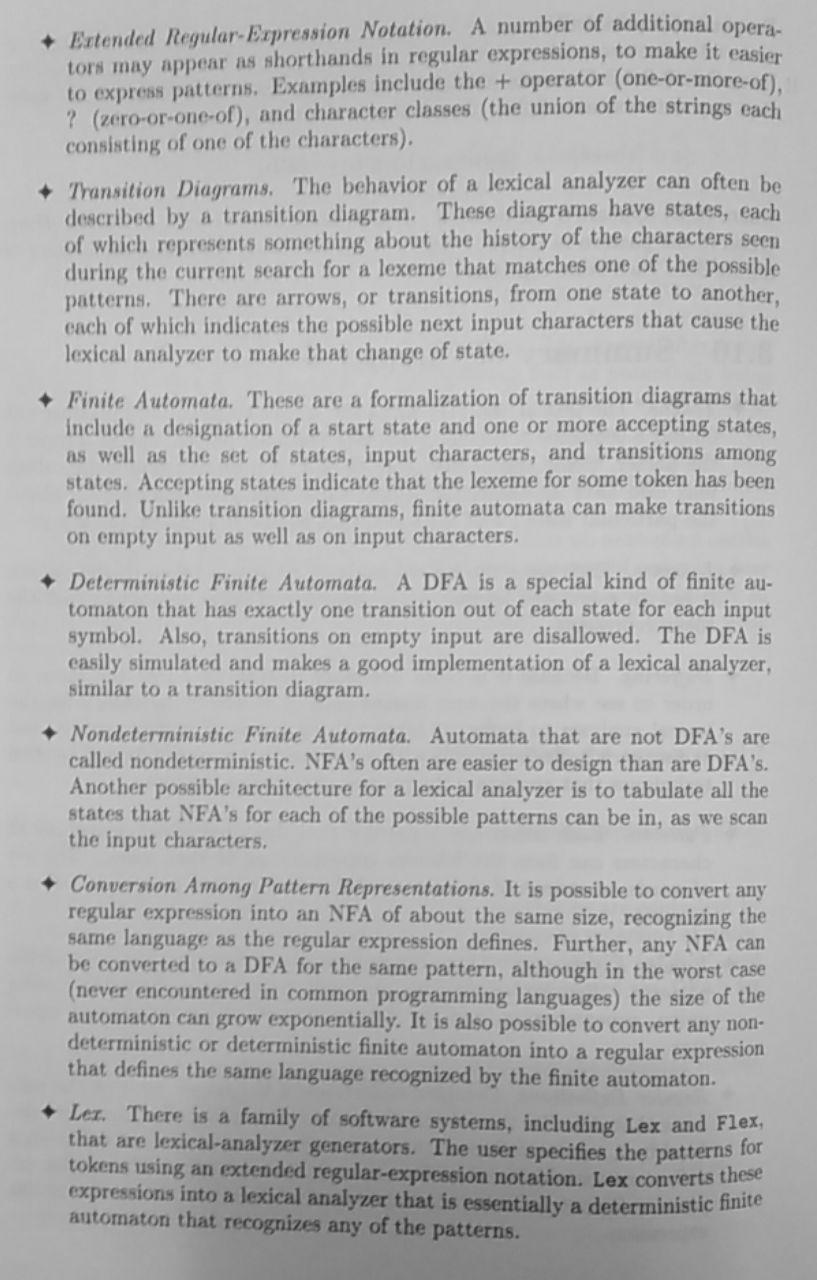

Lexical analysis/analyzer: a lexical analyzer reads characters from the input and groups them into “token objects”. Basically, it creates the tokens. It could be split into two parts, a scanning that consists of processing input by removing comments and compacting white spaces, and a proper lexical analysis that is the more complex portion that produces the token.

-

Reading Ahead: It’s useful to read future characters to decide if they are part of the same lexeme. A technique is to use an input buffer or a peek variable that holds the next character.

-

Input buffering: The best technique is to use a buffer pairs, 2 buffers of the size of a disk block so that reading is more efficient. We use a

lexemeBeginpointer and a forward pointer. To check if we are out of bound of a buffer or that reading is finished we can use “sentinels”, special characters that specify the end of file, if in the middle, or end of buffer, if at the end of the buffer. This character can beEOF. -

Keywords: character strings, lexeme, that identify constructs such as

if,for,do, etc. -

Identifier: also a character string, lexeme, that identify a named value.

-

Symbol Tables: Data structures used by compilers to hold information about source-program constructs. The info is collected incrementally by the analysis phase and used by the synthesis phases to generate the target code. Entries in it contain info about identifiers such as its character string (lexeme), its type, its position in storage, and any other relevant info. Each scope usually have their own symbol table. It gets filled during the analysis phase, the semantic action fills the symbol table, then for example

factor -> id,idtoken gets replaced by its symbol that was declared in the table. -

Intermediate Representations: The frontend generates an intermediate representation of the source program so that the backend can generate the target program. The two most important are: Trees (parse trees and abstract syntax trees), and linear representations (such as three-address code).

-

Static checking: The process of checking if the program follows syntactic and semantic rules of the source language. Assures that the program will compile successfully, and catches errors early. Contain: Syntactic checking: checks grammar, identifier declared, scope check, break statement at end of loops, and type checking.

-

Type Checking: Assures that an operator or function is applied the right number and type of operands, also handles the conversion if necessary aka “coercion”.

-

Strings and languages: A string is synonym for word or sentence. It’s a finite set of characters, its length measured as

|s|.ε, ore, is the string of length 0,|ε| = 0. Strings can be concatenated, if concatenated withεthey stay the same. We can define exponentiation of strings, as ins**0 = ε,s**1 = s,s**2 = ss,s**n = s**(n-1) s. Language is the countable set of strings over an alphabet, a set{ x y z }, empty set is 0 or{ ε }. -

Operations over language: We can perform union, concatenation, and closure, which are the most important operations. Union of two different languages is the same as in set theory. Concatenation is strings formed by taking strings from the first language and string from the second language. Closure aka kleene of

LorL*is a set of string you can get by doing concatenation ofLzero or more time.L+, positive closure is 1 or more time.

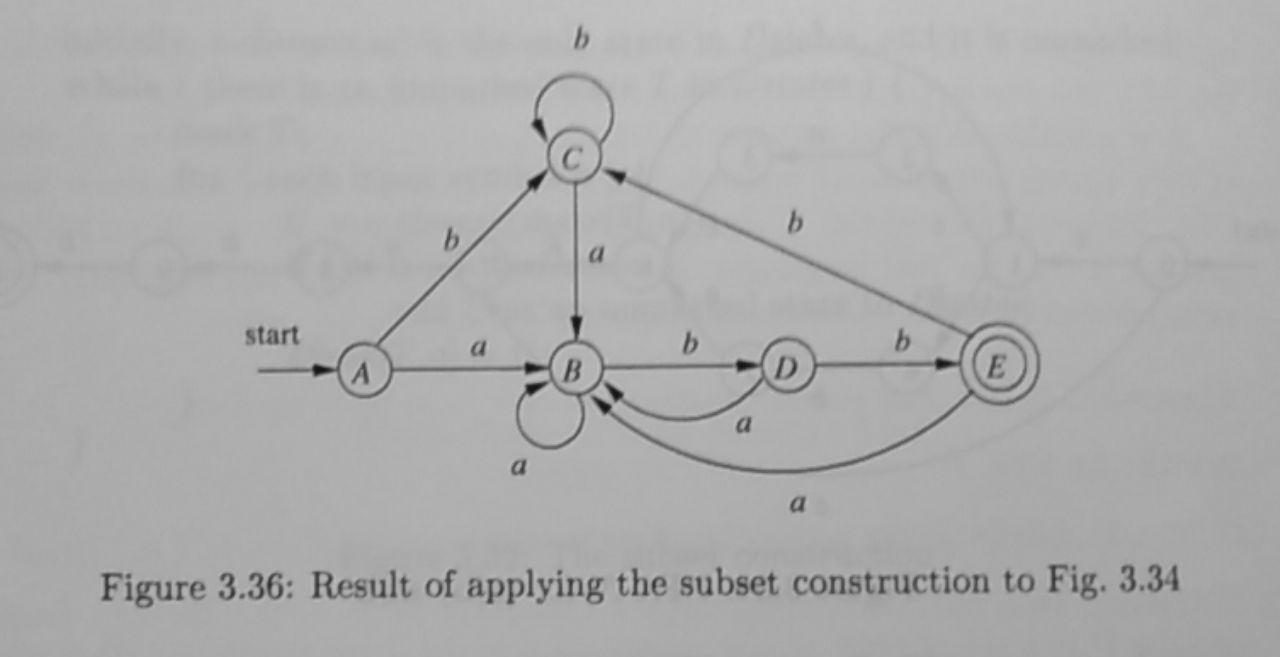

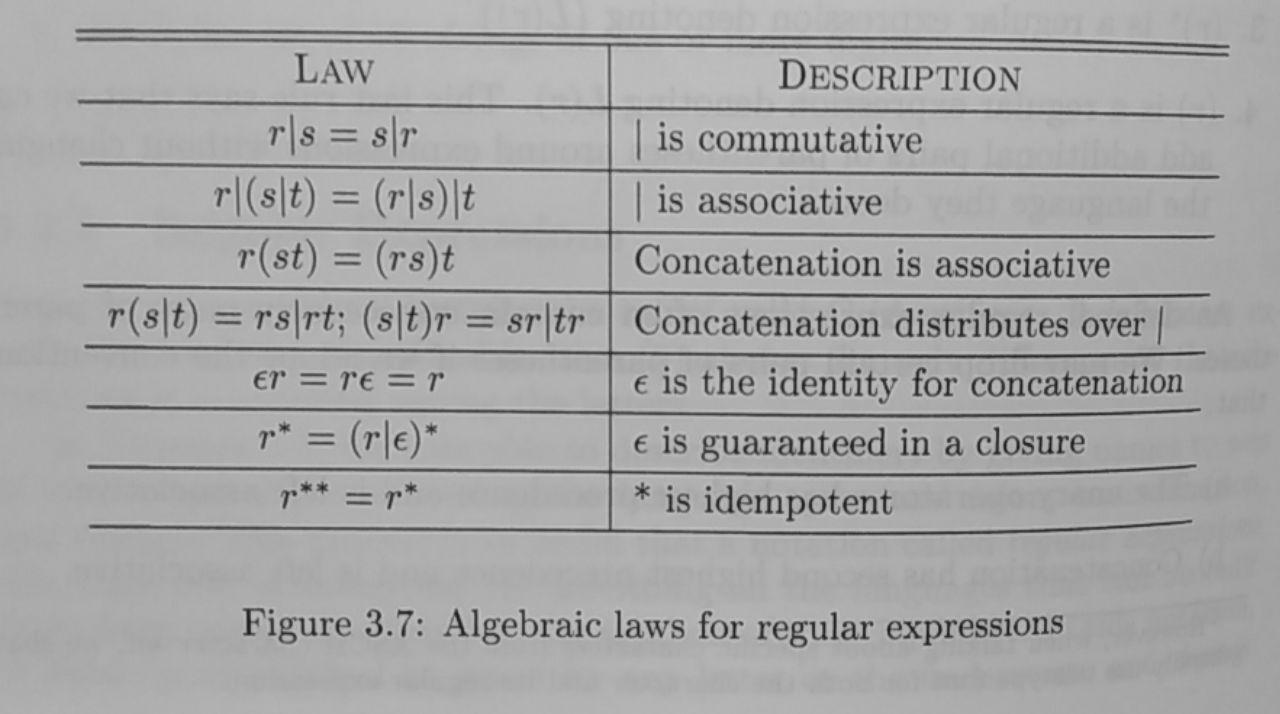

- Regular expressions aka regex: The joint of all operations over

language done in an expresive way. There are precedence priority

rules: All are left associative, the highest precedence goes to

*, then concatenation, then union|. A language that can be defined by regular expressions is called a regular set.

- Regular definitions: Like variables holding a regex for later use, to make it more readable.

- Aho-Corasick algorithm: Algorithm that permits to find the longuest

prefix that matched a single keyword

b1b2..bnthat is found as a prefix of a string. It defines a special transition diagram called a trie, it is a tree structured transition diagram. Define for every node of that tree a failure function which is the previous state that fits the prefix,f(s),sbeing the current position in the string we are trying to match. The seek pointer should be put back atb(f(s)+1)in case of error. There’s also the KMP algoritm to match the string.

Pseudo code for failure function:

t = 0;

f(1) = 0;

for (s = 1; s < n; s++) {

while (t > 0 && b(s+1) != b(t+1)) t = f(t);

if (b(s+1) == b(t+1)) {

t = t + 1;

f(s+1) = t;

} else {

f(s+1) = 0;

}

}- Conflict resolution in Lex:

- Always prefer a longer prefix to a shorter prefix.

- If the longest possible prefix matches two or more patterns, prefer the pattern listed first in the Lex program.

-

Constructing parse tree through derivation: Begin with the start symbol and then at each step replace a nonterminal by the body of one of its production. It’s a top-down construction of a parse tree. We use the

=>to denote “derives”. This proves that a certain terminal derives, in a number of steps, from a particular instance of an expression. If a form with no-nonterminals derives from the start symbol we can say that it is a sentential form of the grammar. The language of a grammar is the set of sentences. Some grammars can be equivalent, different path for same sentence, we denote leftmost derivation and rightmost/canonical derivation. -

LL(1) grammars: L for left to right scanning, L for a leftmost derivation, 1 for using one input symbol of lookahead at each step to make parsing action decisions.

-

Constructing parse trees through reduction: Reduction or bottom-up parsing, is the inverse of derivation, it consists of reducing terminals until the start symbol is found. A “handle” is a substring that matches the substring of the body of a production, it is reduced to the left-most/head of it.

-

LR(k) parsing: L for left to right scanning of the input, R for constructing the rightmost derivation in reverse, and the k for the number of input symbols of lookahead that are used in making parsing decisions.

-

Items in LR(0): States represent sets of “items”, it’s a production of G grammar with a dot at some position of the body indicating where we are in the parsing. For example:

A -> X . Y Z -

Augmented expression grammar in LR(0): a grammar with added initial state

S'that producesS, such as:S' -> S, we accept the state once everything is reduced toS'. -

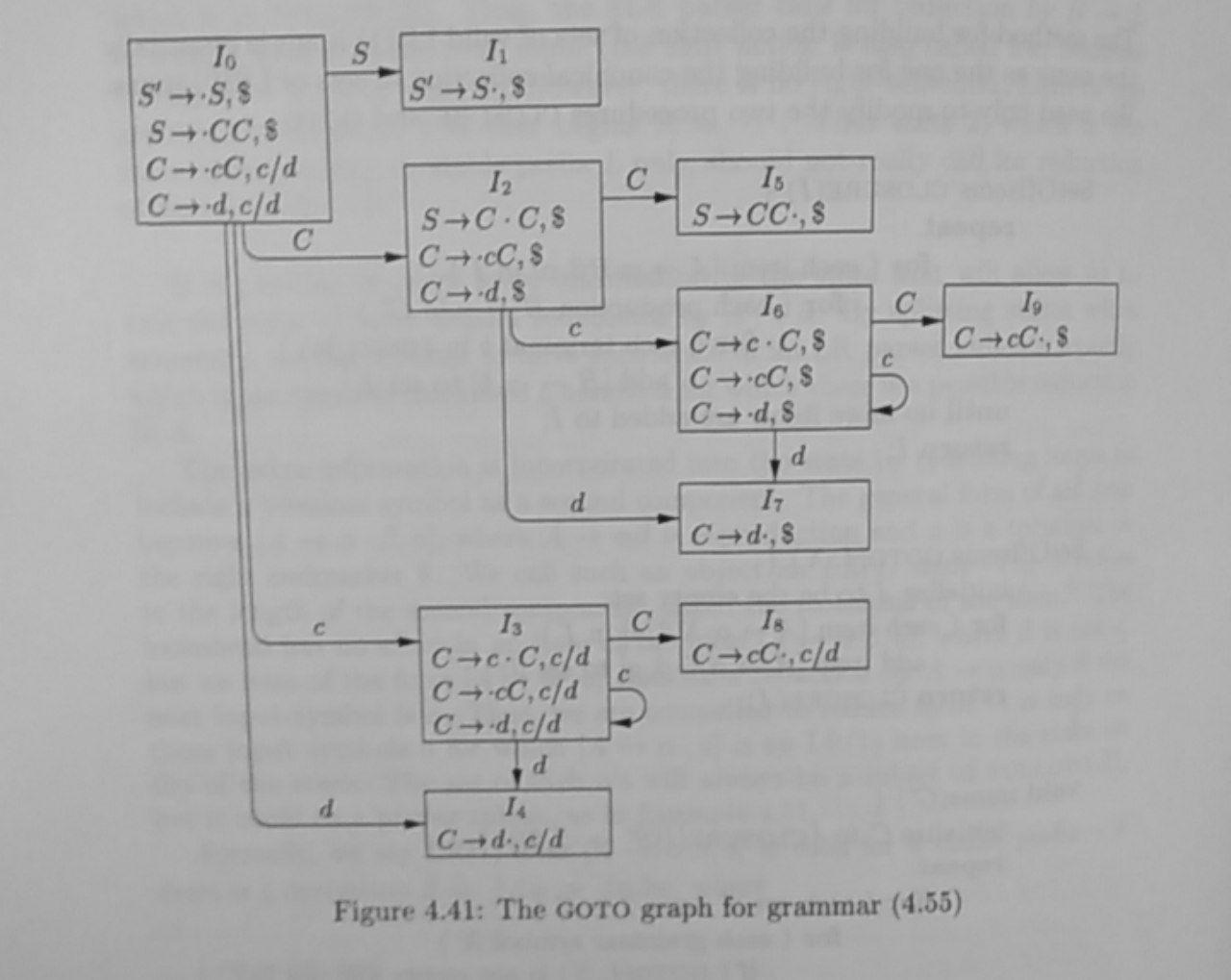

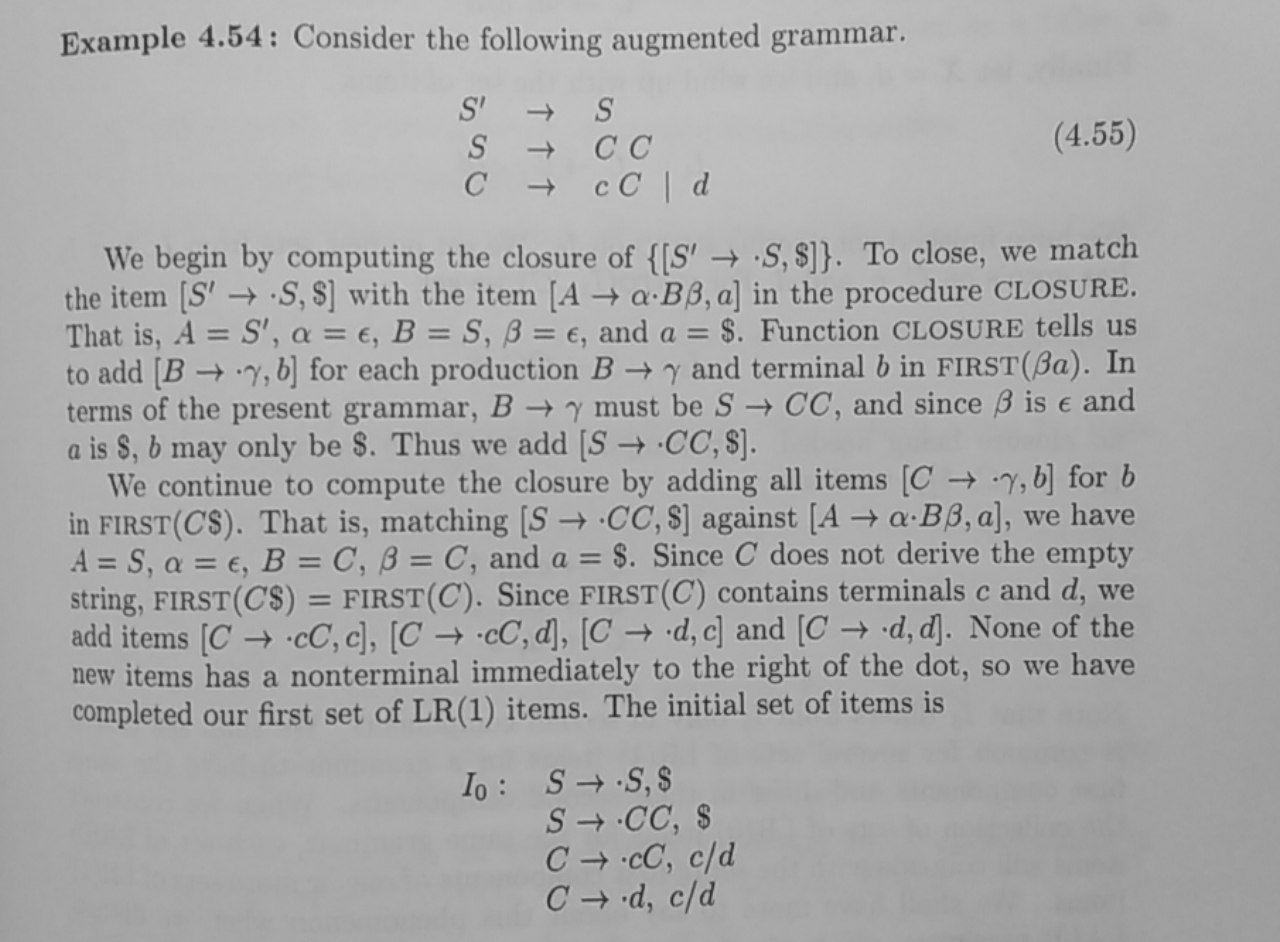

CLOSUREandGOTOin LR(0): TheCLOSUREof a set of itemsIfor a grammarGis constructed as follows: add every items inIto theCLOSURE(I), ifA -> a.Bbis inCLOSURE(I)then replaceBby whatBproduces, example:B -> y, then we addA -> a.ybwe do this until we cannot apply this rule anymore. We call the added ones nonkernel items, and the initial ones kernel items.

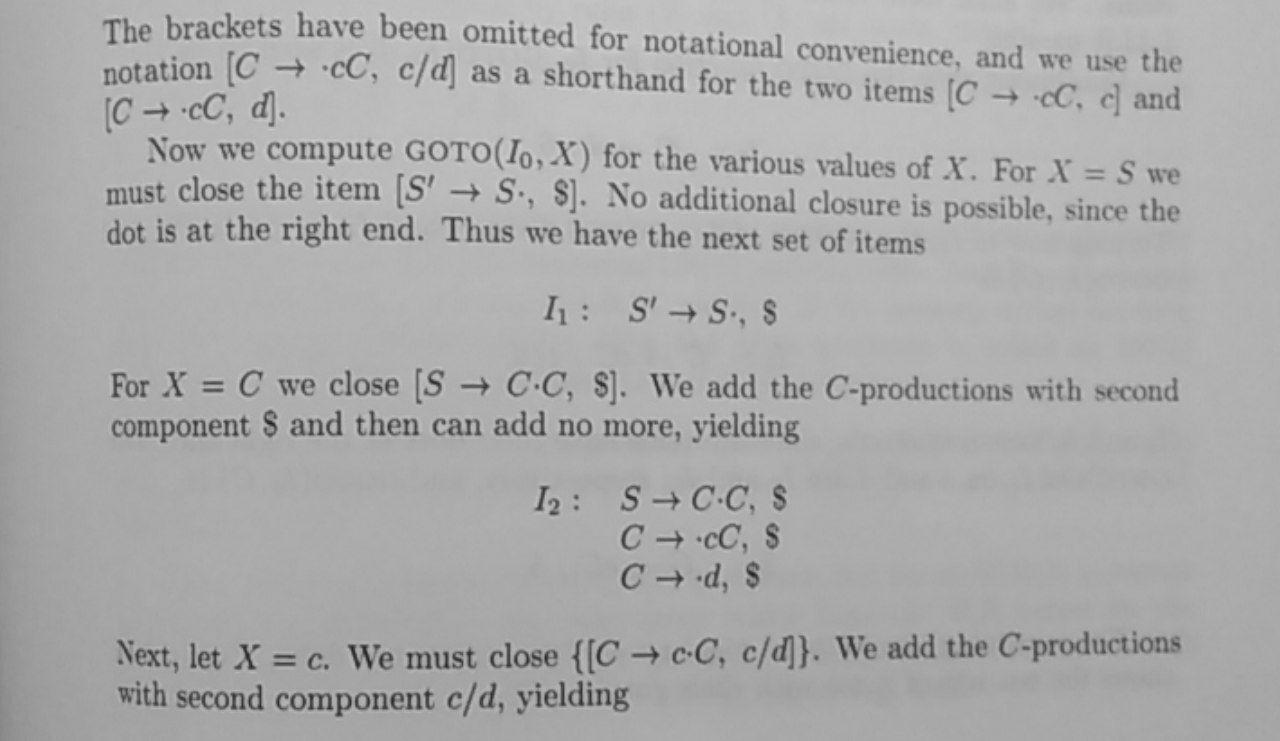

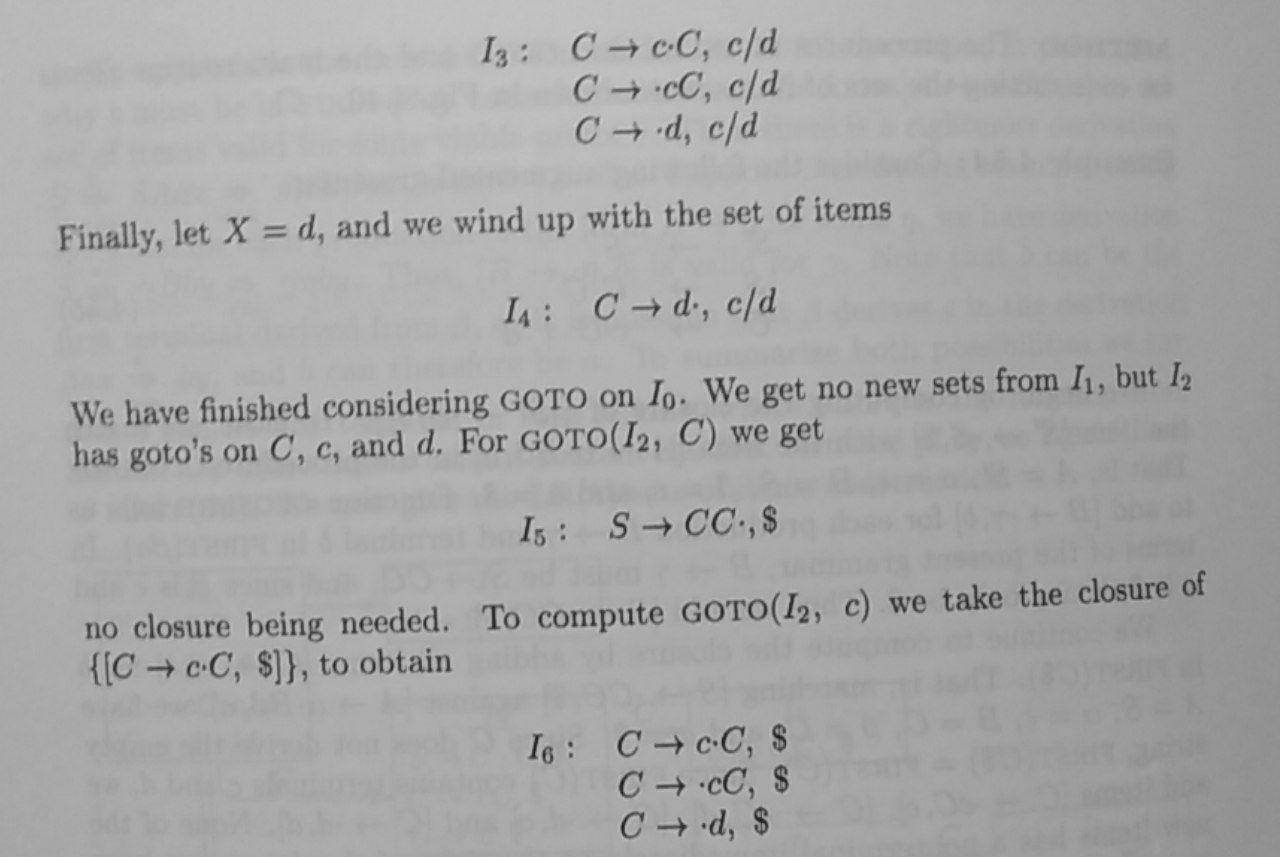

TheGOTO(I, X), whereIis the set of items andXa grammar symbol, defines the closure of the set of all items[ A -> a X. b ]such that[A -> a .X b]is inI. It defines the transitions in the LR(0) automaton for a grammar on inputX. -

CLOSURE and GOTO in LR(1): LR(1) is similar to LR(0) however it has one lookahead character, an item as the form:

[A -> a.B, a]where this production is valid only when the next input symbol isa.

SetOfItems CLOSURE(I) {

repeat

for (each item [A -> a.Bb, a] in I)

for (each production B -> y in G')

for (each terminal c in FIRST(ba)

add [B -> .y, c] to set I;

until no more items are added to I;

return I;

}

SetOfItems GOTO(I, X) {

initialize J to be the empty set;

for (each item [A -> a.XB, a] in I)

add item [A -> aX.B, a] to set J;

return CLOSURE(J);

}

void items(G') {

initialize C to {CLOSURE({|S' -> .S, $|})};

repeat

for (each set of items I in C)

for (each grammar symbol X)

if (GOTO(I, X) is not empty and not in C)

add GOTO(I,X) to C;

until no new sets of items are added to C;

}

-

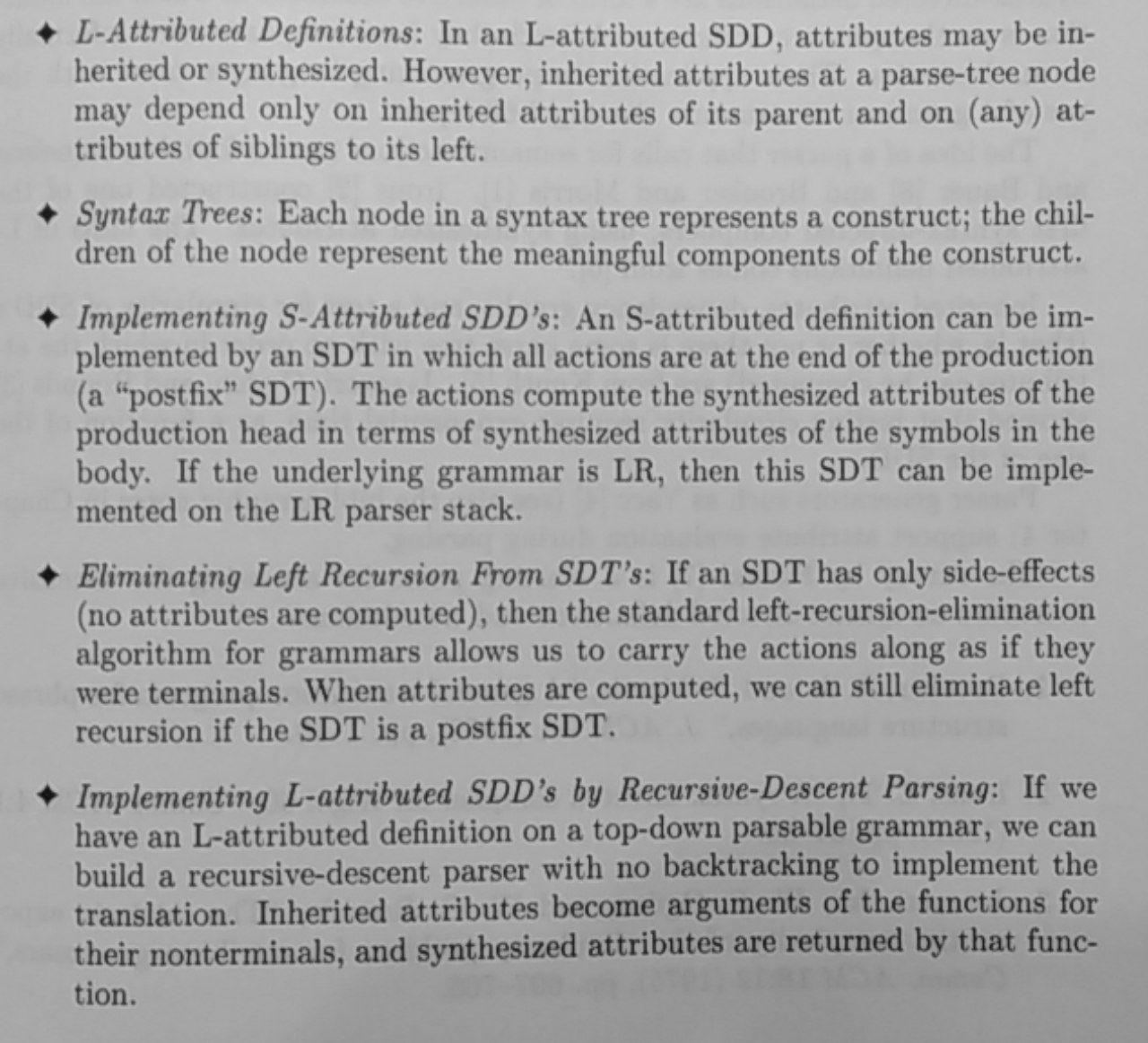

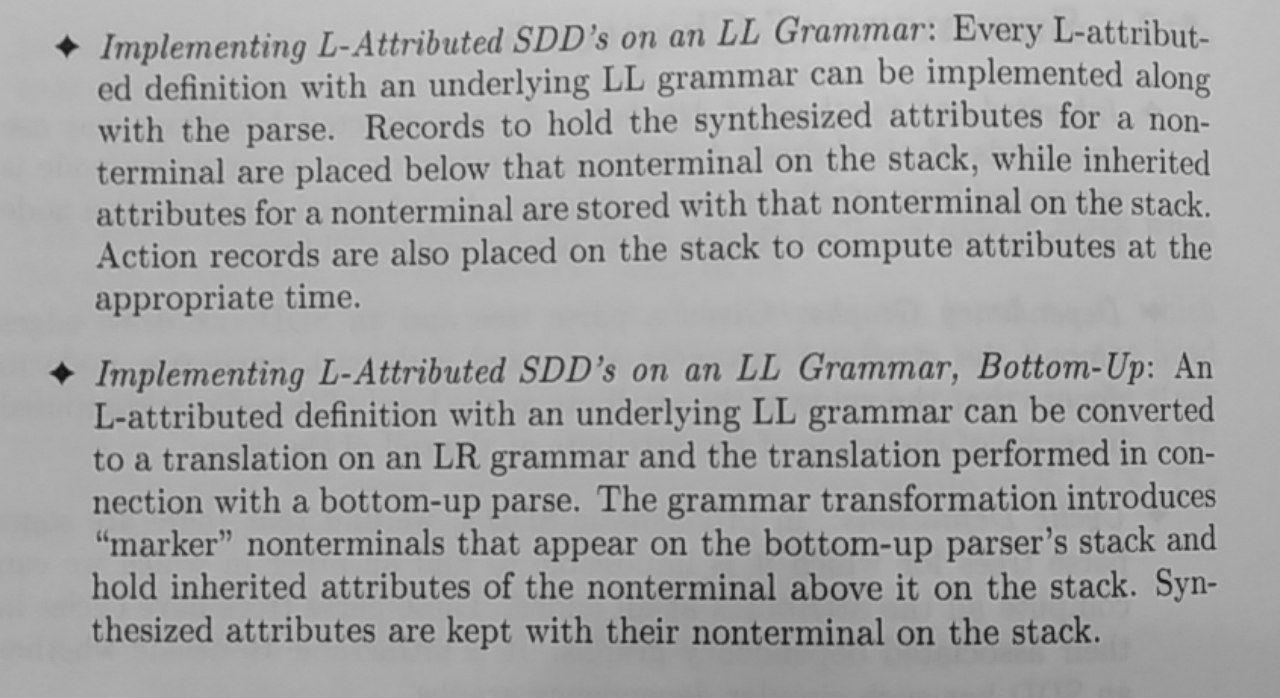

L-attributed translations: Class of syntax-directed translations (L for left-to-right), which encompass virtually all translations that can be performed during parsing.

-

SDD, syntax-directed definition: Context-free grammar together with attributes and rules. Attributes are associated with grammar symbols and rules are associated with productions. If

Xis a symbol,X.ashowsaas an attribute ofX. -

Synthesized and Inherited attributes: Synthesized attributes at node N for nonterminal

Aare computed from the semantic rules at that node, while inherited the attribute of the children are computed from the parent’s semantic rules. Terminals only have synthesized attributes. -

S-attributed SDD: A syntax directed definition that only contains synthesized attributes, that is the head attributes are computed from its production body at node N only (not parent).

-

L-attributed SDD: Where the inherited attributes are only defined by one of the attribute on the left or in the head of the production (left-to-right).

-

Attribute grammar: An SDD without side effects.

-

Annotated parse tree: A parse tree showing the value(s) of its attribute(s).

-

Dependency graph: A graph with arrows/edges pointing in the direction of the value that depends upon the other side of those arrows. It’s applicable for both synthesized attributes and inherited attributes.

-

Topological sort: a way of sorting the dependency graph in a way in which the attributes/node have to be processed. When there are loops in the dependency, topological sorts are not possible.

-

Syntax-directed translation (SDT) for L-Attributed Definition: A syntax directed translation where we put the action/semantic-rule right before the character that requires them, and put the semantic-rule of the head as the last rule.

- Directed acyclic graph (DAG): A way to convert a syntax-directed definition into a graph where leaves are unique/atomic operand, and interior nodes correspond to operators. A leaf node can have many parents. It expresses the syntax tree more succintly and can be used for generation of efficient code to evaluate expressions. Nodes can be stored in an array of records, where each row represents one node. Leaves have a field as lexical value and interior nodes have two fields for left and right children.

.--------------.

|1| id | ----|-> to entry for i

|2| num | 10 |

|3| + |1 | 2 |

|4| = |1 | 3 |

|5| .... |

.- = .

.' `.

: +

: .' `.

`. .' `

i 10

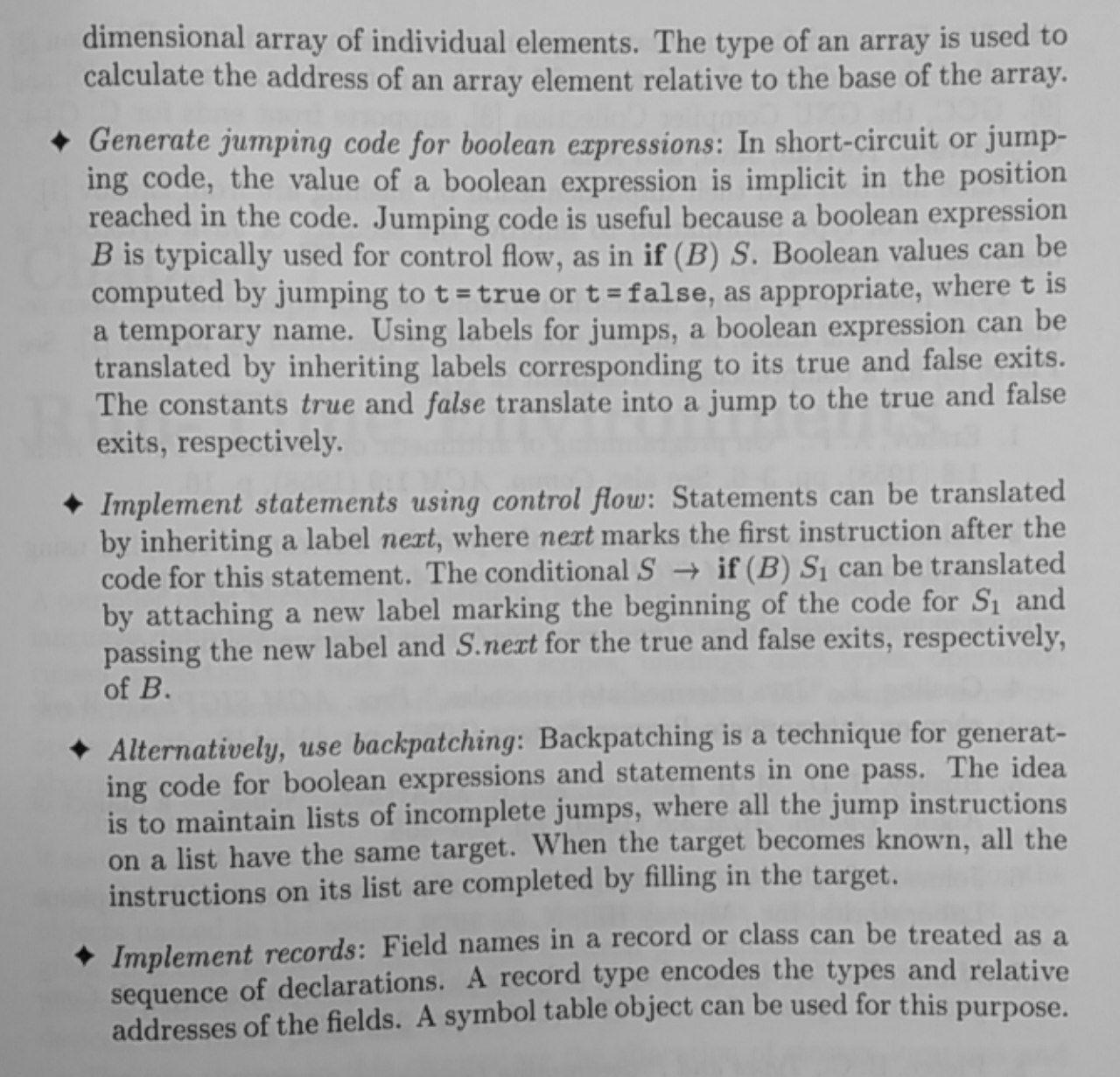

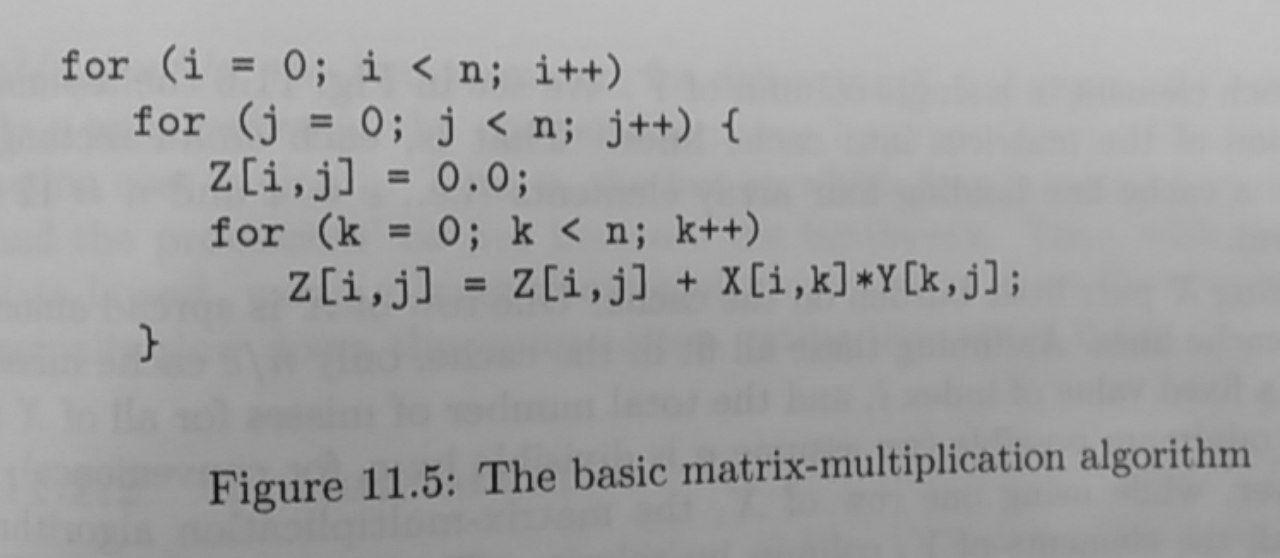

- Three-address code: Instructions where there are at most one operator

on the right side. It is a linear representation of a syntax tree or a

DAG in which explicit names correspond to the interior nodes of the graph.

Three-address code is composed of addresses and instructions. An address could

either be a name, a constant, or a compiler-generated temporary. Common

instructions used can be an assignment instruction

(x = y op z), unary operator assignment(x = op y), copy instruction of the formx = y, unconditional jumpgoto L, conditional jump of the formif x goto LandifFalse x goto L, conditional jump such asif x relop y goto Lrelopbeing a conditional operator, and procedure calls such asparam xfor parameters andcall p, nandy = call p, n(last n arguments) for procedures and function calls respectively, andreturn yybeing the returned value, indexed copy instructions of the formx = y[i]andx[i] = y, and address and pointer assignments of the formx = &yx = *yand*x = y.

-

Quadruples (in the context of three-address code): A table where we map 4 columns:

op,arg1,arg2,result. Unary operators don’t fillarg2,paramdon’t fillarg2nor result, and conditional jumps have the target label in result. -

Triples (in the context of three-address code): A table where we map 3 columns, similar to quadruples, but without the result. The result is referred to by its position only. They are one to one with syntax tree. Indirect triples are like triples but instead of pointing the result directly we point to the result position in a separate instruction table, and thus can move chunks of code independently.

-

Static single-assignment form (SSA): An intermediary representation similar to three-address code but where all assignments are to variables with distinct names. It uses ø-function to combine definitions of the same variable, returns the value of the asignment-statement corresponding to the control-flow path.

-

Translation applications: From the type of a name, the compiler can determine the type of storage (storage layout) that will be needed for that name at run time. Type information can be used to calculate addresses denoted in arrays for example.

Array layout is either row major or column major, as:base + (i-low)*wSome types could be left chosen by the output archicture, left as symbolic type width in the intermediate representation. -

Type checking: A method the compiler uses, with a type system, to assign type expression to each components of a source program to avoid inadvertent error and malicious misbehavior. A language is either strongly typed or not, meaning it needs all the types to be chosen explicitly.

Two forms: synthesis and inference, synthesis builds up the type of an expression from the type of its subexpressions. It requires names to be declared before they are used. ex:if f has type s -> t and x has type s, then expression f(x) has type t. Type inference determines the type of a language construct from the way it is used. ex:if f(x) is an expression, then for some a and b, f has type a -> b and x hs type a. -

Implicit and explicit type conversion: implicit conversion is when the compiler coerces the types, usually when widening types, explicit is when the programmer must write something to cause the conversion. Two semantic actions for checking

E -> E1 + E2one ismax(t1, t2)anotherwiden(a,t,w)which widen addressaof typetinto a value of typew.

Addr widen(Addr a, Type t, Type w) {

if (t = w) return a;

else if (t = integer and w = float) {

temp = new Temp();

gen(temp = '=' (float)' a);

return temp;

} else {

error;

}

}-

Polymorphic function: A type expression with a

∀stands “for any type” which the function can be applied to. Each time a polymorphic function is applied, its bound type variables can denote a different type. -

Unification: The problem of determining whether two expressions

sandtcan be made identical by substituting expressions for the variables insandt. -

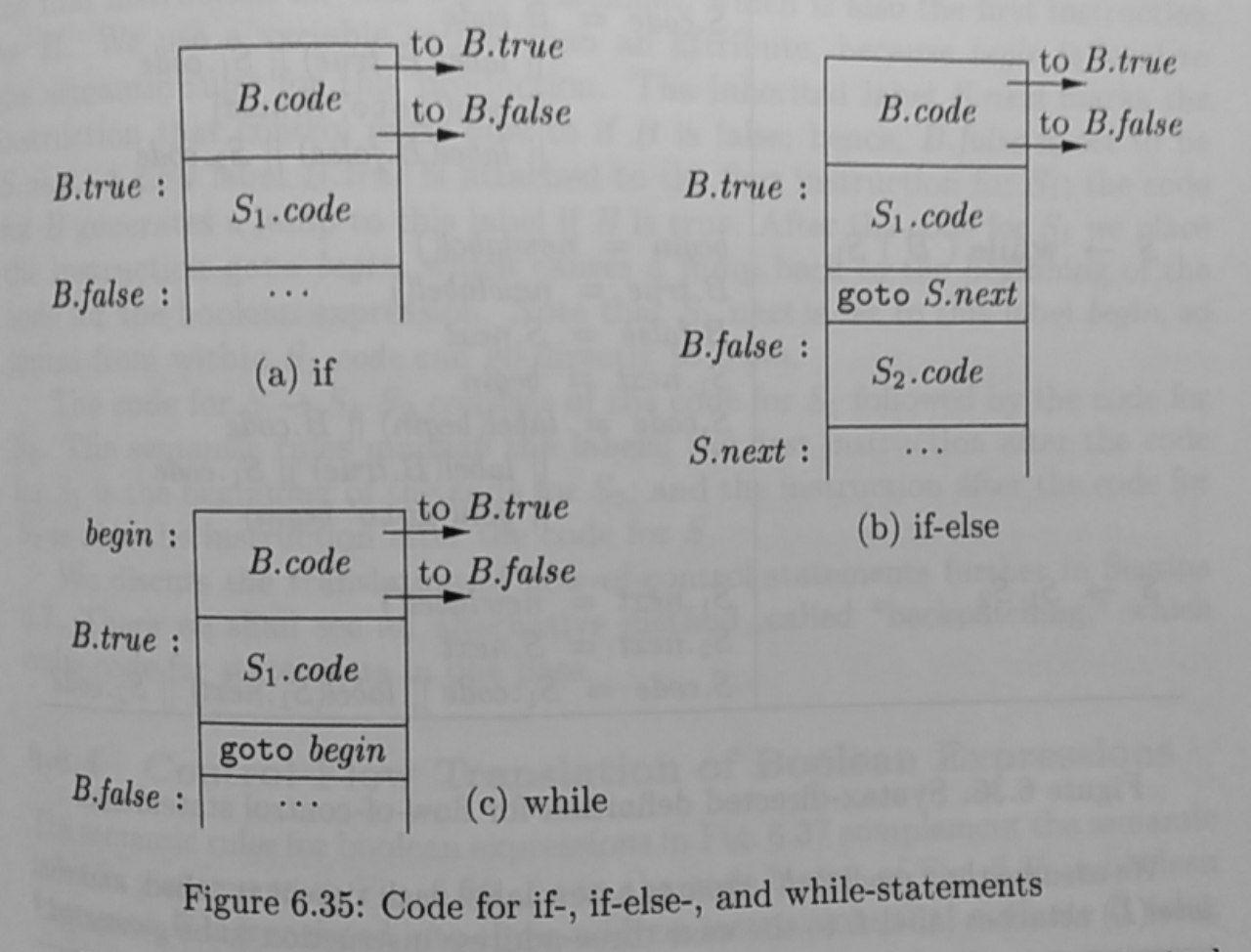

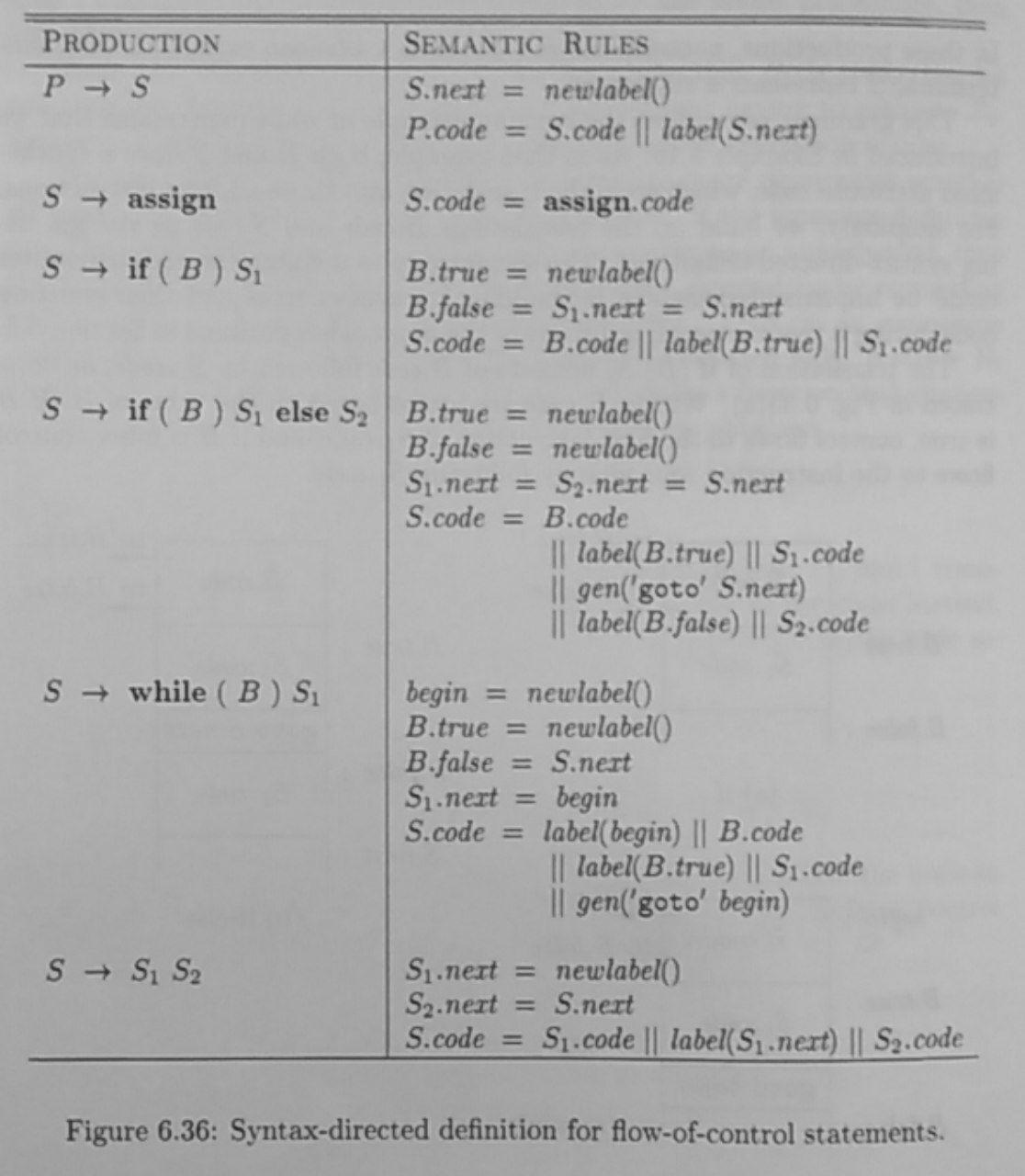

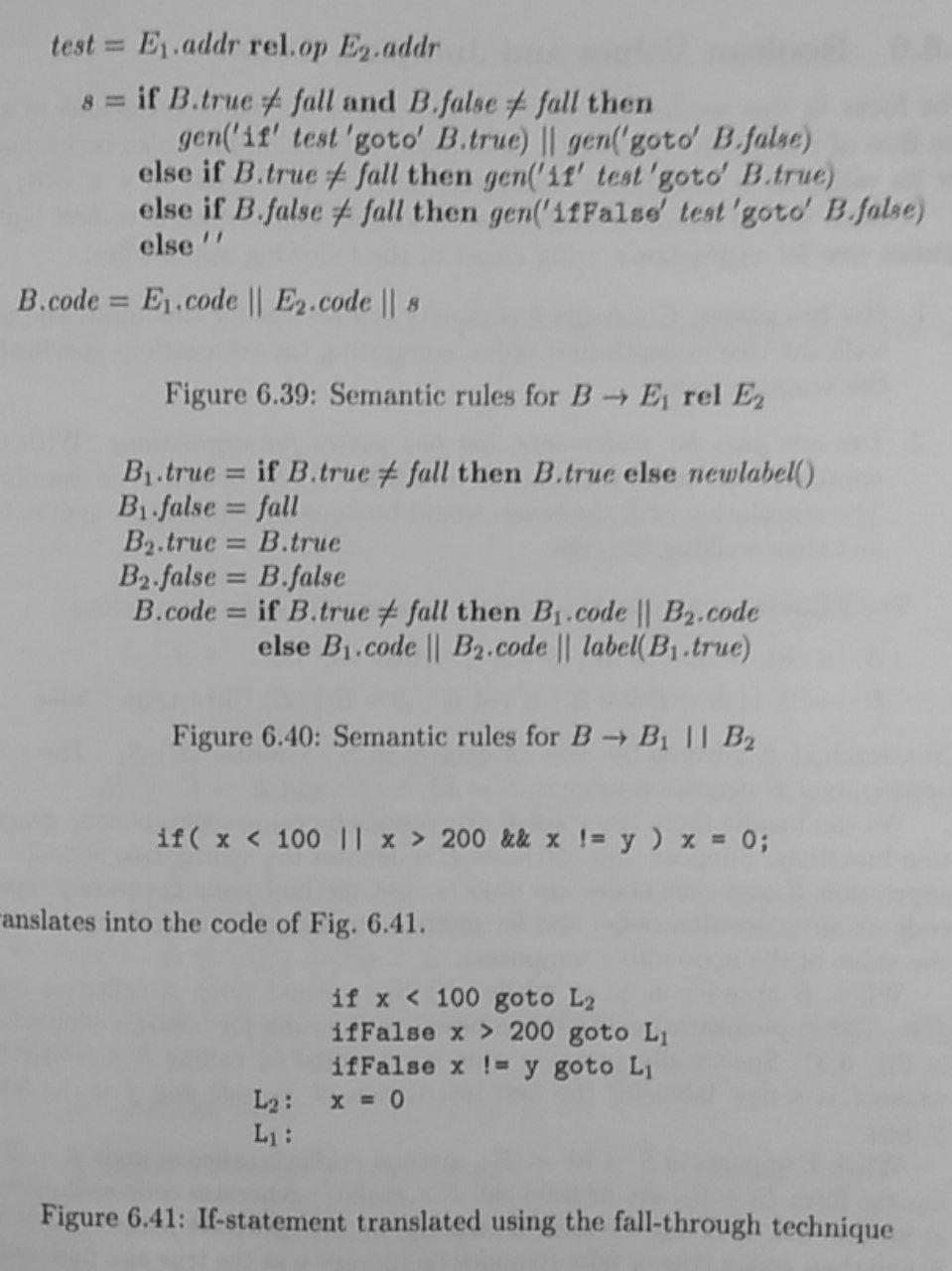

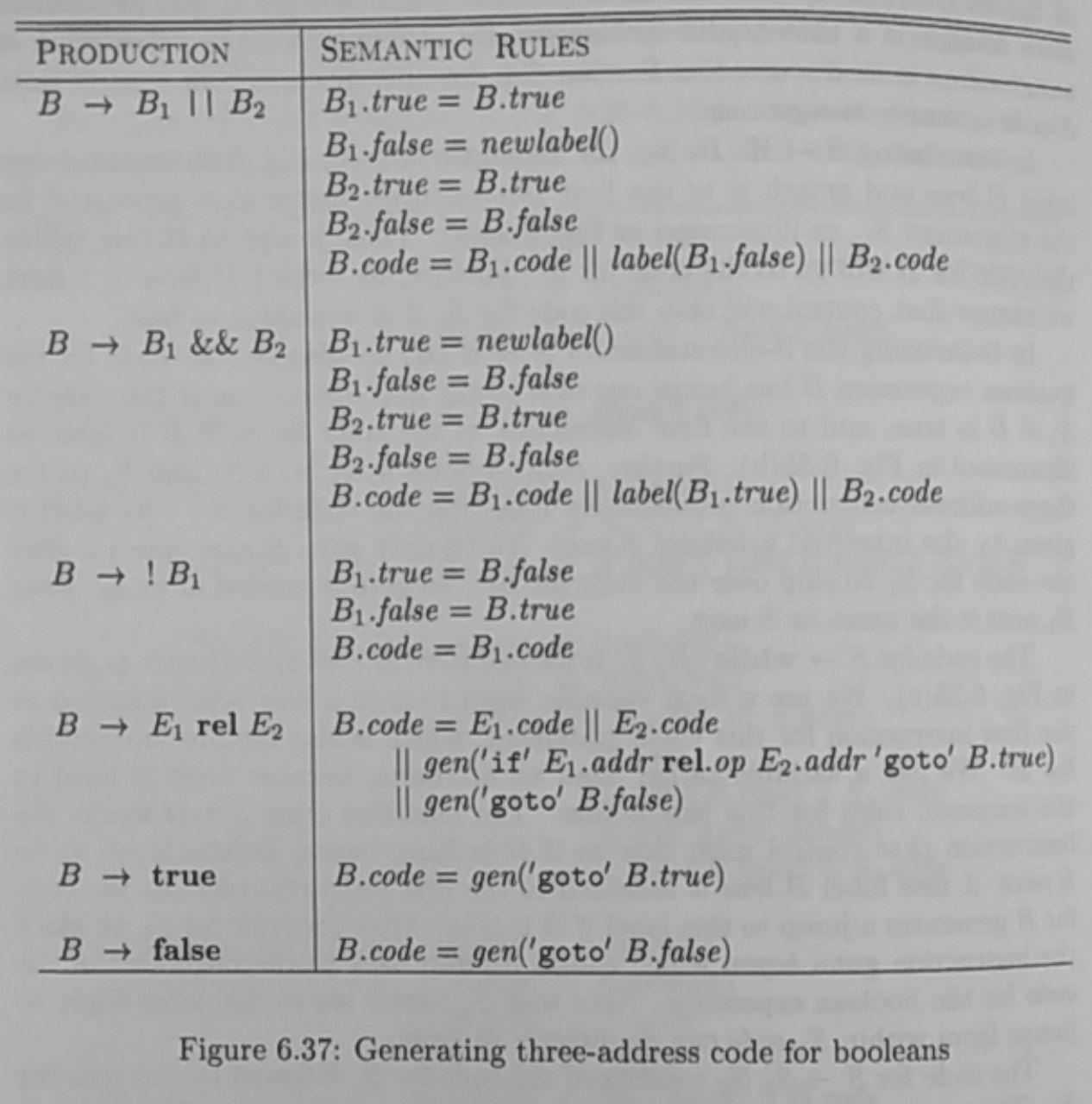

Boolean expressions: Either used to alter the flow of control or to compute logical.

Example:

B -> B || B | B && B | !B | (B) | E rel E | true | falseWe can short-circuit boolean operators, translating them into jumps:

if (x < 100 || x > 200 && x != y) x = 0;equivalent to:

if x < 100 goto L2

ifFalse x > 200 goto L1

ifFalse x != y goto L1

L2: x = 0

L1:- Backpatching: A method of generating labels for jumps in boolean

expression (ex:

if (B)) S) in one pass as synthesized attributes.

- Run-time environment: The environment provided by the operating system so that the program runs. Typically:

Code/Text

Static

Heap

|

V

Free memory

^

|

Stack

-

Stack vs Heap: Stack storage: for names local to a procedure. Heap storage: data that may outlive the call to the procedure that created it (we talk of virtual memory).

-

Memory Manager: A subsystem that allocates and deallocates space within the heap, it serves as an interface between application programs and the operating system. It performs two basic functions: allocation and deallocation.

A memory manager should be space efficient, minimizing the total heap space needed by a program, program efficient, it should allow the program to run faster by making use of the memory subsystem, and have a low overhead, because memory allocation and deallocation are frequent in many programs. -

Garbage collectors: A piece of code to reclaims chunks of storage that aren’t accessed anymore.

Things to consider: overall execution time, space usage, pause time, program locality.

Either we catch the transition when object become unreachable (like reference counting), or we periodically locate all the reachable objects and then infer that all the other objects are unreachable (trace-based). -

Mutator: A subsystem that is in charge of manipulating memory. It performs 4 basic operations: Object allocation, parameter passing and return values, reference assignments, procedure returns.

-

Root set: All the data that can be accessed directly by a program, without having to dereference any pointer.

-

Code generation: The process of generating machine instruction/target program (be it asm or other) from an intermediary representation.

-

Addresses in target code: The code found in a static area is used for, static for global constants, and the heap is the dynamic managed area during program execution, stack is dynamic for holding activation records as they are created and destroyed during calls and returns

-

Basic blocks and flow graphs: Dividing the code into sections called blocks, consisting of: flow that can only enter the basic block through the first instruction, no jump in the middle, and control will leave the block without halting or branching execpt possibly as the last instruction. The basic block becomes a node in a flow graph.

-

Live variable, and next-use: A variable that lives after one basic block, the next-use tell us when it’s going to be used

-

Optimizing the code: Optimization is based on multiple things including: cost of instruction, eliminate local common subexpressions, eliminate dead code, reorder statements that do not depend on one another, use algebraic laws to reorder operands of three-address instructions and sometimes simplify the computation

-

DAG for basic block: The basic block itself can be represented by a DAG, having as parents the operators and as leaves the operands. This is used for simplifications and to represent array references too.

-

Managing register and address descriptors: registers are limited and so we need an algorithm, using a

getReg()method to choose what to do with the registers. We need two structures, one to know what is currently in the registers, a register descriptor, and one to know where, in which addresses, the variables are currently found, an address descriptor. -

A register spill: When there’s no place in the current register to store the operand of an instruction and that register value needs to be stored on its own memory location.

-

Peephole optimization: Improving a known target code, a peephole, by replacing instruction sequences within it by a shorter or faster sequence. It usually consists of many passes. Examples: redundant-instruction elimination, flow-of-control optimizations, algebraic simplifications, use of machine idioms.

-

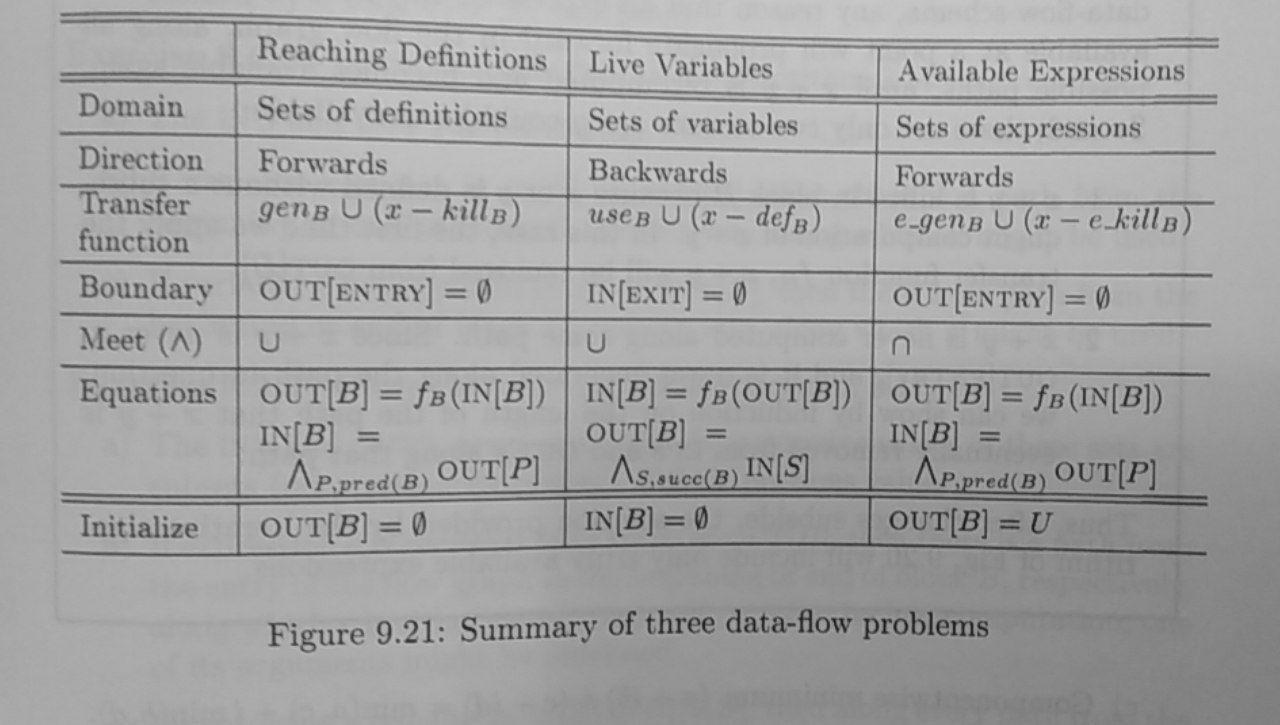

Data flow graph analysis: A way of drawing the flow of a program/blocks to optimize it. When iterative, it usually consists of parameters in a semi-lattice with a domain, direction (forward, backward), a transfer function which has results in the domain, a boundary (top and bottom), a meet operator ∧ (that follows ≤ properties), equations, and initialization. Such graph can be: reaching definitions, live variables, available expressions, constant propagation, partial redundancy, etc..

- Monotonicity: A function f on a partial order is monotonic if: if

x ≤ y then f(x) ≤ f(y)

-

MOP (meet-over-all-paths solution): Then the “best” possible solution to a dataflow problem for node n is given by computing the dataflow information for all possible paths from entry to n, and then combining them ø. in general there will be an infinite number of possible paths to n.

-

Very busy expressions: An expression

eis very busy at pointpif On every path fromp, expressioneis evaluated before the value ofeis changed -

Natural loop: Conditions: It must have a single-entry node, called the header. This entry node dominates all nodes in the loop. There must be a back edge that enters the loop header. Otherwise, it is not possible for the flow of control to return to the header directl from the “loop”.

-

Region based analysis: Instead of iterative, we start from a small scope, apply the transfer function, and wide the scope.

-

Hardware vs software ILP: Machine that let the software manage parallelism are called VLIW machines (Very Long instruction word), and those that use the hardware are called superscalar machines. See computer architecture article.

-

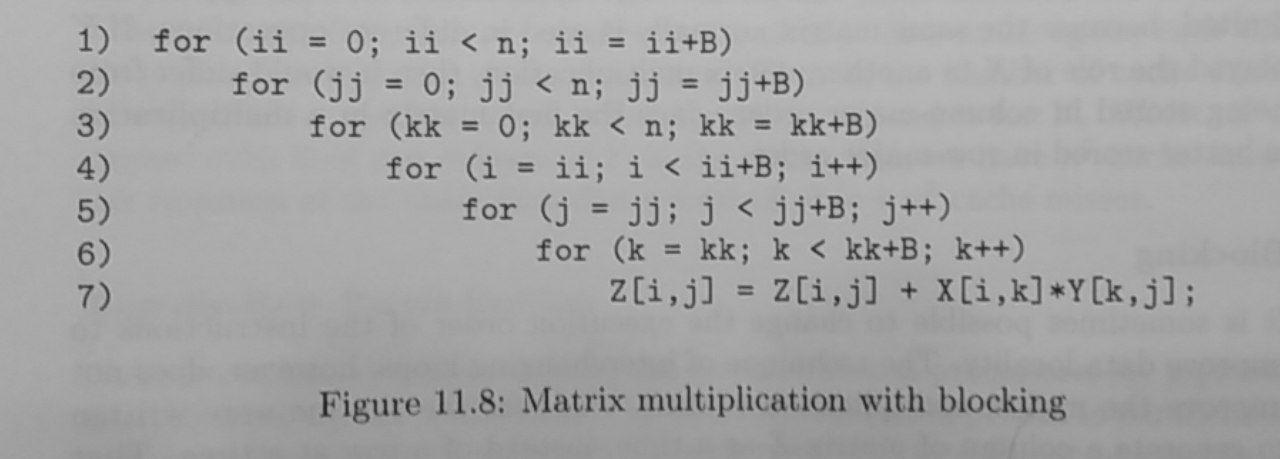

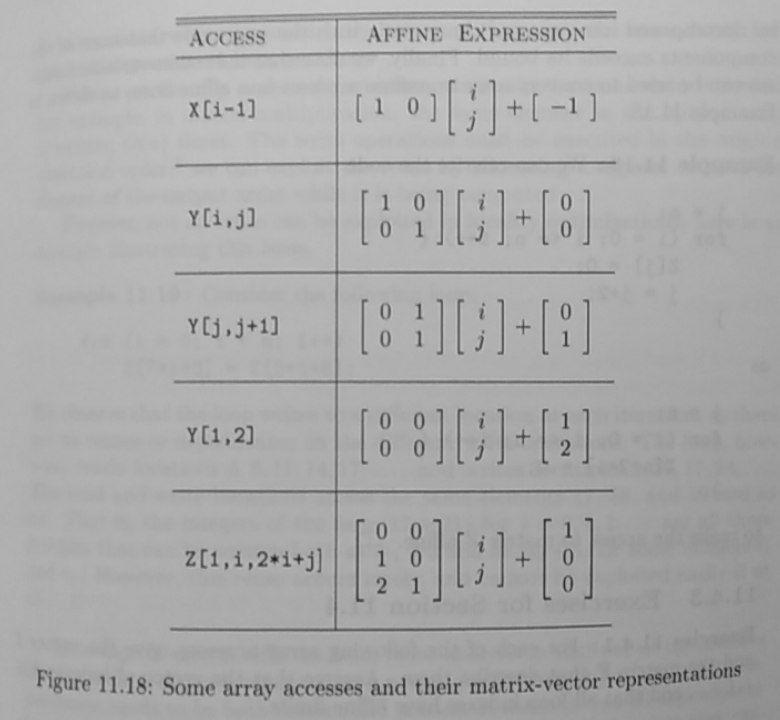

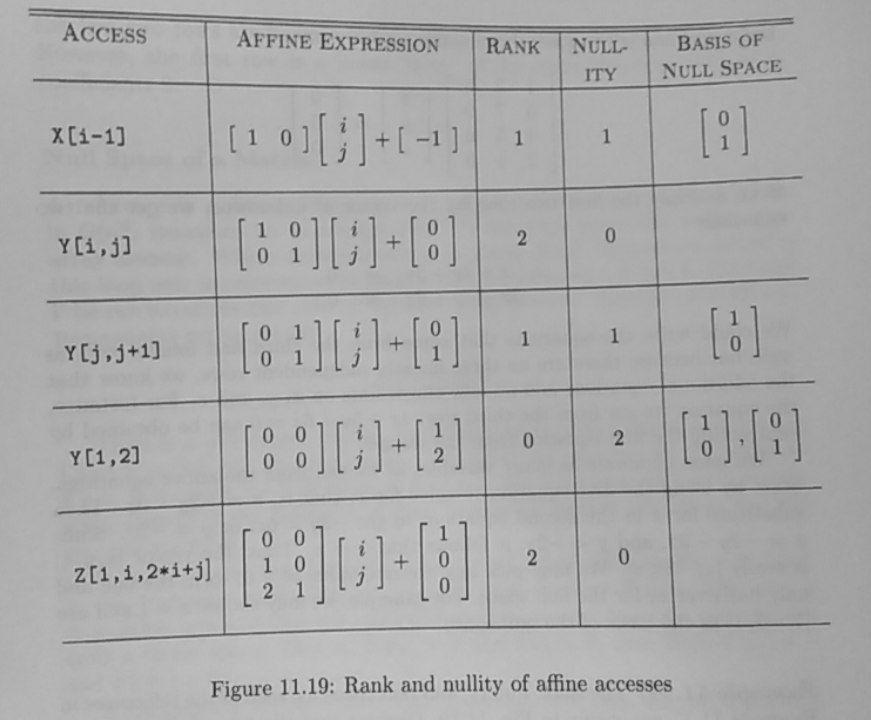

Array afine optimization: When you can express the indices of the array by an affine function, you can start applying types of optimization such as time based and space based optimization.

Further Reading

- Compilers Principles, Techniques, and Tools by Alfred V. Aho, Monica S. Lam, Ravi Sethi, Jeffrey D. Ullman

- Crafting Interpreters

- https://nicoleorchard.com/blog/compilers

- https://increment.com/programming-languages/crash-course-in-compilers/

- https://compilers.iecc.com/crenshaw/

- https://github.com/wildart/CSCI364

- http://dmitrysoshnikov.com/courses/parsing-algorithms/

Attributions:

Internet Archive Book Images / No restrictions

If you want to have a more in depth discussion I'm always available by email or irc.

We can discuss and argue about what you like and dislike, about new ideas to consider, opinions, etc..

If you don't feel like "having a discussion" or are intimidated by emails

then you can simply say something small in the comment sections below

and/or share it with your friends.