Table Of Content

Table Of Content

- Introduction

- Access control In Operating Systems

- Models

- Proving Who We Are

- System-Wide Access Control

- Putting in Boxes: Isolation and Constraints as Access Control

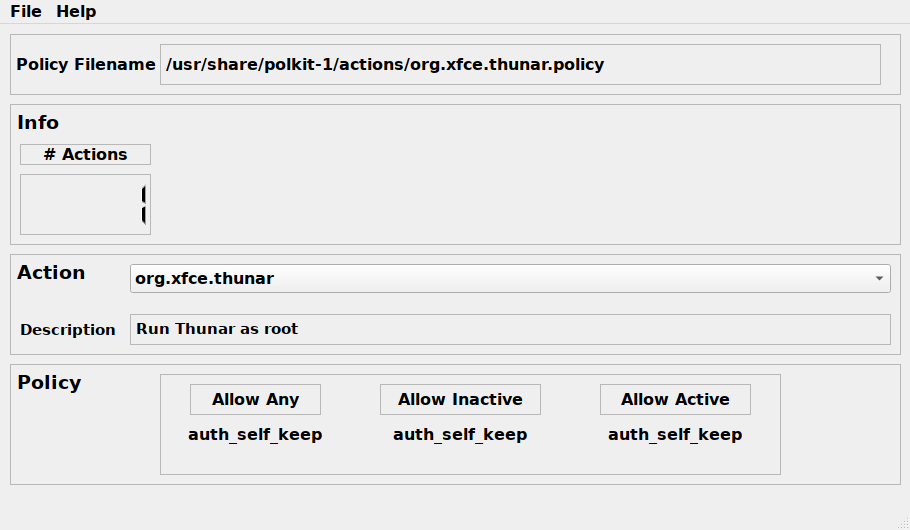

- Action-Based Access Control

- After the facts: Logging & Auditing

- General Security & Trusted Computing Base

- Conclusion

Introduction

Plenty of cheesy quotes often say that total security stands on the

opposite of total freedom.

Undeniably, in computers and operating systems this is a fact. However,

universal privilege used to be the norm, and restricting actions was

a concept that wasn’t part of the vocabulary. Today, this idea is a

must. Our machines are constantly interacting with the external world,

exchanging information, and deliberately fetching and executing pieces

of code and software from servers hosted in places we might never

visit. Meanwhile, we trust and intertwine our lives with these machines.

A system that is trustworthy is not the same as a system we must trust. This distinction is important because systems that need to be trusted are not necessarily trustworthy.

This article will focus on the topic of access control on Unix-like systems. Sit back and relax as it transports you on a journey of discovery. We’ll unfold the map, travel to different places, allowing to better understand this wide, often misunderstood, and messy territory. The goal of this article is to first and foremost describe what is present, allowing to move forward, especially with the countless possibilities already present. How can we better shape the future if we don’t know the past.

To facilitate the reading and skimming, every section ends with a quick summary labeled “What you need to remember”.

There are 8 parts to this article. In the first few ones we will go

over theories such as what security and access control mean, along

with different models of how to represent them. Then we have a section

on the subject, proving someone is who they say they are. Afterward

we move to practical access control with 3 sections: system-wide,

isolation/constraint, and action-based, which will be used to

categorize the mechanisms employed. Lastly, we’ll finish with

sections on auditing and logging, to see what happens after the facts,

and finally give generic OS and hardware security tips, because otherwise

all that was mentioned before would be useless.

You can think of the progression as a chronological one, from a user

proving who they are by authenticating, to interacting with the system,

and then leaving traces on it.

We’ll start our introduction by pondering on what security means, and afterward continue to the main topic.

In everyday talk, security and computer security are ill-defined abstract concepts. Even experts don’t all agree on what they mean. Additionally, as with anything scientific, what you can’t measure, quantify, and experiment doesn’t exist. Therefore, multiple standards, accreditations, definitions, jargon, guides, evaluation schemes, principles, best practices, and models have been created, not all coherent with one another.

For example, as a teaser, the following series of words could refer to different concepts depending on the context, which can lead to confusion.

- Protection

- Permissions

- Privileges

- Policies

- Capabilities

- Policies

- Trust

- Ownership

- Access

- Authentication

- Authorization

- Limits

- etc..

The standards and accreditations have separate ways to evaluate the levels of security of a system, each focusing on different aspects. Some popular ones include: NIST FIPS 140-2 security requirements for cryptographic modules, multiple ISO certifications such as ISO 27k for information security, the famous PCI DSS that is targeted at the payment card industry, the Common Criteria framework (aka ISO/IEC 15408), etc..

As far as access control standards go, the Common Criteria framework,

which replaced the older Orange Book (aka TSEC, Trusted Computer

System Evaluation Criteria), is the international de facto. The testing

laboratories, which evaluate the claims companies make about their

products, are scattered around the world and they mutually agree through

a treaty to recognize each others’ security assessment results. Common

Criteria is mandatory for software used within some government systems

and types of industries.

Let’s consider it a good base and extract the generic definition of a

secure system from the Orange Book (TSEC):

A secure system will control, through the use of specific security features, access to information such that only properly authorized individuals, or processes operating on their behalf, will have access to read, write, create, or delete information.

The evaluation schemes grade the level of security of systems based on how

they apply policies, which are rules and practices on the system. These

policies are used as the definition of security. For instance, the

classic principle of least privilege or the CIA triad (Confidentiality,

Integrity, Availability).

The principle of least privilege dictates that subjects should be given

just enough privileges to perform their tasks, it ensures failure will

do the least amount of harm. Privilege, sometimes also called permission

or rights, is loosely defined as the abstract ability to perform a task,

whichever form it takes; a key concept in access control. This is also

linked to the idea of compartmentalization, separating entities from

one another.

In CIA: Confidentiality, is a property of objects/information

only getting to where it’s supposed to, conserving privacy. Integrity

is the property of the object not being tampered by unauthorized

parties and how the data should reflect and maintain its consistency. Finally,

Availability is another property of objects that makes it accessible

and usable upon demand.

How these vague terms apply and are interpreted depends on the developed

policy description, one that would allow it to be measured and controlled.

The policies are then implemented, proved, and formalized using a security

model, The model could then be mandated, or not, within the scope of

the evaluation. A security model is used to determine and visualize

how security is applied, the relations between subjects and objects

access. This is also called access control theory by some.

We’ll take more time to dive into models later, but for now, an example

would be a state machine of who controls which resources along with

transitions, or markings/labels on objects, or a matrix of users and

resources on the system. More on this later..

Apart from the policy and models, TSEC and Common Criteria have additional requirements that should be included in a secure system:

- Accountability, how individual subjects (users and processes) are identified, along with related Auditing of their actions on the system.

- Assurance, in the form of hardware/software mechanism to enforce other requirements, along with continuous protection of the life-cycle of the system.

The systems are then graded in one of four divisions that represent the

strength of the certification. These can even go up to formally verified

systems; in the Orange Book they are: D, C, B, A, with A being the highest

security level, while in Common Criteria EAL1 is the lowest and EAL7 is

the highest.

When it comes to Unix-like systems, multiple versions of RHEL meet the

Common Criteria, some certified under BSI with Protection Profile at EAL4+

others by the NIAP (National Information Assurance Partnership).

As you can see, calling a system “secure” isn’t straight forward, nor is

measuring it. Certifications such as Common Criteria don’t really measure

the security of the system but simply state at what level the system was

tested and against which requirements.

Security is a moving target on an axis that is constantly

extending. Furthermore, even certifications like the ones mentioned

could be criticized to be security theaters, focusing essentially on

documentation and evidence rather than day to day operation.

Let’s move on from the abstract concept of security to the particular topic of operating system access control.

What you need to remember: Defining “security” is not straight forward, there are a lot of standards and specs, each with their own criteria and levels. However, one thing is in common: it’s about applying a policy of who has access to what and respecting it.

Access Control In Operating Systems

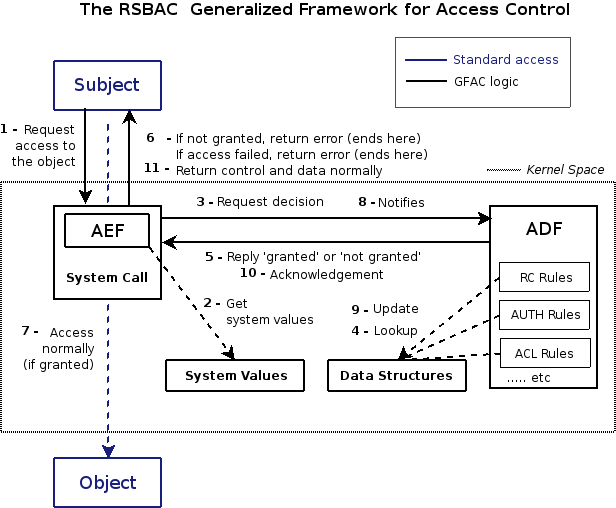

In this section we’ll get a better idea of what access control in operating systems means. The best way to understand it, in my opinion, is to think of the following three ideas: the goal, the entities in the equation, and the domain where they’re at play.

The part of security related to access control, as we’ve seen, emphasizes

on making sure the right subject is able to perform the actions they

need on the right object; indirectly it also means blocking access to

those who shouldn’t.

This is the Goal: to prevent malicious misuses of the system by applying

the right policy.

Access control is defined by the National Institute of Standards and Technology (NIST) as the set of procedures and/or processes that only allow access to information in accordance with pre-established policies and rules (among multiple other similar definitions).

There exist multiple entities that join together to achieve this goal. There are what we call subjects, or sometimes called “principal”, something doing an action, which is usually a user, group, role, or a process/program. On the other side there are objects, usually passive entities on which the action is applied, these can be system resources, files, devices, programs, and even other users or the set of actions themselves.

These two interact through a mechanism or facility, the entity that allows the action and gives it form. This mechanism is surrounded or includes ways to enforce rules, the guiding policies. Often these are described using models to explain the flow and prove that the mechanism functions properly.

Mechanisms determine how something is done; policies dictate what is done. Flexibility requires the separation of policy and method.

The mechanism and its inner workings should follow what’s called the

principles of protection, it’s the mindset used to perceive the overall

system access control and good practices, basically how it applies the

policy. It can contain the concept of privilege or permission: being able

to do some task or not. The privileges a subject has, or is able to get,

are its access rights.

Additionally, the mechanism could also include rules on the modes

of operations which would be defined according to the types of

objects/resources on which the action is done.

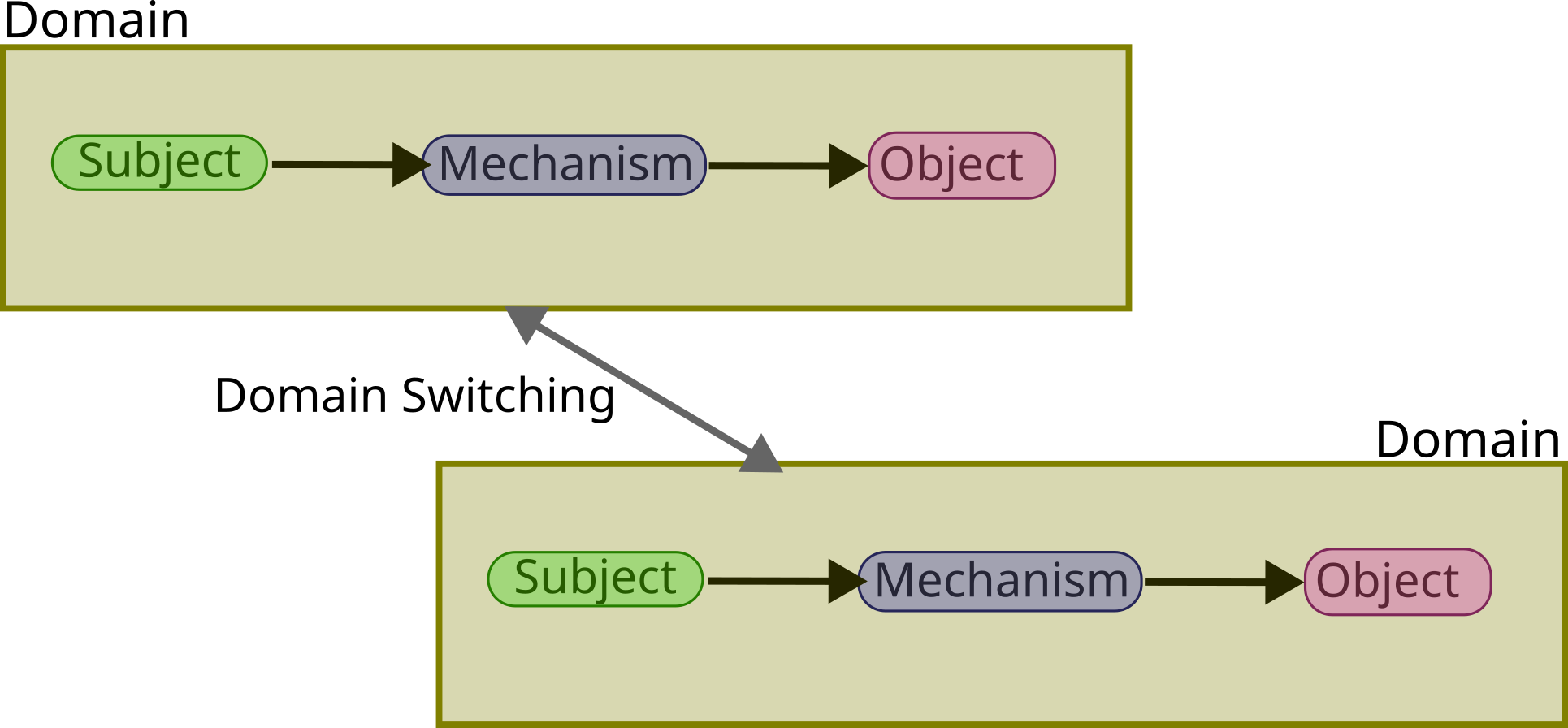

All of these: subjects, objects, and mechanisms, are part of a domain

of protection; The “Who”, “What”, “Where”, “When”, etc.. Policies are

applied within each domain, which could possibly be different. In that

case, we could have higher-level mechanisms that would allow switching

between domains.

Sometimes, in literature, subjects are called domains. They could live

and pick their own rules, under their control, in a dynamic way. Switching

user could be said to be switching domain, think of setuid/setgid, which

we’ll come back to later. This is in contrast with a more static

application of policies such as the standard Unix permission model, or

the more rigid Mandatory Access Control, which we’ll also dive deeply

into later.

These three ideas are abstract: the goal, the entities, mechanisms along with how to imagine them in a domain, yet they make it much simpler to understand all the access control content that will follow in this article so keep them in mind.

What you need to remember: Access control in OS has the goal of preventing malicious usage through a policy, the actors are the subject, objects, and they interface together through a mechanism/facility. These all exist within a domain, changing domain would mean changing some of these params.

Models

In this section we’ll discuss, with big brush strokes, a couple of

security models.

Models are used to make sense of the policies and their implementations.

They describe how the mechanism/facility protects the system by

juggling the interaction between subjects, objects, and other possible

entities. It’s a more formal description of what’s going on in the system.

However, keep in mind that the map is not the territory, none of these

representations are perfect, nor address all security issues.

For now, don’t worry about the actual programming aspect, we’ll come

back to it in the next sections. You can still imagine how each model

would take concrete form, it’s a painful read but a rewarding one. Often

multiple models can be used to describe the same system. Sometimes the

implementation exists previous to the model, and sometimes it’s the

opposite, the model impacts how the mechanism of protection is conceived.

We’ll walk through a couple of examples, covering as much ground as

possible.

Let’s start with one of the most intuitive model, the access control

matrix model.

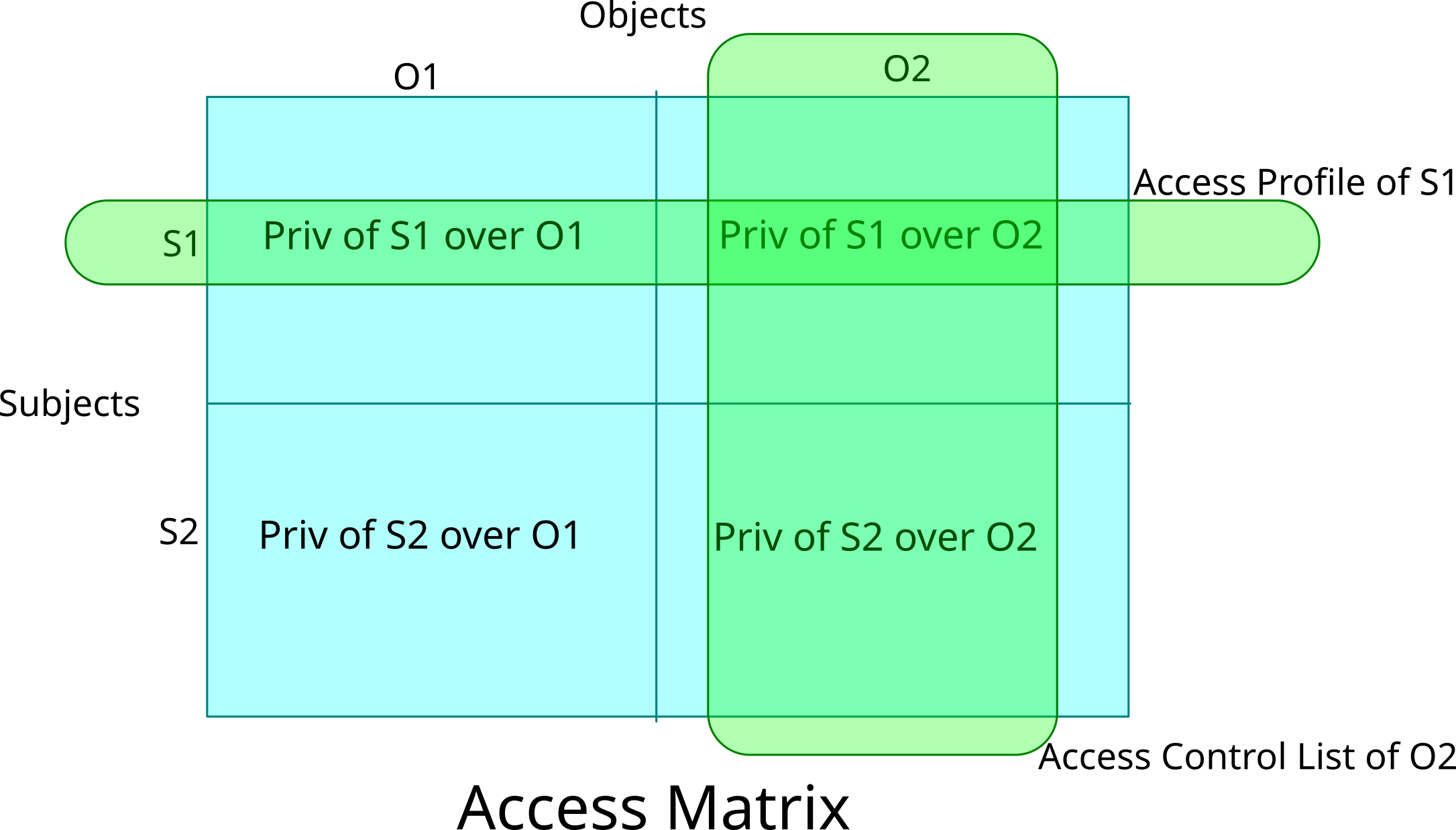

As the name implies, it’s a matrix, column and rows. The columns

represent the objects, the rows the subjects, and the entries in the

matrix indicate the privilege/permission/rights that the subject can

exercise on the object. So far that’s simple enough.

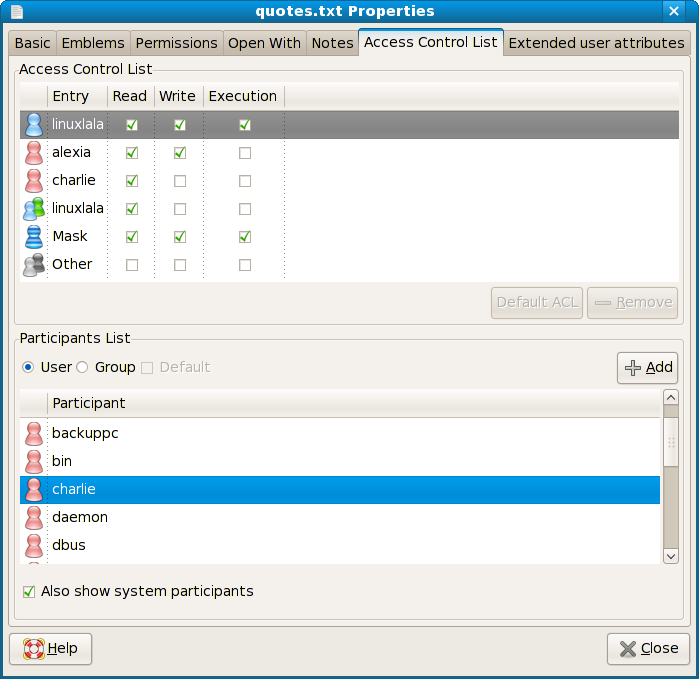

The model gives two views, if you look at it column-wise we can see what’s called an access control list for the object: who can do what on the object. If we look at it row-wise we can see what’s called an access profile for the subject (or capability list): what a subject has the ability to do on which object.

Concretely, it could get translated in a programming implementation that

stores the access matrix as a bitmask, or any other kind of structure,

on every file in the system and it would contain the possible conceptions

of subjects (user, group, roles, etc..) along with their privileges,

the access control list (ex: standard POSIX permission, or POSIX.1e ACL,

if you think about them in this novel way it can be mind-bending).

Or it could be a system-wide storage matrix, or one existing in the

subjects themselves, that would contain all objects that the subjects

currently have access to, the access profile (ex: capability-based

security). We’ll come back to this topic.

Extending on those, subjects in the matrix could be allowed to dynamically switch role with another subject, sometimes called domain switching. There’s also the possibility to have a rights-transferring mechanism, copying them from one subject to another, which can include restriction on the propagation of the rights (how far and for how long they can propagate). In these cases, the matrix becomes dynamic.

In general an access matrix is considered an incomplete description of security policy as the model doesn’t really enforce rules but simply describes the current state.

A model that is more formal and expands on the access matrix is the Graham

Denning (G-D) model. Similar to the typical access matrix, rows are

subjects and columns are objects, and the entries/elements are the rights

of the subject on the object.

However, the model adds a set of 8 protection “rules” to the mix, which

in this model actually means “actions”, so that the description of the

policy becomes more complete. Along, it also defines normal subjects as

“users” and a new special subject role called “controller”, which is a user

that has ownership over other users.

Rules, which are about how to perform actions, are associated with preconditions to make sure the rights are respected. When a rule is executed, for example creating an object, the matrix is changed accordingly. The rules are as follows:

- Create an object.

- Create a subject.

- Delete an object.

- Delete a subject.

- Provide the read access right.

- Provide the grant access right.

- Provide the delete access right.

- Provide the transfer access right.

After Graham Denning model, another model went further on these ideas,

the Harrison-Ruzzo-Ullman (HRU) model. It dissected the rules into

more primitive operations (called commands, of which there are 6) and

conditions, making it more like the ACID of database transactions.

It is described formally using mathematical procedures.

- Subjects:

S - Objects:

O(Scan also be considered anO) - Rights:

R - Commands:

C - Access Matrix:

P

The current system then exists in a “configuration” defined by a tuple

(S, O, P) and can change through commands with preconditions.

These two models, G-D and HRU, are better than a plain access-matrix at visualizing whether or not the system stays in a secure state and can later on help thinking of the algorithms to use when doing a software implementation.

Another category of models are state machine models, based on finite-state machines. These models emphasize the transition between states based on action and secrecy. It’s a more dynamic view where permission/privilege is always on the move depending on the state of the system. In these models we think in terms of levels/layers of access, gained or revoked rights, and classification of subjects and objects (aka non-discretionary or mandatory control, explained later). When every state transition, from booting to power off, is proven to be secure then, by induction, the whole system is secure. That’s the notion of “secure state”.

There are many ways to visualize a finite-state machine, usually it’s better that it’s shown as deterministic (DFA). This can be done in a table showing all transitions, or as a graphical representation. You could go back to your CS course on automata for a refresher.

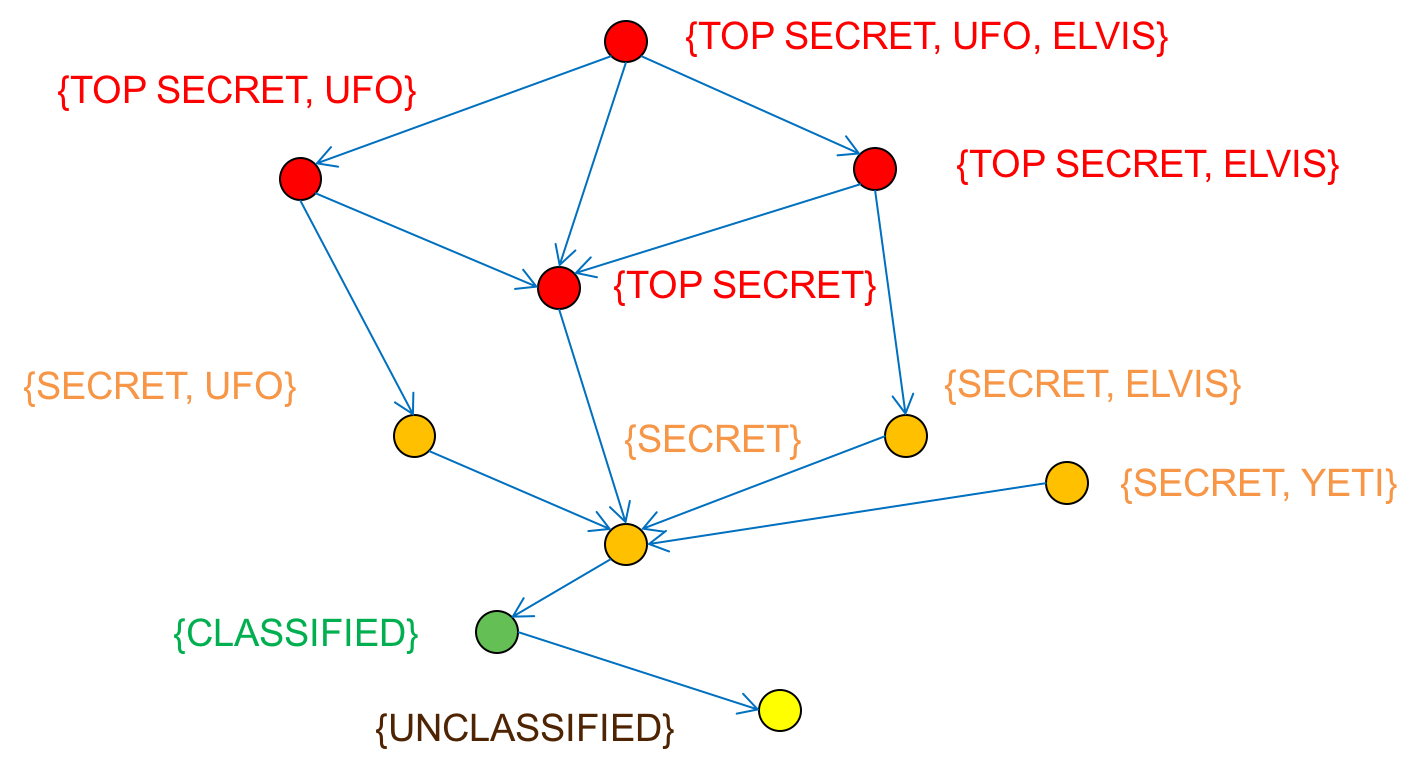

Yet, as with the access matrix, a state machine on itself isn’t a very complete description of a security policy. A more formal category that extends from the state machine model are information flow models, also called latticed-based information flow models. This includes, among others, the Biba model and the Bell-LaPadula model.

The information flow models consist of objects, state transitions,

and flow policy states. Basically, it’s a state machine that makes sure

the information can’t flow in the wrong direction, avoiding unauthorized

access. This is done by governing how subjects can get access to objects

by verifying if the security level criteria matches.

Additionally, these flow models can live along other models and other

systems, interoperating with them through the use of a guard in between,

which is yet another fancy name for any sort of mechanism/facility that

decides if something is allowed or not.

Source: Access Control — Thinking about Security, Paul Krzyzanowski from January 31, 2022

Source: Access Control — Thinking about Security, Paul Krzyzanowski from January 31, 2022

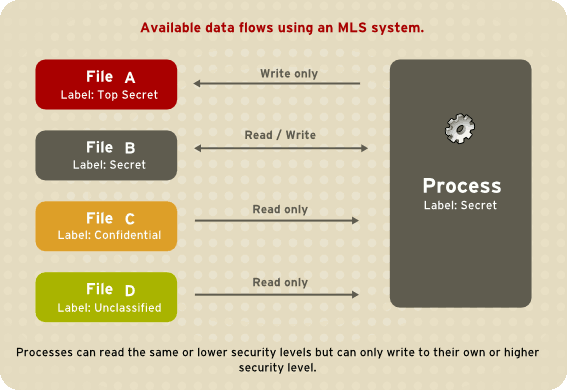

One of these models is the Bell-LaPadula model. It’s a model that focuses

on information confidentiality. Information is perceived as an object

that is tagged/labeled in categories. Users are also tagged/labeled in

categories, and both of them are used to classify how the information

can be accessed based on its sensitivity.

Example of classifications:

- Sensitive but unclassified

- Confidential

- Secret

- Top secret

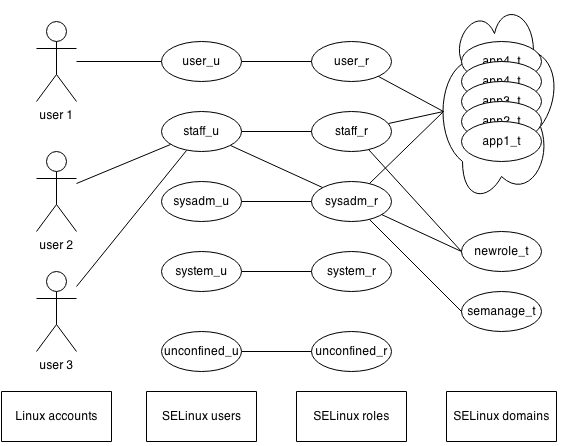

The flow of information always validate the security clearance so that the subject level is allowed to access the object/information level. This is why the Bell-LaPadula model is often mentioned as a formalization of Multi-Level Security (MLS).

The Bell-LaPadula model is particular in that its authors, David Bell and Leonard LaPadula were the ones that started formalizing the idea of secure system state and models. Their work directly influenced the TCSEC/Orange book which we mentioned earlier, and it was initially made to evaluate criteria of MLS systems.

The term MLS can refer to two things, either the security environment/mode, the original definition, or as a capability. The environment is one in which the community has multiple levels of security and needs clearance to access information. The capability, on the other hand, is about the system itself supporting mechanism to implement and enforce an MLS model.

Back to the Bell-LaPadula model, the information flow rules property that we can apply to subjects and objects with labels are the three following:

- The Simple Security Property (

ssproperty): A subject at one level of confidentiality can’t read information at a higher level of confidentiality. Aka “no read up”. - The Star Security Property (

*property): A subject at one level of confidentiality is not allowed to write information to a lower level of confidentiality (thus the level of confidentiality never goes down). Aka “no write down”. - The Strong Star Security Property (Strong

*property): A subject at one level of confidentiality is not allowed to neither read or write information of higher or lower confidentiality.

Furthermore, Bell-LaPadula model enforces that subjects and objects can’t change their levels of classification while they are being referred to. This is called the tranquility principle, which can be weak or strong.

The model has its limit, it didn’t make a difference between the idea of general security and protection of data integrity, it emphasizes confidentiality and controlled access in MLS environment only.

Another model that also relies on the information flow paradigm is the Biba model. It emphasizes the policy of integrity so that data remains internally and externally consistent, there’s no unauthorized changes, and the information/process given the same input produces the expected output.

In the Biba model (also called low water-mark policy), similar to

Bell-LaPadula, data and subjects are categorized, this time into

levels of integrity. The design is such that if something is at a higher

level it cannot get corrupted by a lower level subject.

The properties that could be applied for the flow of information to

ensure the data integrity are as follows:

- The Simple Integrity Property: A subject at one level of integrity can’t read information at a lower level of integrity. Aka “no read down”.

- The Star Integrity Property (

*property): A subject at one level of integrity can’t write information to a higher level of integrity. Aka “no write up” (What is higher keeps its integrity at that high level). - The Invocation Property (Strong

*property): a subject at one level of integrity can’t invoke/request a subject at a higher level of integrity. Aka a subject can’t be promoted to a higher level, only to equal or demoted to lower level.

As you can notice, the first two properties are the reverse of the Bell-LaPadula model. For integrity we had: “no read down, no write up” and for confidentiality: “no read up, no write down”.

Someone came up with a good metaphor for Biba:

After a long journey on your search for Shangri-La and true security awareness, you arrive at a Tibetan monastery. You discover the monks are huge fans of the Biba model and as such, have defined certain rules that you, the commoner, must abide by.

- A Tibetan monk may write a prayer book that can be read by commoners, but not one to be read by a high priest.

- A Tibetan monk may read a book written by the high priest, but may not read down to a pamphlet written by a commoner.

The Clark-Wilson model is another model also focused on addressing the

goal of integrity. However, it’s using a more holistic abstract approach

than the information flow. It tries to formalize what information

integrity is, how the data items in the system should be kept valid.

The model uses security labels to grant access to objects via

transformation procedures and a restricted interface model. It adds to

Biba an enforcement of separation of duties: subjects must access data

through applications, and auditing of their actions is required. More

elements are defined as part of the model: users, applications, duties,

etc..

Clark-Wilson achieves this through access control triplet. The access

control triplet is composed of the user, transformational procedure,

and the constrained data item.

Authorized users/subjects cannot change data in an inappropriate

way (we’ll dive later into what authorization is in the next

section, for now keep in mind it means making

sure we know who we claimed we are). Subjects are restricted to their

own domain, a subject at one level of access can read one set of data,

whereas a subject at another level of access has access to a different

set of data.

Modification in that model only happens through a small set of

programs. These programs perform well-formed transactions, which are

the transitions keeping the system consistent.

Multiple roles in the models are assigned to achieve this. To keep

the internal consistency there is a concept of Integrity Verification

Procedure (IVPs). The data is change through a Transformation Procedure

(TP, sort of like relational db ACID that we mentioned in HRU), on which

the data integrity is checked for Constrained Data Items (CID), and there

could be items/objects outside the model seen as Unconstrained Data Items

(UDI).

This model is interesting as a lot of implementations employ this mindset

of only allowing change by passing through a set of specific applications

(see action-base access control section).

There are a lot of other models to discover such as the Take-Grant model,

the Brewer Nash model (Chinese Wall model), and the NIST RBAC model,

but we’ll keep it at that for now.

As you can see there exists a lot of different ways to visualizing access

control. Let’s move on to one of the pieces of the equation: subjects.

What you need to remember: There exists a lot of models which are used to describe how the security policy (a definition of security) is implemented and prove it’s working as expected. Two main categories exist: the matrix and the information flow. The information flow focus on levels of access while the matrix focuses on rights. Understanding models can help visualize the implementation.

Proving Who We Are

We’ve mentioned subjects before, however we haven’t dived into who they

are and how to make sure of who they say they are. This is what we’ll

tackle in this section.

To know who a subject is and let them pass the gates leading to a system,

we need to discuss identification, authentication, and authorization.

Identification is the process of being able to indicate the identity of a person or a thing: what makes it unique. This is a generic term, that is more human than computer-related.

Authentication (authN) is the act of proving to a certain degree of confidence

an assertion, verifying that something is what it claims to be: the

identity of a subject or any other assertion.

There exists plenty of ways to achieve this, we talk of authentication

factors. They are the following: the knowledge factors (ex: password, pin)

the ownership factors (ex: security token, ID card), the inherence factors

(ex: fingerprint, voice, DNA, retinal pattern), and the geotemporal factors

(ex: place and time). In common parlance it’s the “something you know,

something you have, something you are, and when/where you are” (the

last one often omitted from text books).

The more of these you can mix, the more multi-factor the authentication,

the more it is considered strong; A single-factor authentication is

weak. Additionally, the authentication process can either happen once

or continuously, asking again from time-to-time.

While authentication is the process of verifying that “you are who

you say you are”, authorization (authZ) is the process of verifying that “you

are permitted to do what you are trying to do”. Often, authorization

happens immediately after authentication (ex: upon login), but this does

not mean authorization presupposes authentication: an anonymous subject

could be authorized to some limited privileges.

To sum it up, we got the list of subjects (identity), asserting that they

are who they claim to be (authentication), and finally checking if they

have access, granting them privileges (authorization). Access control

is about having a system only used by those authorized and detect and

exclude unauthorized usage, as we said before.

On Unix-like systems there’s a couple of ways to achieve the above, from

passwd file, login.conf and login.defs, to BSD Auth and PAM, passing

by su/sudo/doas along with special identity management solutions.

What you need to remember: Identification is a generic word relating to proving the identity of something, its uniqueness. Authentication is the assertion of a claim, usually an identity claim. Authorization is about checking if the subject has enough privileges

The Password And Group Files

We can start with the classic username-/group-password combination

provided by POSIX. This is the classic way to describe the set of user

accounts and groups on a system through the /etc/passwd and /etc/group

files. The passwd file contains a list of users with info and the group

file similarly contains the groups with their info. These are the basic

subjects that can interact in a system: users and groups. A user can

act either as itself or as the group it is part of.

Most Unix-like systems have them (/etc/passwd and /etc/group)

and they have a typical layout representing a standard structure from

POSIX that should be returned when using the functions to read them:

getgrent for struct group and getpwent for struct passwd.

The files are textual and contain rows (records) that have fields

separated by colon :. The /etc/passwd file has the following fields:

- Username aka login. The login name is usually a small string that starts with a letter and consists of letters, numbers, dashes and underscores. In general it’s a bad idea to have a dash (‘-‘) at the beginning, and it’s better to avoid uppercase characters and dots within the username so that it doesn’t mess with the behavior of certain programs and the shell.

- Encrypted password (if present)

- User ID (UID, a number in decimal)

- Principal group ID (primary GID, also a number)

- GECOS field (General Electric Comprehensive Operating System field). A

deprecated field that is used these days for comment and random user

information. It can be used by utilities such as

finger(1). - Home directory

- Login shell to use

And the /etc/group file has the following fields:

- Group name. Should follow a similar convention as the user name.

- Encrypted password (if present)

- Group ID (GID, a number in decimal)

- A comma-separated list of user names (users who are in this group)

The username, UID, group name, and GID are theoretically unique values and can be used as reference to the identity of the subjects. However, on some systems it is still possible to have multiple entries with the same values, but it is considered a logical mistake to do so and can lead to several security issues.

When a user executes processes, they inherit the same UID as the one of the user. That is true unless privilege is dropped (as we’ll see in the super-user section), or if some special mechanism allows changing it (as we’ll see in the setuid section).

By convention, certain UIDs have special meaning. For example, the Linux

Standard Base Core Specification says that the values between 0 and

99 should be allocated by the system and not created by applications,

while UIDs ranging from 100 to 499 should be reserved for dynamic

allocation. Other systems and daemons have different conventions,

Debian uses the 100 to 999 as dynamically allocated system users and

group range. As for FreeBSD, the range that should be used by package

porters is 50 to 999, and macOS start allocating new UID starting

from 500. There’s really nothing standardized across systems.

Apart from this, specific UIDs could mean particular things, such as

negative ones which are often used to specify unallowed or blackhole

users, such as -1 that is unallowed, and -2 often used for the

nobody user.

While these files and their formats are simple in themselves, they are

also world-readable and this causes a lot of vulnerabilities. In the

past it was hard to crack passwords, but these days having access to a

hash will eventually lead to it being cracked. That is why today, most

Unix-like systems don’t store the passwords as-is in the files but have

them in a separate place that can only be read with elevated privileges

(super-user/root), this additional file also has new configurations that

are useful for password policies and features.

What these files are is system-dependent and isn’t mandated by POSIX.

The password in the files /etc/passwd and /etc/group have been

replaced with any character that isn’t a valid hash, such as x, !,

and *. It is then interpreted, or not, as having special meaning. If

the character isn’t valid and can’t be interpreted then the user is

locked.

Note that an empty password field means that the user or group can be

used without entering a password (if no other mechanism on top is in

place to disallow empty passwords, such as PAM as we’ll see).

Practically, Solaris uses the /etc/shadow file to store the passwords

of users along with configurations related to password.

The same is true for Linux, which copied Solaris, it uses /etc/shadow

for securely storing user passwords, and /etc/gshadow for group

passwords. It also contains information such as the age of the password,

the last login, inactivity, expiration, etc.. These can be manipulated

with the configuration file in /etc/login.defs, the shadow password

suite configuration, or through command line utilities such as chage

to change the password expiry information for example.

There exists a couple of scripts to convert to-and-from shadow

passwords and groups such as pwconv, pwunconv, grpconv, and

grpunconv. However, these days, everything is directly stored

in secure shadow files, so there’s no need to convert back and forth.

The login.defs configuration file of the shadow password suite is

important as it contains control knobs for the behavior of most of the

utilities related to passwords and accounting. It is a text file with

key/value entries.

For example, ENCRYPT_METHOD specifies which algorithm to use to encrypt

the password. FAIL_DELAY create a delay before allowing another

attempt between login failure, LOGIN_RETRIES is the maximum number

of bad password retries, PASS_MAX_DAYS is the maximum age of password

before being forced to be changed, etc..

On BSD systems, we have something similar to the separation done on

Solaris and Linux that is achieved through the files /etc/master.passwd,

/etc/pwd.db and /etc/spwd.db. The policy and behavior is

controlled through the configurations in /etc/login.conf.

The master.passwd file is where all the passwords and user related

information is stored and it is then used to generate two files using

pwd_mkdb(8): one in a secure and the other an insecure database

format, /etc/spwd.db and /etc/pwd.db. The insecure database file in

/etc/passwd is generated at the same time, removing fields such as

the encrypted password, replacing them with asterisk *.

master.passwd is readable only using elevated privileged and it is a

text files containing colon-separated records with the following fields,

which are in extension of /etc/passwd:

- name: User’s login name.

- password: User’s encrypted password.

- uid: User’s login user ID.

- gid: User’s login group ID.

- class: User’s general classification (we’ll dive into it later).

- change: Password change time.

- expire: Account expiration time.

- gecos: General information about the user.

- home dir: User’s home directory.

- shell: User’s login shell.

Also, like Linux, the behavior of the tools used to manipulate user

accounts and their password policies are controlled in a config file,

this time it’s /etc/login.conf (system-wide) and ~/.login_conf

(local), the login class capability database.

This file contains more than this, as we’ll see in a bit with BSD Auth. As

far as password control goes, it can also set the password cipher to use

localcipher, the passwordtime used for expiry date, idletime

as maximum idle time before automatic logout, and much more. It even

contains a way to only allow specific hosts to login using specific

users, which pertains to our previous discussion of the “when/where” of

authentication (similar to postgresql pg_hba.conf for those familiar).

In general, there are many more password related configs in BSD

login.conf than there are in login.defs. Yet, that doesn’t make much

a difference, because login.conf is used for BSD Auth and not only as a

shadow password file, its real counterpart is PAM and not login.defs

as we’ll see in the next section. Keep

in mind that these files are not the only way to list users and groups

in a system.

In general the passwd and other specific files used to defer encryption

should never be edited directly but should only be accessed through

command line utilities. For /etc/passwd and /etc/group there are

vipw and vigr respectively. To verify their integrity after editing

them there are the pwck and grpck commands. These script do the

appropriate locking, processing, and consistency checks on the entries

so that they aren’t mangled or corrupted.

Other utilities are used to change the passwords, create, and edit users

or groups, such as chpasswd(8), passwd(1), useradd(8), usermod(8),

userdel(8), and gpasswd(1). On OpenBSD we also find another way to

change the user database information through chpass(1) with its many

other aliases and functional equivalents such as login_lchpass(8).

NB: passwd sometimes offer the --lock option to add a ‘!’ at

the start of the password of the user, indirectly locking it.

Let’s move to the more advanced and modern management of authentication, instead of relying on a single authentication scheme we could rely on a range of varied ones that are pluggable to many external methods ranging from LDAP/AD, Oauth2, HSM and hardware keys, kerberos, certificates, and more.

What you need to remember: The password and group files

are classic and simple files to store user and group info along

with password. However, today this isn’t secure to store as

publicly accessible, thus different solutions exists to store the

password encrypted in a separate place that is only accessible by the

super-user. These include the shadow password suite on Solaris and Linux

and the master.passwd on BSD. The new mechanisms also offer a couple

of options to configure password policies.

Dynamic/Pluggable Authentication

BSD Auth

BSD Authentication is a mechanism initially created by the now-defunct

BSD/OS to support dynamic authentication “styles”, it is predominantly

used in OpenBSD. It consists of stand-alone processes that communicate

over a narrowly defined IPC API to dictate how the authentication will

happen. This separation of programs and scripts follows the principle of

least privilege, not getting the same power as the parent process but

only what it needs, it’s a way to do privilege separation.

The modules/scripts are configured through login.conf as methods of

authentication.

We mentioned /etc/login.conf and ~/.login_conf, the login class

capability databases, earlier but didn’t explain its format (getcap). As

the name implies it consists of a list of “classes” along with specific

features and configuration related to them. A class is simply an

annotation to categorize users, independent of their groups. If you go

back to the last section you’ll notice

that the class a user is in is mentioned in the master.passwd file. If

it isn’t in there then the class used will be the one named default

(root account will always use the root entry regardless if present

or not).

In login.conf, you’ll find all sorts of things referred to as

capabilities, such as resources constraints and quotas (we’ll go into

that in the isolation section seeing also ulimit and cgroups),

the password format and expiry, session accounting, user

environment settings, and much much more.

It’s a textual file with the name of the class followed by a colon :

and then a list of capability entries that are also separated by colons.

Example:

default:\

:localcipher=bcrypt:\

:copyright=/etc/COPYRIGHT:\

:welcome=/etc/motd:\

:path=/sbin /bin /usr/sbin /usr/bin /usr/games /usr/local/sbin /usr/local/bin ~/bin:\

:nologin=/var/run/nologin:\

:filesize=unlimited:\

:maxproc=unlimited:\

:umask=022:\

:auth=skey,radius,passwd:

Any edit to the file will require it to be rebuilt into the system using

cap_mkdb.

What we’ll pay attention to in this section are the auth and

auth-<type> list attributes which allow the user to be authenticated

through the dynamic authentication “styles”. By default auth only

contains the passwd entry, however there are many more available.

OpenBSD lists the following modules, each having their own separate

manpage documenting how to set them up (login_<style>).

- passwd

- reject (doesn’t allow login)

- activ

- chpass

- crypto

- lchpass

- radius

- skey

- snk

- token

- yubikey

These are found in /usr/libexec/auth/login_<style>. Obviously, there’s

also a way to write your own local authentication as a separate script,

but it’s recommended to give them names starting with - to avoid

collision with existing ones.

We won’t dive into how to write such script but let’s mention that the

IPC protocol is based on file descriptors where strings are written

(authorize, reject, challenge, etc..).

When a program needs to authenticate something it asks for a “service”

which can either be login, challenge, response. In most cases,

the login service is the one asked for.

You might also wonder how to specify the authentication method you want

to use upon login when there’s a whole list enabled for a user. That’s

done by adding after the username a colon : and the name of the auth

method afterward. Example:

login: username:skey

otp-md5 95 psid06473

S/Key Password:

What you need to remember: BSD Auth is a way to dynamically

associate classes with different types/styles of authentication

methods. Users are assigned to classes and classes are defined in

login.conf, the auth entry contains the list of enabled authentication

for that class of users.

PAM

PAM, the Pluggable Authentication Module, not to be confused with Privileged Access Management — a generic term for corporate infrastructure sensitive data security (something used with IdM) — plays a similar role as the BSD Auth styles, that is to delegate the authentication mechanism to a wide-array of different technologies.

It achieves this through a suite of shared libraries allowing the admin

to pick how applications authenticate users (in contrast with separate

programs communicating via IPC of BSD Auth). If an application is

PAM-aware, if it is using the PAM library to perform authentication,

then the mechanism can be switched on the fly without touching the

application itself.

Instead of relying on /etc/passwd, /etc/group, or the shadow password

suite to map identifiers, PAM will handle and often override this mapping

and multiple of other features provided by them. PAM is now the default

authentication mechanism on most systems, including Linux and FreeBSD,

that means that the default login interface to the system will use it

(login).

The PAM modules are not only limited to authentication but also include

standard programming interfaces for session management, accounting,

and password management.

One big caveat is that there isn’t a single PAM implementation but

multiples: OpenPAM, Solaris PAM, and Linux-PAM.

OpenPAM is a continuity of Solaris PAM pushed forward by FreeBSD as

part of a USA DARPA-CHATS research contract program, the lib is used

by FreeBSD, PC-BSD, Dragonfly BSD, NetBSD, macOS, IBM AIX, and some Linux

distributions. On Linux, Linux-PAM is almost the default authentication

everywhere, now being a base meta package dependency.

Both of them are very similar in their workings and only differ on a

few points. PAM was somehow indirectly standardized in POSIX, from what

I understood, as part of a sub-specs within another spec in the X/Open

Single Sign-on (XSSO) standard. That particular specification having

a scope englobing more than PAM itself (single sign-on). But it’s also

standardized in OSF-RFC 86.0 (the Open Software Foundation later merge

to become The Open Group): Unified Login With Pluggable Authentication

Modules (PAM).

When it comes to differences, Linux-PAM has a wider range of modules

available, linking these modules is more dynamic than OpenPAM which

is more rigorous. The location of the modules and headers is different

(macOS has them in <pam/pam_appl.h> while most systems have them

in <security/pam_appl.h>) , even modules doing similar functionalities

could take different parameters and be named differently between OpenPAM

and Linux-PAM. Code-wise when implementing modules, the API syntax and how

it’s used is inconsistent, they each have specific structures and could

have their own header files. While OpenPAM says that it follows the PAM

specs to the letter, when taking a closer look it’s not really true. As

for Linux-PAM, it contains a lot of extensions to the specs. Another

difference is that Linux-PAM has more community support and documentation

than OpenPAM.

PAM, like so many other project, makes life harder by using its own

vocabulary, which is ill-defined across implementations, but somewhat

makes sense if someone takes the time to explain it.

An account is the set of credentials/identifiers an applicant wants

to get access to from the arbitrator. The applicant is the entity

requesting it, and the arbitrator the entity that has the ability

to grant/deny and verify that request. The applicant performs the

request through a client, an application that is PAM aware, toward

a server, the module or piece of code acting on behalf of the

arbitrator. The server can be grouped into services providing the

same functionality, usually a service has the same name as the program

(ex: ssh using ssh service). This request asks for a facility

which is one of the predefined function in the API categories provided

by the PAM library: authentication, accounting, session management,

and password management. The request will launch a chain, which is

a sequence of ordered modules that will handle the request. Multiple

requests over the usage of the application create a transaction or

conversation. The client usually needs to use its token, which

could be a password or any other piece of information to prove its

identity. The session to use the account is what is returned to the

client after its successful request. Finally, the set of all rules and

configurations statements that handle a particular request are called

its policy.

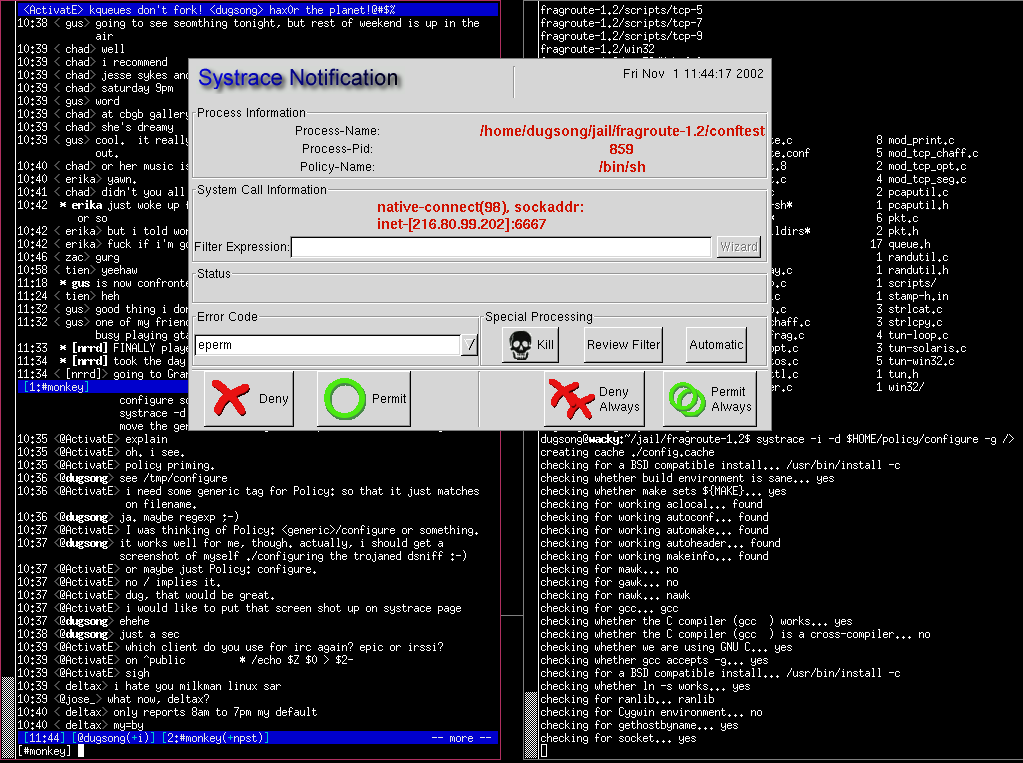

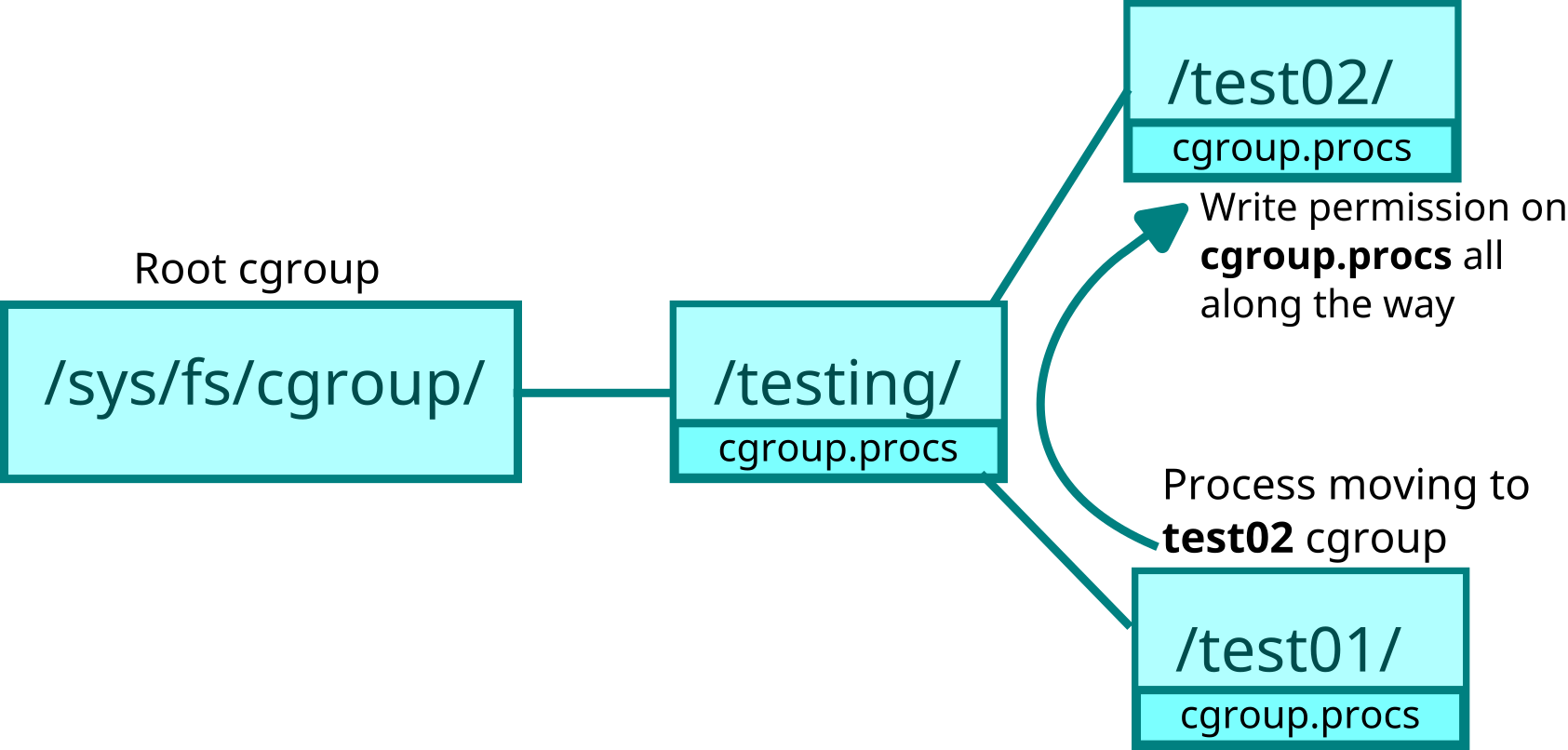

This figure from the Linux-PAM documentation makes things much clearer than the above mumbo-jumbo.

+————————————————+

| application: X |

+————————————————+ / +——————————+ +================+

| authentication—[————>——\——] |——<——| PAM config file|

| + [————<——/——] PAM | |================|

|[conversation()][——+ \ | | | X auth .. a.so |

+————————————————+ | / +—n——n—————+ | X auth .. b.so |

| | | __| | | _____/

| service user | A | | |____,—————'

| | | V A

+————————————————+ +——————|—————|—————————+ —————+——————+

+———u—————u————+ | | |

| auth.... |——[ a ]——[ b ]——[ c ]

+——————————————+

| acct.... |——[ b ]——[ d ]

+——————————————+

| password |——[ b ]——[ c ]

+——————————————+

| session |——[ e ]——[ c ]

+——————————————+

In practice a good way to understand how PAM works is to take a look at the files provided by its package, how to configure them (syntax), and then take a look at a few example flows.

There are three categories of locations that the package installs files at: the configuration files for the services and modules, the module libraries, and the documentation.

The PAM configurations are found in either a single file /etc/pam.conf

or in a series of configuration files named after every service in

/etc/pam.d. The main difference is that if all the services are in a

single file, then every lines related to that service will need to be

prepended with its name, meanwhile in /etc/pam.d, the name of the file

is the name of the service (ex: passwd). For extra services that

are outside the base system, the package itself will need to install

its policy file in these locations.

Specific modules configurations are found in /etc/security. Every

module can have its own syntax and settings, either when used in the

PAM configuration or from a separate module-specific config file (ex:

faillock.conf).

The modules, the .so dynamic libraries, are installed in either

one of these locations: /lib/security or /lib64/security, or in

/usr/lib/pam for OpenPAM. The default installation should come with

a good set of useful modules but you can always create your own, which

we won’t dive into.

The PAM documentation comes in a set of man pages (pam(8), pam.d(5))

and for Linux-PAM an extensive built-in administration guide as HTML

pages in /usr/share/doc. Additionally, every module comes with its

own man page that starts with pam_, for example pam_faillock(8).

Now that this is out of the way, we can dive into how to configure the

policy (set of all rules) of a service. The first rule is that if no

service file or entry matches, the other service will be used as a

black hole. The other service usually contains a denial policy along

with warnings.

Within every service policy file we find sequential rule lines calling

modules to perform a feature of one of the API facility (a task

category in the API management: authentication, accounting, session,

and password), furthermore we could also find include or substack

entries that allow stacking other configuration files as dependencies.

The syntax goes as follows:

service type control module-path module-arguments

The service part should be omitted if using the /etc/pam.d style

of configuration. The types is the facility we’ve been talking about,

asking for a certain API category in a module, one of these:

account: For non-auth related account managementauth: Two aspects of user auth, find who the user is by prompting password, and grant group membership and privilegespassword: For updating auth token associated with usersession: For doing things that need to be done before/after giving access to a service, including logging, opening data exchange, etc..

NB; The type can be prepended with a - to silence logging on missing

library errors.

The module-path can either be the full filename or relative path of the

.so file.

The module-arguments are a space-separated list of arguments to modify

the behavior of the module. Every module manages this themselves,

and you can usually find what the argument means in their man page

(pam_<module>). Moreover, as we said before, this can also be configured

in /etc/security if the module offers a configuration file there (ex:

/etc/security/limits.conf).

Lastly, the most flexible part of the rule is the one before the module,

the control part which allows control flow based on the success or

failure of the module: stopping processing, continuing, jumping a few

lines, etc..

There are two syntax for that, either a simple word, or square-bracket of

value=action. Here’s the list of words:

required: failure leads to PAM-API returning failure, but only after the other modules have been invokedrequisite: like required but returns directly or superior stacksufficient: if module succeed and no prior module failed, it will return successfullyoptional: success or failure is not importantinclude: include all the lines from the config file specifiedsubstack: include line of the config file as arg. It differs from include in that the action in the substack doesn’t skip the rest of the complete module stack.

Otherwise, it will use the advanced syntax: [value1=action1

value2=action2 ...], the values are what is returned by the module and

the actions can either be a predefined ones such as ok, ignore,

die, or a digit which will indicate how many lines/rules to skip in

the policy. Example from Linux-PAM calling pam_unix.so module:

auth [success=1 default=bad] pam_unix.so try_first_pass nullok

This means that on success of pam_unix the next line will be skipped.

That’s about all there is to PAM, let’s have a look at a few modules as examples.

The pam_unix module is one that will use the standard Unix

authentication we’ve seen before, relying on the shadow password

suite. On Linux-PAM, it can take some additional arguments that are

interesting such as remember which will remember the last N password

on change, the specific encryption algorithm to use for the password,

the minimum password length, and more. Most Unix-like systems have a

similar module, either with the same name or split into different ones

(Solaris has pam_unix_auth, pam_unix_account, and pam_unix_session,

as separate modules).

On Linux-PAM, the pam_nologin module prevents non-root users from login

to the system when /var/run/nologin or /etc/nologin file exists.

Let’s mention other modules important for access control:

pam_group: grant group membership dynamically according to a specific syntax.pam_limits: Limit the system resources that can be obtained in the user-session.pam_setquota: Limit the disk quotas on session start.pam_access: Control who can access the system based on login name, host or domain names, IP, and more- etc..

Furthermore, there are many modules related to using devices such

as PKCS#11 enabled, HSMs, LDAP, etc. to authenticate the user to a

system. And there are also multiple modules that have logging and audit

options (We’ll have a full section on auditing too, as this is a must).

Most distros package managers offer hundreds of different modules for

countless types of integrations, ranging from captchas to time-based

one-time password (ex: Google Authenticator).

Overall, PAM alleviate the weight from applications by taking it upon itself. The admin then has a centralized place to configure each service authentication, accounting, and password management. It’s especially useful since PAM offers a lot of modules, much more than BSD Auth, and a high-level of flexibility.

What you need to remember: PAM is the most popular way to

dynamically perform authentication through modules, it’s used on

systems such as FreeBSD, macOS, and Linux. There exists multiple

PAM implementations that differ in a couple of ways but not in the PAM

configurations: there’s OpenPAM and Linux-PAM. There are countless PAM

modules, each have their own man page and can be configured independently

in /etc/security. The PAM configuration file (/etc/pam.d) is

per-module and consist of a series of entries read sequentially, each

calling a module for a purpose. The important part of the config is

the control flow which allows to take actions based on the response of

the module.

Super-User and Switching Subject/Domain/User

We’ve seen how we can prove who we are, but sometimes there’s a need

to swap subject, to become someone else. This was mentioned briefly

as switching domain dynamically in a previous section. That switch can

either be temporary, delegating authority to run a command, or permanent

by continuing running the session as another subject.

Before initiating the discussion, let’s have a meaningful detour to talk

about the concept of super-user, it will come in handy when uncovering

the rest of the topic.

Super-User Concept

A super-user is a generic computing concept of a subject that has

administrator privileges on the system, that is, they bypass all the

rest of the security features and can carry all the possible actions,

absolute power over the system. This applies in all modes, single- and

multi-user, and ranges from the ability to change permissions of other

users, to using low-numbered ports (or whatever is configured as such),

to manipulating raw devices.

This could be a unique user or a role/group or any other mean to associate

an identity with this feature.

On Unix-like systems, it is the user with UID zero (uid=0) that gets

this privilege. Historically, this account’s name is root in relation to

the / root of the file system and the user who owns it, but the actual

name is irrelevant. Some systems such as FreeBSD even provide additional

alternative super-user called toor (with a non-standard shell).

Because of this, on Unix we often refer to super-user as root, but that

is not very precise, so we can talk of root privileges instead.

As we mentioned previously, it’s a security risk to have multiple users

with the same UID, however on most systems it’s still technically feasible

to have these entries in the passwd file.

You can check your own user id by doing:

> echo $UID

Only the root user has the ability to change its UID to that of another

user but once it does, there’s no way back. This drop in privileges, as

a security measure, keeps the integrity. No real widely-used command-line

utility exists to do this root drop apart from Bernstein’s setuidgid and

derivatives

script (Part of daemontools) and Linux’s runuser from util-linux

(also used for daemons privilege drop).

As we’ll see in the capability and access-control list sections later,

there’s many more ways to define a super user, and many more identifiers

(real and effective user and group ids). We can note on Linux that the

super-user is also a Linux’s capability role defined as CAP_SYS_ADMIN

or TrustedBSD’s CAP_ALL_ON.

After reading this we can clearly say, based on the principle of least

privilege, that the super-user should not be used to perform daily

tasks and should be restricted to specific scenarios only as otherwise

it could lead to disastrous damages with no safety net. Yet, by default

Unix-like systems are made in such a way that ordinary users don’t have

access to most part of the systems, so it can be tempting to unnecessarily

rely on the root account and its derivatives.

For that reason, it is preferable to rely on a middle-man, a mechanism

or facility as we’ve come to call them, that would intermediate between

user switching. That’s what we’re going to see.

Yet, we’ll need another preamble to introduce these tools, because as

we said: Only root privilege allows to change between UIDs. The trick

to allow normal users to do this are found in the following two terms:

setuid and setgid.

What you need to remember: A super-user is one with full

privileges. On Unix-like system that’s a user with UID=0, the user name

root doesn’t matter, it could be anything else. This is a possible issue

with double entries with UID=0 in /etc/passwd. Only the super-user

can change its UID.

setuid and setgid

The setuid and setgid bits, short for set user identity and set group identity, are special access rights flags that can be attached to files. As we’ll see later, files have a series of other access rights attached to them (read, write, execute), and are owned by a group and a user.

The special flags, if set on an executable, allow the user running it to gain the privilege of the owner of the file, user or group depending on the flag set. This means we can bypass the rule saying that none other than root can switch user, at least we can bypass it temporarily for the time the executable is running. In many instances of Unix-like Os, for security reasons, the process gaining privilege is stopped from self-modifying its own process memory, otherwise that would lead to privilege escalation.

When set on a directory, the files and directories created underneath will inherit the permission set in these special bits. However, not all Unix-like systems will do this for setuid, as far as I know only FreeBSD allows to configure setuid to work similar to setgid when set on directories.

To add setuid on a file we can do:

> chmod +s ./executable

We’ll see how to set all kinds of flags on files and more later, but now we’re armed with the knowledge required to understand the rest of this section. For now, just keep in mind the trick that allows us to switch user through an executable owned by them.

One thing we need to add here is that setuid and setgid are not

only bits used on files, but also exist as functions specified by POSIX

(often implemented as system calls). Along with them we have another set

of functions called seteuid and setegid, for set effective UID and

set effective GID. Additionally, there’s a combination of both previous

ones found in setreuid and setregid, for set real and effective

user and group IDs. Furthermore, there’s even a third and fourth set of

functions setresuid and setresgid for setting the previous ones along

with the saved user ID and group ID, and setfsuid and getfsuid

to set the filesystem IDs.

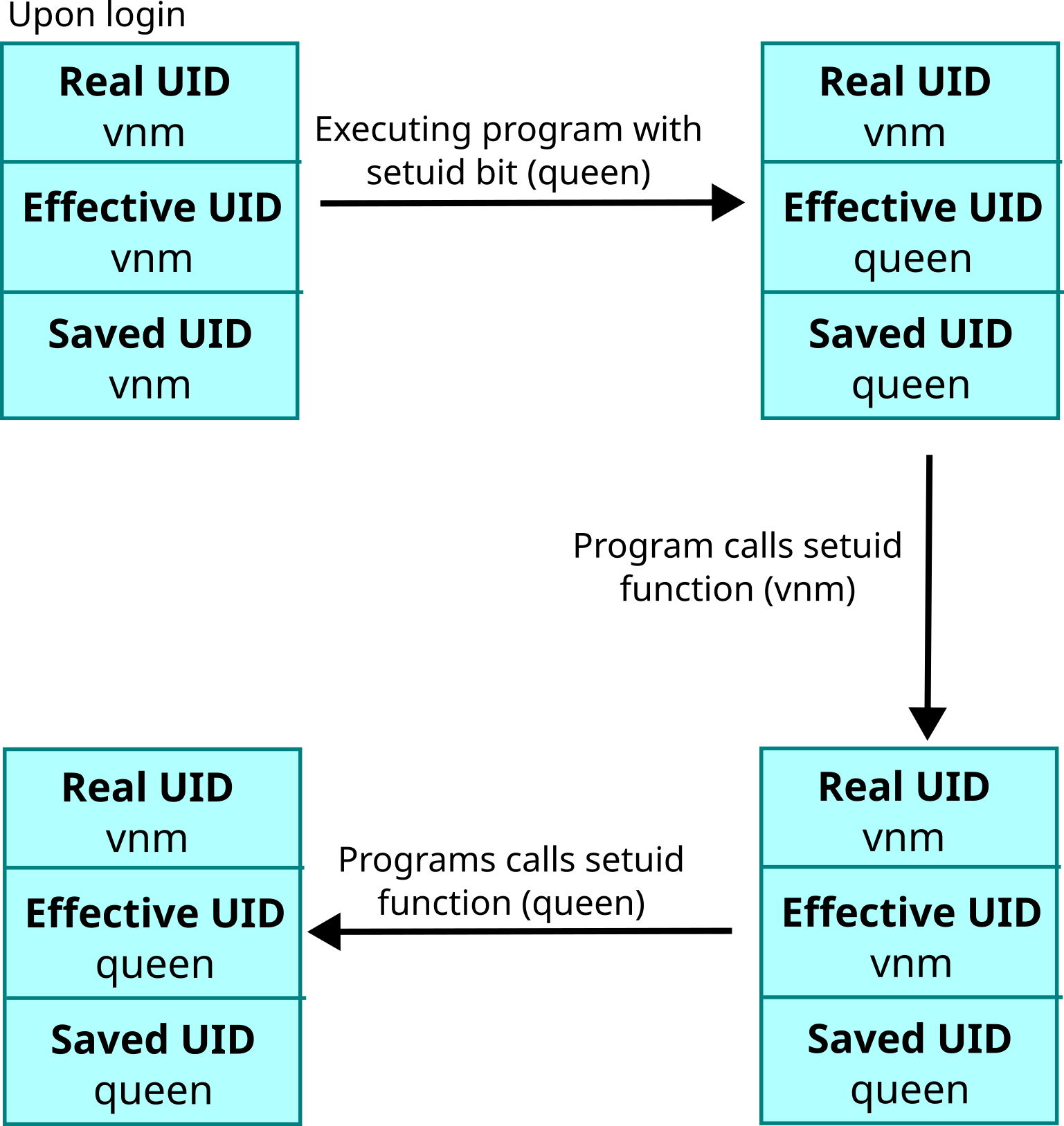

We’ve seen how it made sense to have bits such as setuid and setgid

on a file, which would allow the process to be run as the UID of the owner

of that file, but what about a calling a function programmatically in

a process: who can perform them, how, and what’s the difference between

the real, effective, saved, and filesystem IDs.

Remember when we said that after root drops privileges there’s no way

to gain it back, well euid, the effective user id, file system user id,

and saved set-user ID are tricks to bypass that.

When a process is executed it gets “credentials”, an identity allowing

it to perform tasks. These include the process identifier, the parent

process identifier, the session id, but more importantly for us: the

real and effective user and group ID.

As you can imagine, upon login, a user gets associated with the IDs it

has in the password file we’ve seen, these are its real user and group

identifiers. Yet at the same time, in the background all the other

identifiers, effective, saved are also set to these values. You can

fetch your user ID using getuid(2) function call for instance.

The processes spawned by a user inherit these IDs, and thus in most

cases a process real and effective user and group id are the same,

making them redundant.

However, these fall into place when executing one of the setuid bit

program we’ve mentioned, such as ping for example. At this moment the

process will change its effective user or group id to the one of the

file owner. The kernel will use the effective IDs to make most privilege

decisions (with some exceptions), thus allowing the behavior we’ve seen.

So far, it means that the real user ID is who is actually owning the

process, and the effective user ID is the one the OS looks at to make

decisions.

The reason the real user ID is stored is to allow to switch back to it. To make this happen, the effective user ID is backed up in a temporary place called the saved-set user ID, and even when the real-user ID is swapped with the effective user ID, we we can get it back. This mechanism allows a super-user to drop its privilege to a normal user, and then switch back, thus keeping intact the least-privilege principle.

The exceptions to the privileges allowed by the effective IDs depend

on the Unix-like system’s implementation. On most systems, it allows

accessing the file system as the effective UID, however on Linux this is

done through the file system ID (fsuid) instead, which is usually equal

to the effective user ID unless explicitly set otherwise (setfsuid).

Additionally, depending on the semantic, the creation of files might

or might not inherit the effective ID. For instance, on BSD Unix the

group ownership of files created under a directory is inherited from

the parent directory, while on AT&T UNIX and Linux the files created

inherit the effective group ID.

The effective user ID can be propagated, for example when spawning a

new shell, but that depends on the shell used and the parameters passed.

Let’s also note that a process can be killed if either the real or effective UID match, this allows stopping a process that a user starts as setuid.

The above functions mentioned should make more sense, but why have

so many of them.

On most systems the behavior of setuid depends on the user, for

super-user everything is allowed and all IDs are set to the one passed,

while for normal user it will only set the real user ID and it will be

allowed depending on the effective and saved-set user ID (On Linux there’s

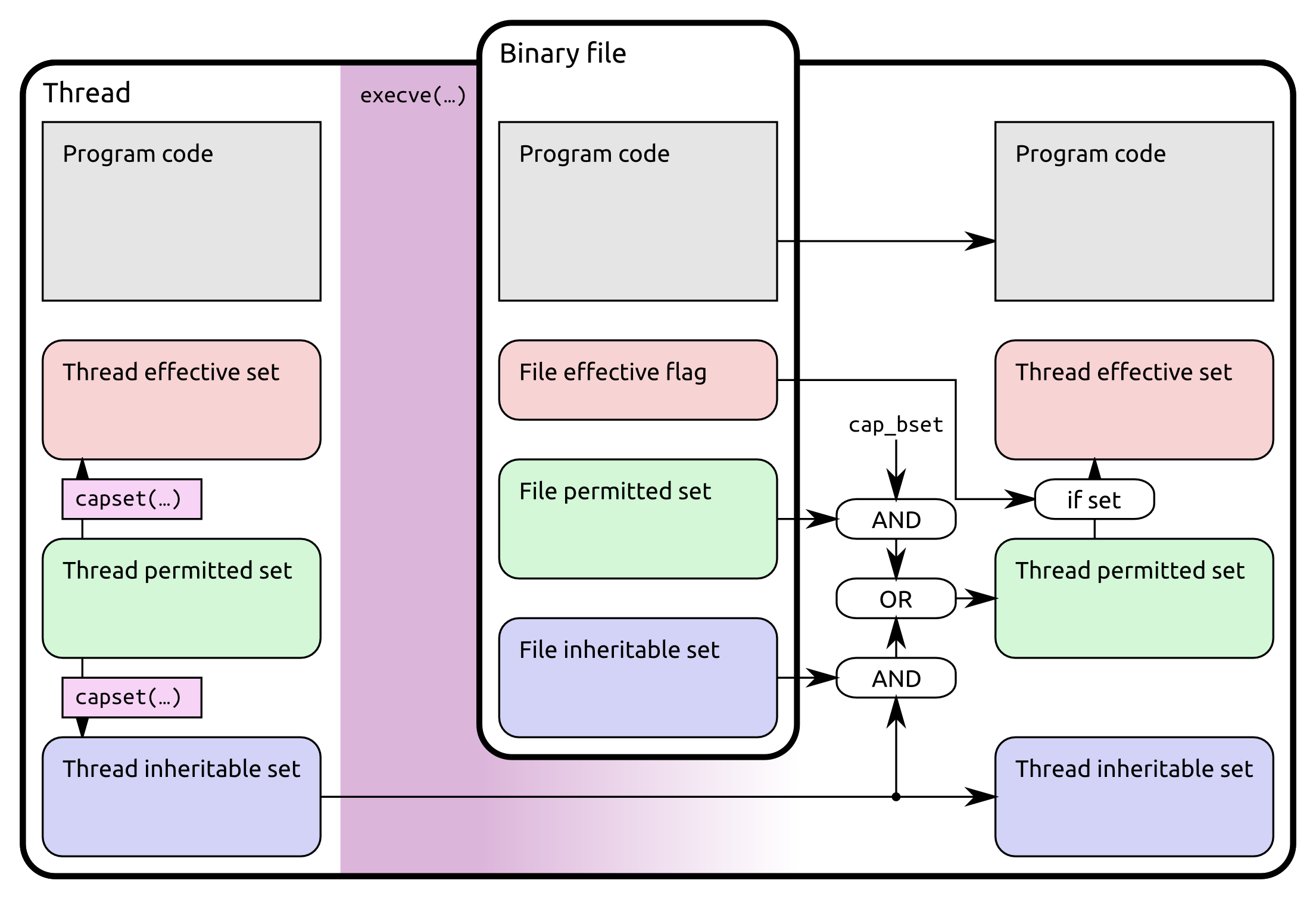

a special capability called CAP_SETUID which we’ll see in the POSIX Capabilities section).

The seteuid will only set the effective user ID, and let you perform

this call if the ID passed is either the saved-set ID or the real user ID.

The setreuid sets both of them, it will also change the saved-set user

ID to the new effective user ID.

// for Linux example getresuid

#define _GNU_SOURCE

#include <stdio.h>

#include <unistd.h>

// 1000 is vnm

// 996 is queen

int main() {

// all UID are 1000 on login

// this is setuid bit to user queen 996

int ruid, euid, suid;

// this is not POSIX

getresuid(&ruid, &euid, &suid);

printf("real uid: %d\n", getuid());

printf("effective uid: %d\n", geteuid());

printf("saved uid: %d\n\n", suid);

// effective and saved uid are now 996

// real uid is 1000

setuid(1000);

printf("real uid: %d\n", getuid());

printf("effective uid: %d\n", geteuid());

getresuid(&ruid, &euid, &suid);

printf("saved uid: %d\n\n", suid);

// effective and real UID are now 1000

// saved UID is still 996

// allowed because saved

setuid(996);

printf("real uid: %d\n", getuid());

printf("effective uid: %d\n", geteuid());

getresuid(&ruid, &euid, &suid);

printf("saved uid: %d\n\n", suid);

// effective and save UID are now 996

// real UID is still 1000

}

This surge of information is dizzying with its plethora of IDs but will come handy in the next few sections.

What you need to remember: setuid and setgid allow someone to gain the privilege of the user or group owning an executable when calling it. There’s a dance of real, effective, and other types of IDs allowing this to happen. That’s a way to bypass the restriction saying that only root can change its UID and that there’s no way to switch back and forth.

su and newgrp

Setuid and setgid are what makes administrations tools that we’ve seen

before work such as the ones manipulating the shadow password suite

configuration files, ex: changing your password as a normal user.

They also allow the creation of generic tools to switch between users

and groups: su, substitute user, and newgrp substitute/change group.

Both of these are straight forward, doing what is intended, switching to

the user or group but asking the user or group password beforehand. If

user vnm wants to become user queen after issuing su - queen they’ll

have to enter queen’s password. Meanwhile to switch to another group

with newgrp secret they’ll have to enter the secret group’s password

su is more advanced than newgrp, allowing to run interactively

or non-interactive shell, to pick the shell that is going to be used

(-s), set the environment variables and whether to start as a login

shell, to change the directory to the home of the user or not (-),

to run a command and exits afterward (-c), etc.. su also has the

possibility to switch group (-g and -G) but this is only allowed for

the root user, which isn’t as practical as newgrp.

By default su without arguments switches to root and without changing

the directory.

On systems using PAM, su can have its own policy file, allowing

special authentication behavior, and further auditing and logging (apart

from the default logging upon login behavior) of who has used the command.

For example, on FreeBSD installations it is common to have a rule that

only allows users part of the wheel group to use su, this is done

through the pam_group module restrictions in the su PAM policy file.

Moreover, on BSD systems the su utility can be used to switch between

login classes by precising it as an additional -c option. Weirdly,

this is the same option used to run commands so you have to precise it

twice to make it work.

For instance, to switch to the staff login class of login.conf:

su -c staff bin -c 'makewhatis /usr/local/man'

There are countless reasons why you’d want to switch from one login class to another, one of them is to raise your resource limits, but as we’ll see later it can also be used in an Mandatory Access Control system to raise or lower privileges.

While the setuid and setgid flags solve a lot of system management issues they also open the door to an astonishing number of security risks. It is the source of the so called “confused deputy” problem: One in which one program of higher privilege is tricked by another lower privilege program into doing something it wasn’t supposed to do, misusing its authority on the system (capability-based security is one of the solutions to this problem, that we’ll dive into later).

For this reason, a common recommendation is to only use the -c option

with su to exits immediately after the execution of the command.

Another issue is that su doesn’t create a new

pseudo-tty for the session, which can lead to

escalation, thus the -P option should be passed to achieve this.

Yet, this isn’t enough, FreeBSD even disables the newgrp command

by default by not assigning the setuid flag to it, considering it too

insecure and discouraging it.

The alternative to all these is to use either a completely different

mindset or to use a relatively more configurable versions of the previous

tools: sudo and doas.

What you need to remember: su and newgrp are tools that rely

on setuid to substitute the user and group. To perform the command the

user needs to be aware of the password of the subject they’ll switch to.

doas and sudo

The tools sudo, substitute user and do, and doas, literally “do

as” someone else, offer the same functionality as su and newgrp,

allowing it through the same setuid trick (changing all the IDs real

and effective), but differ in some minor but important theoretical and

practical ways.

First and foremost, while su and newgrp require the password of

the subject we’ll switch to, both of sudo and doas don’t, instead

they require the current user’s password, the one invoking the command,

or having a rule in the configuration file allowing such action without

authentication.

Second of all, sudo and doas have more granular configurations,

allowing a range of things that aren’t possible with su such as only

allowing particular commands or certain hosts, logging and auditing,

and more.

Thirdly, both of these tools follow the mindset of executing a single

command and exiting afterward, which we talked about earlier as a good

practice.

Lastly, these tool have a “persist” feature which allows configuring

a timeout to not re-ask for the password after authenticating.

For the actual authentication part, similar to su, sudo usually

relies on PAM library and doas, which is usually on OpenBSD, relies

on BSD Auth. However, there exists a portable version of doas, OpenDoas

which works with PAM.

With doas, because it relies on BSD Auth, you can specify the

authentication style to use when authenticating with the -a argument.

By default, if you call sudo or doas with only the command you want

to run, it’ll execute it as the root user (UID=0).

> sudo cmd

> doas cmd

They also allow running it as another user through the -u argument,

and sudo also allows running the command as another group with the

-g argument.

One particularity with sudo is that when invoking the command it

will set two environment variable with the invoking user’s values:

$SUDO_USER, $SUDO_UID, $SUDO_COMMAND, and others. This can be

useful to keep track of who has initially called sudo.

Let’s take a look at the rules we can configure for both tools. sudo

has its configuration files in /etc/sudoers or /etc/sudoers.d along

with sudo.conf, while doas has a single simple configuration in

/etc/doas.conf.

We can start with doas since it’s simpler. There’s no specific tool

to edit doas.conf however you can double check everything is OK in

the configuration by issuing doas -C /etc/doas.conf.

The file contains a series of line with rules used to match what is allowed. They have this format:

permit|deny [options] identity [as target] [cmd command [args ...]]

This is pretty straight forward, it either permits a command issued by

someone to be executed as someone else, or not.

The identity can be either a username or a group, to specify it as group

you have to prepend it with a colon (:). The target is the user we’ll

be substituted with, or if not present all of them are allowed.

Additionally, the rule can be restricted to only allow a particular

command with or without certain arguments (args). In the options

part, there can be a couple of things such as setting or keeping

environment variables, making the rule not require a password, use the

“persist” option, and more.

Here are two examples:

To allow users in the group wheel to run any command as any other user:

permit :wheel

To allow members of the test group to run helloworld without password

as root:

permit nopass :test as root cmd /usr/bin/helloworld

Let’s move on to sudo’s configurations, which consist of the same

idea as doas: lines with rules. To edit the sudoers file (or

/etc/sudoers.d set of configurations), which is the equivalent

of doas.conf rules, it is recommended to rely on the visudo

tool. Furthermore, sudo offers a nifty option to debug the rules

applying to the current user: sudo -ll which will display whatever

applies at the moment.

sudo also has an additional configuration file for its “frontend”,

unrelated to the rules but to display, plugins, logging, and debugging

(sudo.conf). Some plugins can even allow storing the rules remotely,

such as in LDAP (See sudoers.ldap man page).

> sudo -ll

Matching Defaults entries for vnm on identity:

passwd_tries=100

User vnm may run the following commands on identity:

Sudoers entry:

RunAsUsers: ALL

Commands:

ALL

Sudoers entry:

RunAsUsers: root

Options: !authenticate

Commands:

/usr/bin/pacman ^[a-zA-Z0-9 -_'"]+$

Sudoers entry:

RunAsUsers: root

Options: !authenticate