Take a look at your process tree: you might notice a new service, rtkit-daemon, the RealtimeKit Daemon. It seems nobody on the internet is talking about it, so let’s explain what it’s about in this article.

A Refresher on Multitasking

First, let’s refresh our minds about multitasking OSes.

In a multitasking OS, we have many “tasks” that share the same resources. One of these resources is CPU bandwidth, aka time; we call that time-sharing. The OS CPU scheduler is the piece of software that dictates the scheduling policy: it selects which task runs at what time, for how long, and how the CPU should be relinquished to allow switching to another task. It goes without saying that this program is one of the most important in the OS: without it, nothing happens. Furthermore, it has to be calibrated properly, since switching between tasks can be quite expensive: the whole execution context has to be saved and loaded again each time.

When we mention the word “task”, we generally think of processes and threads. What’s the difference on Linux?

This vocabulary comes from OS theory and philosophy. There, we talk of processes, instances of programs under execution, and threads, parallel units of work that share the resources and memory of their process.

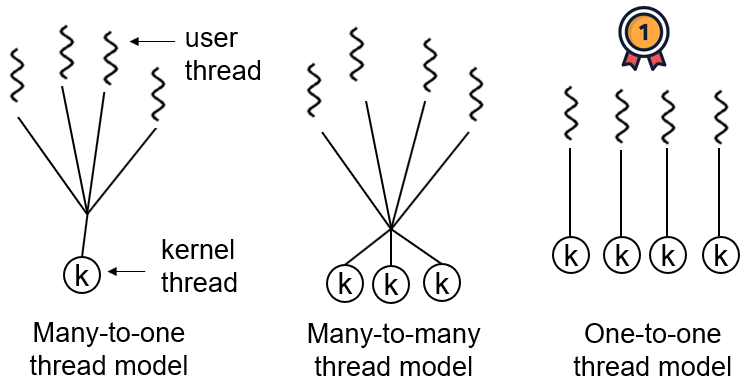

Theoretically, there are different models of how the threading mechanism is implemented. The difference mostly lies in a distinction between user-level threads and kernel-level threads, and in how they’re mapped to one another. Kernel-level threads are thought of as what is actually executed and handled by the OS, virtual processor entities, and user-level threads are what the user actually wants to run. These can be mapped one-to-one, many-to-one, or many-to-many, depending on the implementation.

Fortunately for us, on Linux (at least since 2.6 with NPTL) it’s simple: all tasks are equal from the kernel’s perspective, and it’s a one-to-one mapping. Indeed, they all rely on the same structure within the kernel, task_struct, and are scheduled by the same unified scheduler. They are all viewed as scheduling entities.

Hence, on Linux, there’s no real concept of a thread from the kernel’s perspective; it’s only a user-space concept. Instead, each thread is viewed by the kernel as a separate process/task with its own flow of execution.

Yet, Linux does differentiate between tasks based on their resources and context. It has the notion of processes, lightweight processes, and kernel threads (different from the theoretical kernel thread we mentioned earlier).

A process is what you think it is: the execution of a program. Meanwhile, a lightweight process, aka LWP, is what you’d generally call a thread: a process that shares some of its context, such as its address space, process ID, and resources, with another process. Lastly, kernel threads, in the context of Linux, are processes that the kernel launches and manages, and that share the context and resources of kernel space: they have neither a user address space nor user stacks. These last ones can only be created by the kernel or its privileged components, such as drivers, and they serve system functions.

Again, keep in mind that all these processes are scheduled by the same scheduler.

A look at the process tree helps in understanding the distinction and learning a few things. For this, there’s our good ol’ friend ps. Its manpage gives a few interesting definitions:

- PID (process ID) and TGID (thread group ID) are identical.

- LWP (lightweight process ID), TID (thread ID), and SPID (shared process ID) are also identical. They are the sub-identifiers for lightweight processes.

- NLWP, the number of lightweight processes, is more or less equivalent to the number of conceptual “threads” for a given process.

Let’s check that with ps -eLf. You can also notice how kernel threads are usually shown within square brackets:

- -e: all processes
- -f: full-format listing
- -L: show threads

UID    PID  PPID   LWP  C NLWP STIME TTY          TIME CMD
root     1     0     1  0    1 Mar04 ?        00:00:11 /sbin/init
root     2     0     2  0    1 Mar04 ?        00:00:00 [kthreadd]
root    18     2    18  0    1 Mar04 ?        00:00:35 [rcu_preempt]
vnm    995   960   995  0    1 Mar04 ?        00:00:02 2bwm
vnm   1069   677  1069  0    5 Mar04 ?        00:00:09 /usr/bin/dunst
vnm   1069   677  1072  0    5 Mar04 ?        00:00:00 /usr/bin/dunst
vnm   1069   677  1073  0    5 Mar04 ?        00:00:00 /usr/bin/dunst
vnm   1069   677  1075  0    5 Mar04 ?        00:00:00 /usr/bin/dunst
vnm   1069   677  1080  0    5 Mar04 ?        00:00:00 /usr/bin/dunst

In this example, dunst has 5 lightweight processes, all sharing the PID 1069. Furthermore, if you’re a careful observer, you’ll notice that a single-threaded process has the same PID and LWP values. The same holds for the main thread of a multithreaded process (LWP 1069 above).
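If you’d rather check this from code than from ps, here’s a small sketch. It counts the entries under /proc/<PID>/task, one per lightweight process, which is what ps reports as NLWP. It also compares the calling thread’s TID with its PID. The function names are made up for the illustration, and the sketch assumes /proc is mounted as usual.

```c
#include <dirent.h>
#include <stdio.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* Counts the entries of /proc/<pid>/task: one per lightweight process
 * (thread) of the process, i.e. what ps shows as NLWP.
 * Returns -1 if the directory cannot be opened. */
int count_lwps(pid_t pid) {
    char path[64];
    snprintf(path, sizeof path, "/proc/%d/task", (int)pid);
    DIR *dir = opendir(path);
    if (dir == NULL)
        return -1;
    int count = 0;
    struct dirent *entry;
    while ((entry = readdir(dir)) != NULL) {
        if (entry->d_name[0] != '.')   /* skip "." and ".." */
            count++;
    }
    closedir(dir);
    return count;
}

/* NLWP of the calling process. */
int count_own_lwps(void) {
    return count_lwps(getpid());
}

/* 1 if the calling thread's LWP (TID) equals the PID, which is the case
 * for the main thread of any process; 0 otherwise. */
int lwp_equals_pid(void) {
    return (pid_t)syscall(SYS_gettid) == getpid();
}
```

Called from a plain single-threaded program, count_own_lwps() should give 1 and lwp_equals_pid() should give 1.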

Now that we’ve refreshed our minds about multitasking, let’s also briefly go over a process’s lifecycle.

In user space, when we think of process creation, we usually think of the fork and execve combo. On Linux, however, fork is a wrapper over clone(2), which allows for more control over how a process is created. Different flags can be passed to clone to pick which resources are shared with the parent process. These flags are what allow us to create lightweight processes.

pid_t pid = clone(childFunc, stackTop,

SIGCHLD | CLONE_VM | CLONE_FS | CLONE_FILES | CLONE_SIGHAND,

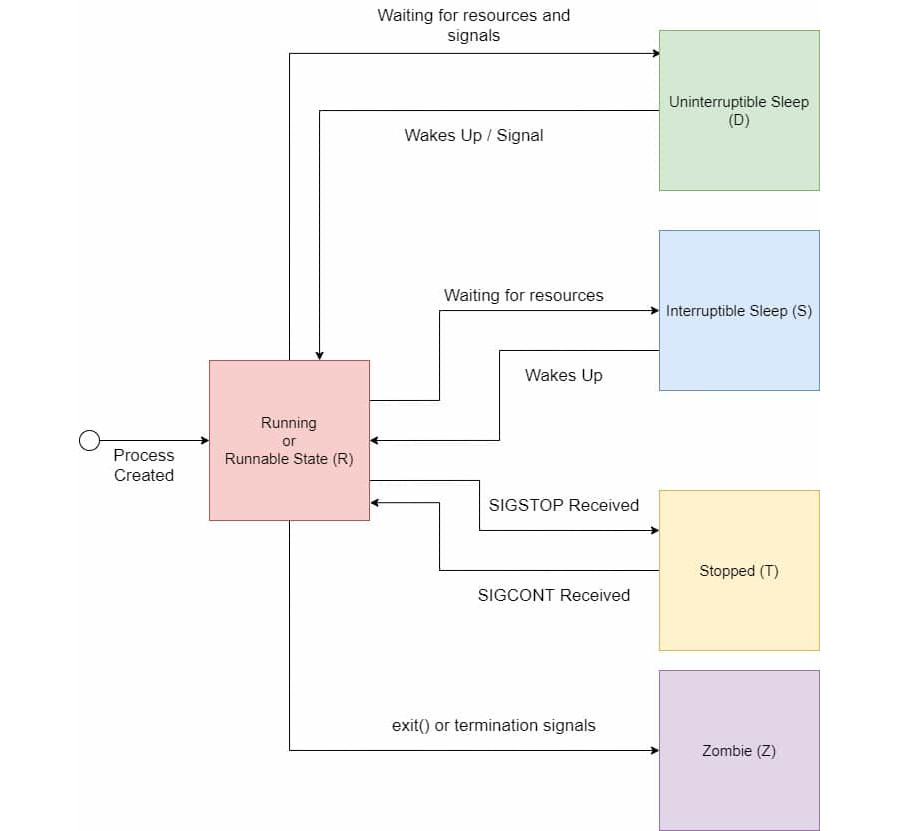

NULL);Once a process is created it then stays in one of the states seen in this pic.
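To make the clone(2) flags more tangible, here’s a minimal sketch of that call inside a complete function. The names spawn_lwp_and_read, child_func and shared_value are made up for the illustration, and the 1 MiB stack size is an arbitrary choice. Because of CLONE_VM, the value the child writes lands in the parent’s memory. With a plain fork(), the child would write into its own copy and the parent would still read 0.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <signal.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>

#define CHILD_STACK_SIZE (1024 * 1024)

/* Written by the child, seen by the parent only thanks to CLONE_VM. */
static volatile int shared_value = 0;

static int child_func(void *arg) {
    shared_value = *(int *)arg;   /* writes into the parent's address space */
    return 0;
}

/* Creates a lightweight process sharing memory, filesystem info, file
 * descriptors and signal handlers with the caller, waits for it, and
 * returns the value the child stored (or -1 on error). */
int spawn_lwp_and_read(int value) {
    char *stack = malloc(CHILD_STACK_SIZE);
    if (stack == NULL) {
        perror("malloc");
        return -1;
    }
    shared_value = 0;
    /* The stack grows downward on common architectures: pass its top. */
    pid_t pid = clone(child_func, stack + CHILD_STACK_SIZE,
                      SIGCHLD | CLONE_VM | CLONE_FS | CLONE_FILES | CLONE_SIGHAND,
                      &value);
    if (pid == -1) {
        perror("clone");
        free(stack);
        return -1;
    }
    /* SIGCHLD as the exit signal lets us reap the child with waitpid(). */
    if (waitpid(pid, NULL, 0) == -1) {
        perror("waitpid");
        free(stack);
        return -1;
    }
    free(stack);
    return shared_value;
}
```

Calling spawn_lwp_and_read(42) should return 42, since parent and child look at the same variable.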

The Unified Scheduler

The Linux CPU scheduler is a unified scheduler: it’s the same scheduler for all tasks in the system, a centralized orchestrator. Furthermore, it’s a preemptive scheduler, as opposed to a cooperative one. This means that it doesn’t wait for tasks to voluntarily give back the CPU they’ve been running on once they’re done. Instead, it forcibly interrupts them to leave the resource to other processes.

Since 6.12, with CONFIG_PREEMPT_RT enabled, this also applies to most of the kernel code that previously ran without interruption. That makes scheduling more predictable and deterministic (within acceptable limits). This is important for real-time scheduling, as we’ll see.

That being said, we also need to keep in mind that most systems today are multicore, which means that multiple tasks can run in parallel on separate processors. The scheduler decides which core each task runs on, and that’s not a random choice. It tries to make the best decision for performance based on several factors:

- a process’s “affinity” towards a CPU, which can be set programmatically (sched_setaffinity(2));
- cache affinity, that is, whether some data is already loaded in a particular CPU’s cache;
- load balancing;
- and other factors.

The scheduler is unified in the sense that all tasks pass through it, but that doesn’t mean it uses the same scheduling rules for all tasks. Actually, there is a split between two distinct scheduling concepts: fair scheduling and real-time scheduling policies.

These two types of scheduling differ in their end goal, what they’re optimizing for.

Fair scheduling is about adapting to dynamic situations in a way that prioritizes current activities and is fair to all tasks; it’s about overall system efficiency. We use the word “niceness” to describe how much a task lets other tasks take CPU resources, which makes it more or less favored by the scheduler.

Meanwhile, real-time scheduling is about ensuring tasks meet strict timing constraints and determinism, prioritizing timely and predictable execution over fairness. Each task is assigned a fixed portion of CPU bandwidth and monopolizes it for a granular allocated period. Hence, tasks have a guarantee of running for at least a certain amount of time. We use the word “priority” or “precedence”, along with CPU time, to describe which task comes before the next and how long it runs.

So how does the scheduler juggle between tasks that are scheduled fairly and other tasks that are scheduled in a real-time manner? Furthermore, what do we actually mean by those, what are the concrete policies in use?

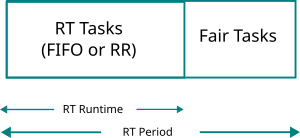

In general, real-time tasks are prioritized over fair ones. For a given amount of time, the unified scheduler lets real-time tasks use most of it, in the order of their priorities and precedence. Whenever no real-time task runs, the “other”, fair, tasks share the time that is left.

Here’s a concrete example from the docs:

Let’s consider an example: a frame fixed realtime renderer must deliver 25 frames a second, which yields a period of 0.04s per frame. Now say it will also have to play some music and respond to input, leaving it with around 80% CPU time dedicated for the graphics. We can then give this group a run time of 0.8 * 0.04s = 0.032s.

This way the graphics group will have a 0.04s period with a 0.032s run time limit. Now if the audio thread needs to refill the DMA buffer every 0.005s, but needs only about 3% CPU time to do so, it can do with a 0.03 * 0.005s = 0.00015s. So this group can be scheduled with a period of 0.005s and a run time of 0.00015s.

The remaining CPU time will be used for user input and other tasks. Because realtime tasks have explicitly allocated the CPU time they need to perform their tasks, buffer underruns in the graphics or audio can be eliminated.

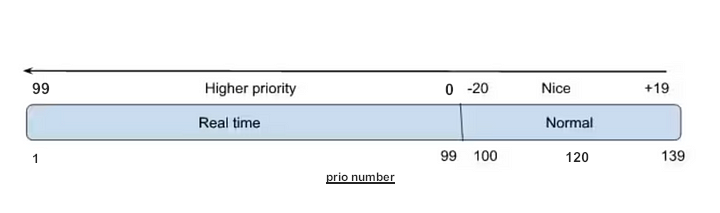

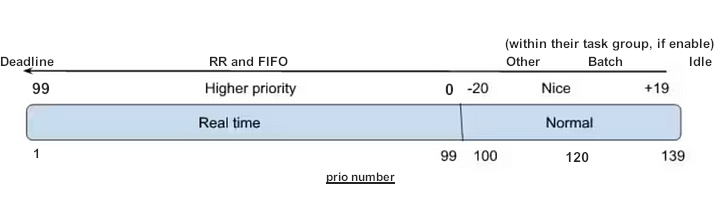

All tasks are assigned a priority (prio number), which, in general, decides how the scheduling category they fall into will handle them. The following picture will make more sense as we explain certain details; just keep it in mind for now.

You can check the current scheduling policy and priority of a process by taking a look at the /proc pseudo file system. For example:

> awk '/prio/ || /policy/' /proc/<PID>/sched
policy : 0
prio   : 120
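The same lookup can be done from C. This minimal sketch, with made-up function names, parses the “key : value” lines of /proc/<PID>/sched. It assumes the file exists, which depends on the kernel configuration, and returns an error when it doesn’t.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Finds a line "<key>   :   <value>" (the layout of /proc/<PID>/sched)
 * in `text` and stores the value in *out. The key must be followed by a
 * space or ':' so that "prio" does not match a longer field name.
 * Returns 0 on success, -1 if the key is not found. */
int parse_sched_field(const char *text, const char *key, long *out) {
    size_t key_len = strlen(key);
    const char *line = text;
    while (line != NULL && *line != '\0') {
        if (strncmp(line, key, key_len) == 0 &&
            (line[key_len] == ' ' || line[key_len] == ':')) {
            const char *colon = strchr(line, ':');
            const char *eol = strchr(line, '\n');
            if (colon != NULL && (eol == NULL || colon < eol)) {
                *out = strtol(colon + 1, NULL, 10);  /* skips leading blanks */
                return 0;
            }
        }
        line = strchr(line, '\n');
        if (line != NULL)
            line++;               /* move to the start of the next line */
    }
    return -1;
}

/* C version of the awk one-liner: fills *policy and *prio for `pid`.
 * Returns 0 on success, -1 if the file is missing or incomplete. */
int read_policy_and_prio(int pid, long *policy, long *prio) {
    char path[64];
    char buf[16384];
    snprintf(path, sizeof path, "/proc/%d/sched", pid);
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, sizeof buf - 1, f);
    fclose(f);
    buf[n] = '\0';
    if (parse_sched_field(buf, "policy", policy) != 0 ||
        parse_sched_field(buf, "prio", prio) != 0)
        return -1;
    return 0;
}
```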

The scheduling policy classes (CLS) currently available on Linux are as follows. We’ll explain each one separately and how they fit in the big picture.

- 0: SCHED_NORMAL or SCHED_OTHER or TS (default fair scheduling)
- 1: SCHED_FIFO or FF (first-in-first-out real-time scheduling)
- 2: SCHED_RR or RR (round-robin real-time scheduling)
- 3: SCHED_BATCH or BATCH (intended for non-interactive batch processes)
- 4: SCHED_ISO (reserved but not implemented yet)
- 5: SCHED_IDLE or IDL (scheduling very low priority processes)
- 6: SCHED_DEADLINE or DLN or SCHED_SPORADIC (sporadic task model real-time deadline scheduling)
- 7: SCHED_EXT (custom scheduler via eBPF)

Fair Policies

As of Linux 6.6, the algorithm behind the fair policies is called EEVDF, the Earliest Eligible Virtual Deadline First scheduling policy. It replaced the venerable CFS, the Completely Fair Scheduler, which had itself replaced the O(1) scheduler in 2.6.23.

There are three classes (CLS) of fair policies: normal/other, batch, and idle. They rely on the notion of niceness: the less nice a task is, the more EEVDF favors it. Furthermore, the policy manages tasks and adapts, depending on load and other factors, so that all fair tasks make fair progress in runtime. For example, if a task was denied the chance to run, it will be increasingly favored at the next scheduling decision.

By default, all tasks fall into the normal/other policy and have a neutral niceness of 0. The nice value of such tasks varies between -20, the highest priority, and +19, the lowest priority. The nice value can be modified using nice(2), setpriority(2), or sched_setattr(2).
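To get a feel for what niceness means in CPU time, here’s a simplified sketch. It assumes two always-runnable fair tasks on the same CPU, with no task groups involved. It uses the kernel’s nice-to-weight table (sched_prio_to_weight), in which each nice step is roughly a factor of 1.25. The sketch deliberately ignores the finer points of EEVDF such as lag and virtual deadlines, and the function names are hypothetical.

```c
/* Nice-to-weight values as in the kernel's sched_prio_to_weight table:
 * index 0 is nice -20, index 39 is nice +19. */
static const int nice_to_weight[40] = {
    /* -20 */ 88761, 71755, 56483, 46273, 36291,
    /* -15 */ 29154, 23254, 18705, 14949, 11916,
    /* -10 */  9548,  7620,  6100,  4904,  3906,
    /*  -5 */  3121,  2501,  1991,  1586,  1277,
    /*   0 */  1024,   820,   655,   526,   423,
    /*   5 */   335,   272,   215,   172,   137,
    /*  10 */   110,    87,    70,    56,    45,
    /*  15 */    36,    29,    23,    18,    15,
};

/* Load weight for a nice value, clamped to the valid [-20, 19] range. */
int weight_of_nice(int nice) {
    if (nice < -20)
        nice = -20;
    if (nice > 19)
        nice = 19;
    return nice_to_weight[nice + 20];
}

/* Fraction of CPU time (0..1) a task with nice `a` gets against one
 * competing task with nice `b`, under a simple proportional-share model. */
double cpu_share(int a, int b) {
    double wa = weight_of_nice(a);
    double wb = weight_of_nice(b);
    return wa / (wa + wb);
}
```

For instance, a nice 0 task competing with a nice 5 task gets about 1024 / (1024 + 335), i.e. roughly 75% of the CPU, while two nice 0 tasks get exactly half each.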

Batch scheduling is similar to normal scheduling and is also governed by the nice value and dynamic priorities. The difference is that the fair policy always considers batch tasks to be CPU-intensive and applies a small penalty to them on wakeup, so they’re mildly disfavored. This is mainly meant for workloads that are, as the name implies, batched and noninteractive. It can also be used to avoid extra preemptions between the tasks of a workload that wants more deterministic scheduling.

Finally, the idle policy is for extremely low priority jobs. They have a fixed, unchangeable niceness, effectively way above +19. “These are very nice tasks”, and they are also often used in conjunction with real-time policies to avoid conflicts.

Real-Time Policies

There are also three classes of real-time scheduling policies: fifo, the first-in first-out policy; rr, the round-robin policy; and deadline.

As we briefly mentioned, real-time tasks take precedence over fair ones and have stricter constraints. For fifo and rr, the ordering and precedence between tasks is based on their priority values, which range from 1 (low) to 99 (high). Deadline relies on other parameters, as we’ll see. Additionally, real-time tasks run for a granular amount of time within a period. Depending on the class, these values are either global or per-task. Whatever time within the period they don’t use, if any, is left for fair tasks. This mechanism prevents real-time tasks from starving everything else. Let’s also keep in mind that this doesn’t change the preemptiveness of Linux scheduling.

For fifo and rr classes, the runtime and periods are set globally via tunables in the proc pseudo filesystem. The period is often called the “granular full CPU bandwidth scheduling cycle”.

- /proc/sys/kernel/sched_rt_period_us
- /proc/sys/kernel/sched_rt_runtime_us

The values are in µs (microseconds). The period defaults to 1s and the runtime to 0.95s, which means that, left at their defaults, real-time tasks can use at most 95% of CPU time. The value for the period ranges from 1 to INT_MAX, and the value for the runtime from -1 to INT_MAX-1. A runtime of -1 makes it the same as the period, letting real-time tasks monopolize the full CPU bandwidth. There are other techniques to deal with runaway real-time processes, as we’ll see later in the “limits” section.

Here’s a visual explanation of this:

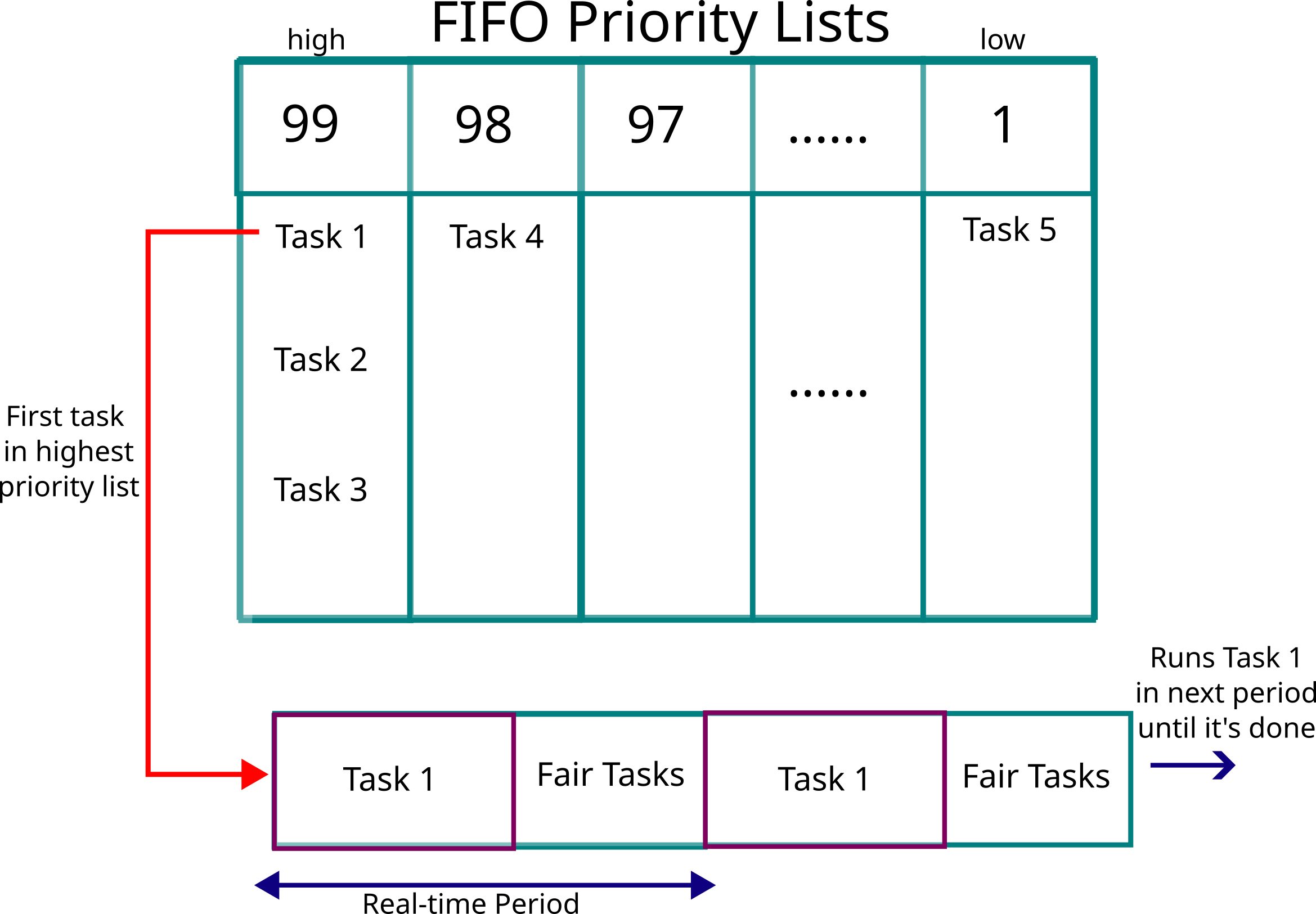
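Reading the two tunables from C is straightforward. This sketch, with hypothetical function names, computes the share of each period available to fifo and rr tasks. It treats a runtime of -1 as no throttling at all.

```c
#include <stdio.h>

/* Fraction of each period that fifo/rr tasks may use, given the two
 * tunables in microseconds. A runtime of -1 disables throttling (1.0). */
double rt_bandwidth_share(long period_us, long runtime_us) {
    if (runtime_us < 0)
        return 1.0;
    if (period_us <= 0)
        return 0.0;   /* invalid period; the kernel rejects it anyway */
    return (double)runtime_us / (double)period_us;
}

/* Reads a single integer from a /proc/sys file; returns 0 on success. */
static int read_long(const char *path, long *out) {
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    int ok = fscanf(f, "%ld", out) == 1;
    fclose(f);
    return ok ? 0 : -1;
}

/* Current real-time share of the system, or -1.0 if unreadable. */
double current_rt_share(void) {
    long period, runtime;
    if (read_long("/proc/sys/kernel/sched_rt_period_us", &period) != 0 ||
        read_long("/proc/sys/kernel/sched_rt_runtime_us", &runtime) != 0)
        return -1.0;
    return rt_bandwidth_share(period, runtime);
}
```

With the default values, current_rt_share() should return 0.95.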

The fifo (first-in first-out) class is a simple scheduling algorithm without any sort of time slicing; it works on a first-come, first-served basis. All real-time fifo tasks are categorized by their priority value: the higher the value, the more precedence a task has. In the case of fifo, each priority category is a list, and new tasks are appended to its end. The scheduler goes through the lists from higher to lower priority. Within each list, it runs the tasks one after the other, in the order in which they arrived.

Naturally, if the priority of a task is changed, it moves to the list of its new priority. It goes to the end of that list if its priority was raised, and to the front if it was lowered.

In general, a real-time task keeps running until one of three things happens:

- it relinquishes the CPU explicitly with sched_yield(2);
- it blocks, for instance on an I/O request;
- it gets preempted because a higher priority task needs to run.

That means a fifo task that never blocks will keep running for the whole real-time runtime of a period, and then resume execution at the next period. So be cautious: long-running, high-priority fifo tasks will monopolize the real-time runtime.

Here’s a visual explanation of the fifo scheduling class:

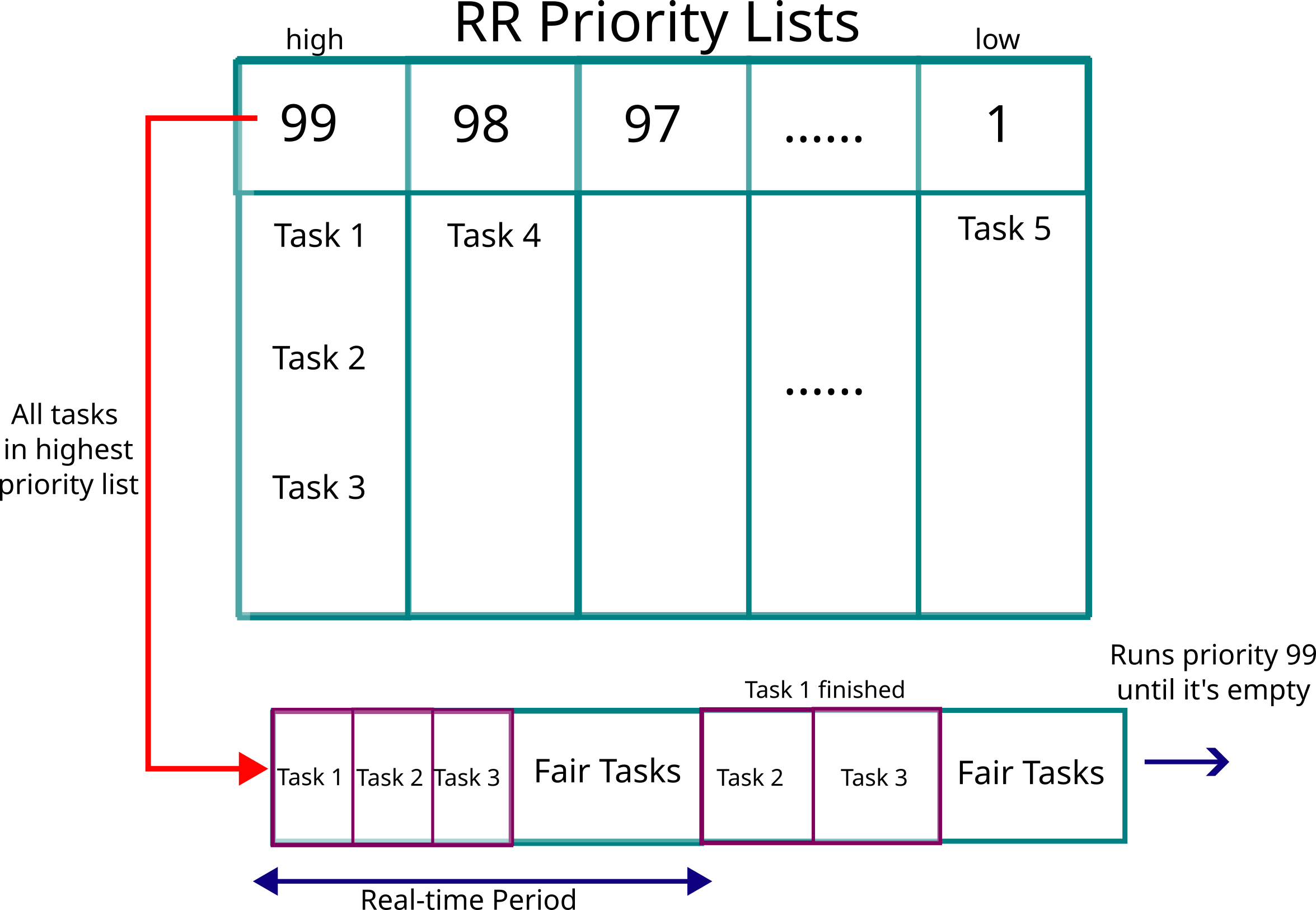
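Here’s a toy model of that fifo ordering; it is not kernel code. The struct and function are made up, and the tasks are assumed never to block. The input array is given in arrival order, and each priority list is rebuilt by scanning that array once per priority level.

```c
#include <stddef.h>

/* A runnable fifo task: a label and its static priority (1..99). */
struct rt_task {
    char name;
    int priority;
};

/* Writes into `order` the names of the tasks in the order a fifo
 * scheduler would run them if none of them blocks: highest priority list
 * first and, within a list, first come, first served. `tasks` must be in
 * arrival order; priorities outside 1..99 are ignored.
 * Returns the number of names written. */
size_t fifo_run_order(const struct rt_task *tasks, size_t n, char *order) {
    size_t written = 0;
    for (int prio = 99; prio >= 1; prio--) {      /* one list per priority */
        for (size_t i = 0; i < n; i++) {          /* arrival order */
            if (tasks[i].priority == prio)
                order[written++] = tasks[i].name;
        }
    }
    return written;
}
```

With tasks A (priority 10), B (50), C (10) and D (50) arriving in that order, the run order is B, D, A, C.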

The rr (round-robin) class is the same as fifo in most ways. The only difference is that tasks in the same priority list all receive the same CPU time, taking turns. So instead of waiting for the first task of the highest priority list to finish, in rr all tasks of the highest priority list take turns.

The rr algorithm hands out a quantum of the real-time runtime to all tasks of the same priority list, one after the other. In other words, it’s a circular queue: a task runs for a quantum of time and then leaves its place to the second task in the list, while the first task is put at the end of the list. The quantum, or time slice, is a fixed interval rather than one recomputed from the load. It defaults to 100 ms, can be tuned via /proc/sys/kernel/sched_rr_timeslice_ms, and can be queried for a given task with sched_rr_get_interval(2).

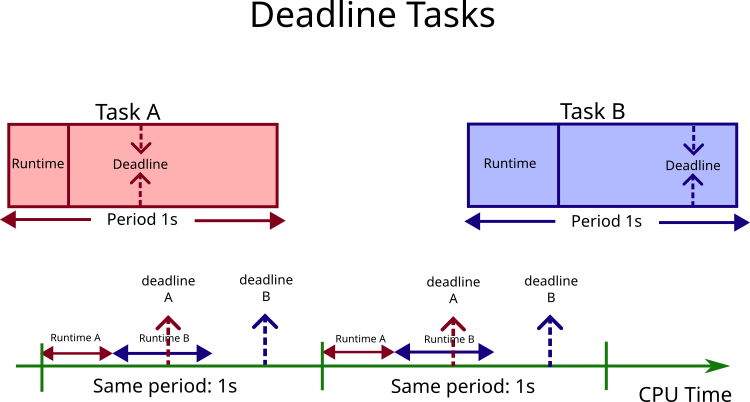
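The circular queue can be sketched in the same toy fashion. This simulation uses hypothetical names and counts in abstract quantum units rather than the real time slice. It runs each task of a single priority list for one quantum and sends the task back to the tail if it still has work left.

```c
#include <stddef.h>

#define MAX_RR_TASKS 16

/* Simulates one rr priority list. work[i] is how many quanta task i
 * needs and names[i] its label. The task at the head runs for one
 * quantum; if unfinished, it goes back to the tail of the circular queue.
 * Writes the names in the order they ran into `timeline` (which must be
 * large enough) and returns its length. Assumes n <= MAX_RR_TASKS. */
size_t rr_timeline(const char *names, const int *work, size_t n, char *timeline) {
    int remaining[MAX_RR_TASKS];
    size_t queue[MAX_RR_TASKS];
    size_t head = 0, count = 0, written = 0;
    for (size_t i = 0; i < n && i < MAX_RR_TASKS; i++) {
        remaining[i] = work[i];
        if (work[i] > 0)
            queue[count++] = i;
    }
    while (count > 0) {
        size_t t = queue[head];                   /* task at the head */
        head = (head + 1) % MAX_RR_TASKS;
        count--;
        timeline[written++] = names[t];           /* runs one quantum */
        if (--remaining[t] > 0) {
            queue[(head + count) % MAX_RR_TASKS] = t;   /* back to the tail */
            count++;
        }
    }
    return written;
}
```

With A, B and C needing 3, 1 and 2 quanta, the timeline is A B C A C A.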

The deadline scheduling class, unlike fifo and rr, relies neither on the global real-time runtime and period nor on priority values. It uses per-task parameters instead. The deadline algorithm is a mix of GEDF (Global Earliest Deadline First) and CBS (Constant Bandwidth Server).

The per-task parameters are as follows:

- Runtime (exec time, or budget): How long the task can run in each period.

- Period: The interval at which the task repeats.

- Deadline: The time, relative to the start of the period, by which the task must complete execution.

This all seems familiar apart from the deadline. This new parameter replaces the priority value: the earlier a task needs to finish running, the more it is prioritized.

This only makes sense when picturing multiple tasks running at the same time.

Imagine that the audio thread needs to run every 1s for 0.2s and finish no later than 0.6s into the period (its deadline); otherwise, there’s going to be a sound delay. Along with that, the graphics thread also runs every 1s, for 0.3s, but must finish no later than 0.4s; otherwise, frames will be missed. So, as each 1s period starts, the scheduler has to decide what to run first, the audio thread or the graphics thread. Its deadline comes first, so the graphics thread runs for 0.3s, followed by the audio thread for 0.2s. That makes a total of 0.5s, and the rest of the 1s period is left for other tasks.

Said another way: “I must finish execution by this time, otherwise my purpose is defeated.”
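The audio/graphics example boils down to earliest deadline first on a single CPU. The sketch below is a simplification with made-up names. Both jobs are released together at the start of the period, times are in milliseconds, and neither CBS nor multiple CPUs are modeled.

```c
#include <stdbool.h>
#include <stddef.h>

/* A deadline job released at time 0 of a shared period (milliseconds). */
struct dl_job {
    char name;
    int runtime_ms;   /* CPU time needed in this period */
    int deadline_ms;  /* relative deadline from the start of the period */
    int finish_ms;    /* filled in by edf_schedule() */
};

/* Orders the jobs by earliest deadline (stable insertion sort), runs
 * them back to back on one CPU, records each finish time, and returns
 * true if every job completes by its deadline. */
bool edf_schedule(struct dl_job *jobs, size_t n) {
    for (size_t i = 1; i < n; i++) {
        struct dl_job key = jobs[i];
        size_t j = i;
        while (j > 0 && jobs[j - 1].deadline_ms > key.deadline_ms) {
            jobs[j] = jobs[j - 1];
            j--;
        }
        jobs[j] = key;
    }
    int now = 0;
    bool all_met = true;
    for (size_t i = 0; i < n; i++) {
        now += jobs[i].runtime_ms;        /* run the job to completion */
        jobs[i].finish_ms = now;
        if (now > jobs[i].deadline_ms)
            all_met = false;
    }
    return all_met;
}
```

Given the audio job (200 ms, deadline 600 ms) and the graphics job (300 ms, deadline 400 ms), it runs graphics first, finishing at 300 ms. Audio follows and finishes at 500 ms, so both deadlines are met.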

For this reason, deadline scheduled tasks take priority over all other scheduling classes. It’s a class that enforces more predictability in execution time rather than just CPU limits.

One thing mentioned in the docs is that the period starts whenever the task wakes up, and that doesn’t necessarily coincide with when the task actually runs. Keep this in mind when choosing values for the parameters. Here’s a visualization of this:

arrival/wakeup                  absolute deadline
     |    start time                    |
     |        |                         |
     v        v                         v
-----x--------xooooooooooooooooo--------x--------x---
              |<-- Runtime ------->|
     |<----------- Deadline ----------->|
     |<-------------- Period ------------------->|

Furthermore, the values for the parameters of the deadline class are expressed in nanoseconds, but the current granularity doesn’t accept anything under 1024ns, that is, 1.024µs.

Obviously, the following also needs to stay true:

runtime <= deadline <= period
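A quick sanity check before calling sched_setattr(2) could look like the sketch below. The function name is hypothetical. It mirrors only the rules mentioned here, plus the kernel’s behavior of using the deadline as the period when sched_period is 0; it does not cover every check the kernel performs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Smallest runtime accepted for SCHED_DEADLINE, in nanoseconds. */
#define DL_MIN_RUNTIME_NS 1024ULL

/* Checks runtime <= deadline <= period and the 1024 ns floor.
 * All values are in nanoseconds; a period of 0 means "same as the
 * deadline". Returns true if the parameters pass these checks. */
bool dl_params_ok(uint64_t runtime, uint64_t deadline, uint64_t period) {
    if (period == 0)
        period = deadline;
    if (deadline == 0 || runtime < DL_MIN_RUNTIME_NS)
        return false;
    return runtime <= deadline && deadline <= period;
}
```

For example, a 10 ms runtime with a 30 ms deadline and a 100 ms period passes, while a 500 ns runtime is rejected.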

Here’s a visual explanation of the deadline scheduling class, with both tasks sharing the same period for simplicity:

Function Calls

We’ve mentioned a lot of things, but we didn’t say how they’re actually achieved. So let’s fill the gap and list a few boring functions used in the management of scheduling policies.

All the functions used to manage scheduling start with sched_*. Indeed, we’ve already seen sched_yield, which real-time tasks use to deliberately relinquish the processor when they’re done with their work.

The most prominent Linux system calls are sched_setattr(2) and sched_getattr(2); they can be used to do everything we mentioned and a bit more.

int syscall(SYS_sched_setattr, pid_t pid, struct sched_attr *attr,
            unsigned int flags);

int syscall(SYS_sched_getattr, pid_t pid, struct sched_attr *attr,
            unsigned int size, unsigned int flags);

They either get or set a sched_attr on a process. The flags argument is reserved for future extensions and must currently be 0. The structure looks like this:

struct sched_attr {
    u32 size;              /* Size of this structure */
    u32 sched_policy;      /* Policy (SCHED_*) */
    u64 sched_flags;       /* Flags */
    s32 sched_nice;        /* Nice value (SCHED_OTHER, SCHED_BATCH) */
    u32 sched_priority;    /* Static priority (SCHED_FIFO, SCHED_RR) */
    /* For SCHED_DEADLINE */
    u64 sched_runtime;
    u64 sched_deadline;
    u64 sched_period;
    /* Utilization hints */
    u32 sched_util_min;
    u32 sched_util_max;
};

There’s nothing truly magic about this structure that we haven’t mentioned; the policy is one of the classes:

- 0: SCHED_NORMAL or SCHED_OTHER or TS (default fair scheduling)
- 1: SCHED_FIFO or FF (first-in-first-out real-time scheduling)
- 2: SCHED_RR or RR (round-robin real-time scheduling)
- 3: SCHED_BATCH or BATCH (intended for non-interactive batch processes)
- 4: SCHED_ISO (reserved but not implemented yet)
- 5: SCHED_IDLE or IDL (scheduling very low priority processes)
- 6: SCHED_DEADLINE or DLN or SCHED_SPORADIC (sporadic task model real-time deadline scheduling)
- 7: SCHED_EXT (custom scheduler via eBPF)

We already talked about the values for nice, priority, runtime, deadline, and period too.

What we’re left wondering about are the possible sched_flags, and what sched_util_min and sched_util_max do. Here’s the explanation:

- SCHED_FLAG_RESET_ON_FORK: As the name implies, children will not inherit the scheduling policy of their parents. This avoids turning the whole system into a real-time mess.
- SCHED_FLAG_RECLAIM: For tasks using the deadline policy only; the task is allowed to reclaim bandwidth left unused by other deadline tasks.
- SCHED_FLAG_DL_OVERRUN: For tasks using the deadline policy only; a SIGXCPU signal is sent to the process whenever there’s an “overrun/overbudget”. This can be used for debugging purposes, to adjust the actual period and runtime of deadline tasks.
- SCHED_FLAG_UTIL_CLAMP_MIN and SCHED_FLAG_UTIL_CLAMP_MAX, along with the values in sched_util_min and sched_util_max: The values are only taken into account when the flags are set. They are hints telling the scheduler how the task will behave over time, so it can make decisions about CPU demands. This can be used to make sure a task always has enough CPU resources or, on the contrary, to set it aside like a batch task.
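Here’s a sketch of both system calls in action: it switches the calling thread from SCHED_OTHER to SCHED_BATCH and back. It declares its own copy of the structure under a made-up name, sched_attr_compat, so it can’t clash with headers that already define struct sched_attr. It also assumes sys/syscall.h provides SYS_sched_setattr and SYS_sched_getattr. Switching between these two fair policies at an unchanged nice value shouldn’t require any privilege.

```c
#include <stdint.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

#ifndef SCHED_OTHER
#define SCHED_OTHER 0            /* numbers from the policy list above */
#endif
#ifndef SCHED_BATCH
#define SCHED_BATCH 3
#endif

#define RESET_ON_FORK_FLAG 0x01ULL   /* value of SCHED_FLAG_RESET_ON_FORK */

/* Same layout as the kernel's struct sched_attr (56 bytes). */
struct sched_attr_compat {
    uint32_t size;
    uint32_t sched_policy;
    uint64_t sched_flags;
    int32_t  sched_nice;
    uint32_t sched_priority;
    uint64_t sched_runtime;
    uint64_t sched_deadline;
    uint64_t sched_period;
    uint32_t sched_util_min;
    uint32_t sched_util_max;
};

/* Policy number of the calling thread, or -1 on error. */
int get_own_policy(void) {
    struct sched_attr_compat attr;
    memset(&attr, 0, sizeof attr);
    if (syscall(SYS_sched_getattr, 0, &attr, (unsigned int)sizeof attr, 0) == -1)
        return -1;
    return (int)attr.sched_policy;
}

/* Moves the calling thread to a fair `policy` (SCHED_OTHER or
 * SCHED_BATCH), keeping its current nice value. Returns 0 or -1. */
int set_own_fair_policy(uint32_t policy) {
    struct sched_attr_compat attr;
    memset(&attr, 0, sizeof attr);
    if (syscall(SYS_sched_getattr, 0, &attr, (unsigned int)sizeof attr, 0) == -1)
        return -1;
    attr.size = sizeof attr;
    attr.sched_policy = policy;
    attr.sched_flags &= RESET_ON_FORK_FLAG;  /* keep only reset-on-fork */
    attr.sched_priority = 0;                 /* must be 0 for fair policies */
    attr.sched_runtime = 0;                  /* no deadline-style params */
    attr.sched_deadline = 0;
    attr.sched_period = 0;
    return syscall(SYS_sched_setattr, 0, &attr, 0) == -1 ? -1 : 0;
}
```

From a normal shell, get_own_policy() should report 0. After set_own_fair_policy(SCHED_BATCH) it should report 3, until you switch back.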

The other functions of the sched_* family are mostly there for POSIX compatibility and are redundant with sched_setattr/sched_getattr. However, it’s nice to know about sched_getaffinity and sched_setaffinity, which are used to give tasks a preference for particular cores (on the CLI, taskset(1)).

Here’s the list of other calls:

- sched_setscheduler, sched_getscheduler
- sched_setparam, sched_getparam
- sched_get_priority_max, sched_get_priority_min
- sched_getcpu
- sched_rr_get_interval
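As for affinity, here’s a sketch of what taskset(1) boils down to, with hypothetical function names. It reads the set of CPUs the calling thread may run on, then pins the thread to the CPU it’s currently running on.

```c
#define _GNU_SOURCE
#include <sched.h>

/* Number of CPUs the calling thread is allowed to run on, or -1. */
int allowed_cpu_count(void) {
    cpu_set_t set;
    CPU_ZERO(&set);
    if (sched_getaffinity(0, sizeof set, &set) == -1)
        return -1;
    return CPU_COUNT(&set);
}

/* Pins the calling thread to the CPU it is currently running on, much
 * like `taskset -pc <cpu> <pid>` would. Returns that CPU, or -1. */
int pin_to_current_cpu(void) {
    int cpu = sched_getcpu();
    if (cpu < 0)
        return -1;
    cpu_set_t set;
    CPU_ZERO(&set);
    CPU_SET(cpu, &set);
    if (sched_setaffinity(0, sizeof set, &set) == -1)
        return -1;
    return cpu;
}
```

After pin_to_current_cpu() succeeds, allowed_cpu_count() should drop to 1.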

Limits and Task Groups

We’ve seen that there are global settings for the rr and fifo real-time scheduling classes. However, there are other global toggles to help us limit, or put barriers on, how scheduling happens.

There are two main ways to put limits on scheduling: through cgroups, and through setrlimit(2) and its accompanying family of tools.

setrlimit, getrlimit, and prlimit (for running processes) are functions used to control maximum resource usage. Several things rely on them: the shell built-in ulimit, the CLI tool prlimit(1), the pam_limits module (configured in /etc/security/limits.conf), systemd directives, and a couple of others. A particularity of resource limits is that they’re inherited by child processes. A process can also change its own limit (soft) up to a ceiling (hard), which gives it some wiggle room.

Let’s go over the limits that are of interest to us:

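- RLIMIT_NICE and RLIMIT_RTPRIO: The ceiling for the niceness of fair tasks (20 - limit), and for the priority of rr or fifo real-time tasks.
- RLIMIT_CPU: The maximum CPU time, in seconds, that a process can consume. When it’s exceeded, a SIGXCPU signal is sent to the process, and it’s up to the process to choose how to handle it. If the process keeps running, it receives SIGXCPU again every second until the hard limit is reached, at which point it gets SIGKILL.
- RLIMIT_RTTIME: Similar to RLIMIT_CPU, this is the upper limit, in microseconds, on the CPU time a process under a real-time scheduling policy can consume without making a blocking system call. Reaching it sends SIGXCPU, repeated every second until the hard limit, where SIGKILL follows.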
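To see where you stand, this sketch (hypothetical names) prints the soft limits relevant to scheduling. It also converts RLIMIT_NICE into the lowest nice value you may set, following the 20 - limit rule.

```c
#include <stdio.h>
#include <sys/resource.h>

/* Lowest nice value an unprivileged process may set, given its
 * RLIMIT_NICE soft limit: 20 - limit. A limit of 0 (a common default)
 * yields 20, i.e. no lowering at all; 40 or more allows -20. */
int lowest_allowed_nice(rlim_t nice_limit) {
    if (nice_limit == RLIM_INFINITY || nice_limit >= 40)
        return -20;
    return 20 - (int)nice_limit;
}

/* Prints the scheduling-related soft limits of the calling process. */
void print_sched_limits(void) {
    struct rlimit rl;
    if (getrlimit(RLIMIT_NICE, &rl) == 0)
        printf("RLIMIT_NICE   soft=%llu (nice can go down to %d)\n",
               (unsigned long long)rl.rlim_cur, lowest_allowed_nice(rl.rlim_cur));
    if (getrlimit(RLIMIT_RTPRIO, &rl) == 0)
        printf("RLIMIT_RTPRIO soft=%llu (max fifo/rr priority)\n",
               (unsigned long long)rl.rlim_cur);
    if (getrlimit(RLIMIT_RTTIME, &rl) == 0) {
        if (rl.rlim_cur == RLIM_INFINITY)
            printf("RLIMIT_RTTIME soft=unlimited\n");
        else
            printf("RLIMIT_RTTIME soft=%llu us\n", (unsigned long long)rl.rlim_cur);
    }
}
```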

The cgroupfs, being the core tech behind containers and omnipresent with systemd, is an obvious way to limit resources. In cgroups-v2, it has toggles such as cpu.weight.nice, cpu.idle, cpu.weight, and cpu.max.

Yet, one extremely important thing to note: fair scheduling niceness works differently if the kernel has autogrouping (CONFIG_SCHED_AUTOGROUP) and CONFIG_FAIR_GROUP_SCHED enabled, which it probably has if systemd is installed. In that case, the position of a task within its cgroup task group is what actually influences scheduling precedence. The scheduler balances CPU time based on this hierarchical task group structure and on the weight/niceness of siblings. Niceness no longer has a global meaning, only a meaning local to the task group.

This highly depends on your distro and system, and might make niceness completely irrelevant, since the group hierarchy takes precedence.

Under group scheduling, a thread’s nice value has an effect for scheduling decisions only relative to other threads in the same task group. This has some surprising consequences in terms of the traditional semantics of the nice value on UNIX systems. In particular, if autogrouping is enabled (which is the default in various distributions), then employing setpriority(2) or nice(1) on a process has an effect only for scheduling relative to other processes executed in the same session (typically: the same terminal window).

This means that all the threads in a CPU cgroup form a task group, provided the cgroup has the cpu controller enabled, which is more or less the default today with systemd (see cgroup.controllers and cgroup.subtree_control). The parent of a cgroup task group is the closest ancestor cgroup that has the cpu controller enabled, which is probably just the corresponding parent cgroup. This creates a hierarchy.

Technically, the autogroup feature means that on each new session (setsid(2)), a new autogroup is created, identified by a unique name generated by the kernel. On some systems, that might mean every time a new terminal window is opened. Keep in mind that autogroups are temporary, created and managed dynamically by the kernel, while cgroups are managed explicitly. A process’s autogroup is displayed via /proc/<PID>/autogroup. Once the autogroup is created, all tasks in the terminal fall into the same task group, which is treated as a single task with its own weight. However, autogroups are only considered for tasks that fall under the root cpu control group; they aren’t even looked at for anything below it. To complicate things, this file can also be written to with a new nice value, which overrides the niceness of the whole autogroup.

> echo 10 > /proc/self/autogroup

And here’s the note indicating that autogroups are only valid under the root CPU controller cgroup.

The use of the cgroups(7) CPU controller to place processes in cgroups other than the root CPU cgroup overrides the effect of autogrouping.

This feature can be toggled via /proc/sys/kernel/sched_autogroup_enabled, or by passing the noautogroup kernel parameter at boot.
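Reading that file programmatically only takes an sscanf(). In this sketch the function names are hypothetical. It assumes the “/autogroup-<id> nice <n>” format that the grep output below shows, and a kernel built with autogrouping, since otherwise the file doesn’t exist.

```c
#include <stdio.h>

/* Parses a /proc/<PID>/autogroup line such as "/autogroup-87 nice 0".
 * Returns 0 and fills *id and *nice_out on success, -1 otherwise. */
int parse_autogroup(const char *line, long *id, int *nice_out) {
    return sscanf(line, "/autogroup-%ld nice %d", id, nice_out) == 2 ? 0 : -1;
}

/* Reads the calling process's autogroup; returns -1 if the file is
 * missing (no autogroup support) or cannot be parsed. */
int read_own_autogroup(long *id, int *nice_out) {
    char line[128];
    FILE *f = fopen("/proc/self/autogroup", "r");
    if (f == NULL)
        return -1;
    char *ok = fgets(line, sizeof line, f);
    fclose(f);
    return ok != NULL ? parse_autogroup(line, id, nice_out) : -1;
}
```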

This will surprise you:

> grep -H . /proc/*/autogroup 2>/dev/null
/proc/1004/autogroup:/autogroup-86 nice 0
/proc/1007/autogroup:/autogroup-87 nice 0
/proc/1013/autogroup:/autogroup-87 nice 0
/proc/1014/autogroup:/autogroup-87 nice 0
/proc/1018/autogroup:/autogroup-88 nice 0
/proc/1022/autogroup:/autogroup-89 nice 0
/proc/1028/autogroup:/autogroup-89 nice 0
/proc/1038/autogroup:/autogroup-90 nice 0
/proc/1055/autogroup:/autogroup-85 nice 0

Hence, processes 1007, 1013, and 1014 are part of the same autogroup-87. Yet, this whole autogroup falls within the cgroup hierarchy of session.slice, which sits under user@1000.service, then user-1000.slice, and finally user.slice. Since it’s not under the root cpu cgroup, the autogroup is ignored.

In reality, each task is balanced according to its cgroup cpu controller weight (cpu.weight), equal by default. Each node of the tree gets a part of the CPU pie in turn: every node is a scheduling entity that receives its share of the distribution.

The “cpu” controllers regulates distribution of CPU cycles. This controller implements weight and absolute bandwidth limit models for normal scheduling policy and absolute bandwidth allocation model for realtime scheduling policy.

Take a look at the cgroup hierarchy.

> systemd-cgls
CGroup: /user.slice
└─user-1000.slice
  ├─session-3.scope
  ... # other
  └─user@1000.service
    ├─app.slice
    ... # other
    ├─session.slice
    │ ├─at-spi-dbus-bus.service # all under autogroup-87
    │ │ ├─1007 /usr/lib/at-spi-bus-launcher
    │ │ ├─1013 /usr/bin/dbus-broker-launch ..
    │ │ └─1014 dbus-broker --log 4 -...
    │ ├─dbus-broker.service
    │ │ ├─755 /usr/bin/dbus-broker-launch --scope user
    │ │ └─766 dbus-broker --log 4 --...
    │ ├─dunst.service
    │ │ └─1069 /usr/bin/dunst
    ... # other

You can check your process’s cgroup via /proc/<PID>/cgroup. For example, in zsh (credit Megame50):

> grep -H $ /sys/fs/cgroup/${$(</proc/<PID>/cgroup)#0::/}/(../)##cpu.weight.nice(Od:a)

/sys/fs/cgroup/user.slice/cpu.weight.nice:0
/sys/fs/cgroup/user.slice/user-1000.slice/cpu.weight.nice:0
/sys/fs/cgroup/user.slice/user-1000.slice/user@1000.service/cpu.weight.nice:0
/sys/fs/cgroup/user.slice/user-1000.slice/user@1000.service/session.slice/cpu.weight.nice:0
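Without zsh, the same walk up the hierarchy can be sketched in C. It extracts the unified (cgroup v2) path from /proc/<PID>/cgroup, then reads cpu.weight.nice in that cgroup and in each of its ancestors. The sketch assumes cgroup v2 is mounted at /sys/fs/cgroup, and the function names are made up. Unlike the one-liner, it prints from the leaf upward.

```c
#include <stdio.h>
#include <string.h>

/* Extracts the cgroup v2 path from /proc/<PID>/cgroup content, whose
 * unified-hierarchy line looks like "0::/user.slice/...". Copies the path
 * into `out`; returns 0 on success, -1 if absent or too long. */
int cgroup_v2_path(const char *content, char *out, size_t out_len) {
    const char *line = content;
    while (line != NULL && *line != '\0') {
        if (strncmp(line, "0::", 3) == 0) {
            const char *start = line + 3;
            size_t len = strcspn(start, "\n");
            if (len + 1 > out_len)
                return -1;
            memcpy(out, start, len);
            out[len] = '\0';
            return 0;
        }
        line = strchr(line, '\n');
        if (line != NULL)
            line++;
    }
    return -1;
}

/* Prints cpu.weight.nice for the cgroup and each ancestor, leaf first.
 * Cgroups without the file (such as the root) are skipped. */
void print_weight_nice_chain(const char *cgroup_path) {
    char dir[4096];
    snprintf(dir, sizeof dir, "/sys/fs/cgroup%s", cgroup_path);
    for (;;) {
        char file[4200];
        snprintf(file, sizeof file, "%s/cpu.weight.nice", dir);
        FILE *f = fopen(file, "r");
        if (f != NULL) {
            int value;
            if (fscanf(f, "%d", &value) == 1)
                printf("%s:%d\n", file, value);
            fclose(f);
        }
        char *slash = strrchr(dir, '/');
        if (slash == NULL || strcmp(dir, "/sys/fs/cgroup") == 0)
            break;
        *slash = '\0';   /* move one level up */
    }
}
```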

In my opinion, this defeats the purpose of fair scheduling. It’s the position in the cgroup hierarchy, and the weight (cpu.weight) between siblings, that take over from niceness: the higher the weight, the more precedence a group has.

Still, it might make sense from a desktop perspective, but it’s highly confusing.

A global view

Now that we have a good grasp of what fair and real-time scheduling options there are on Linux, let’s review the previous diagram and add more details to it.

The calculation of this generic prio number, or PR, goes something like this:

- A process with NI (nice) = -5 has PR = 100 + 20 + (−5) = 115
- A process with NI = 10 has PR = 100 + 20 + 10 = 130
- A real-time process with a priority of 30 has PR = 100 − 1 − 30 = 69
- A real-time process with a priority of 99 has PR = 100 − 1 − 99 = 0
- A deadline process has PR = −1
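The prio computation fits in a few lines of code. This sketch uses hypothetical names, with policy numbers taken from the list of classes earlier, and computes the kernel-side prio. For fifo and rr, the kernel uses MAX_RT_PRIO − 1 − rt_priority, i.e. 99 − rt_priority. That is why migration/0, at FF 99, shows a BAZ of 0 in the ps output below.

```c
/* Policy numbers as listed earlier in the article. */
#define POLICY_OTHER    0
#define POLICY_FIFO     1
#define POLICY_RR       2
#define POLICY_BATCH    3
#define POLICY_IDLE     5
#define POLICY_DEADLINE 6

/* Kernel-side prio (as in /proc/<PID>/sched or ps's pri_baz):
 * deadline tasks sit at -1, fifo/rr tasks at 99 - rt_priority,
 * and fair tasks at 120 + nice. */
int kernel_prio(int policy, int nice, int rt_priority) {
    switch (policy) {
    case POLICY_DEADLINE:
        return -1;
    case POLICY_FIFO:
    case POLICY_RR:
        return 99 - rt_priority;
    default:                    /* SCHED_OTHER, SCHED_BATCH, SCHED_IDLE */
        return 120 + nice;
    }
}
```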

The ps tool has so many knobs to inspect different priority numbers. For example, you can use the following:

"priority" -- (was -20..20, now -100..39)
"intpri" and "opri" (was 39..79, now -40..99)
"pri_foo" -- match up w/ nice values of sleeping processes (-120..19)
"pri_bar" -- makes RT pri show as negative (-99..40)
"pri_baz" -- the kernel's ->prio value, as of Linux 2.6.8 (1..140)
"pri" (was 20..60, now 0..139)
"pri_api" -- match up w/ RT API (-40..99)

> ps -e -L -o pid,class,rtprio,nice,pri,priority,opri,pri_api,pri_bar,pri_baz,pri_foo,comm
  PID CLS RTPRIO  NI PRI  PRI PRI API BAR BAZ  FOO COMMAND
    1 TS       -   0  19   20  80 -21  21 120    0 systemd
    2 TS       -   0  19   20  80 -21  21 120    0 kthreadd
    3 TS       -   0  19   20  80 -21  21 120    0 pool_workqueue_release
    4 TS       - -20  39    0  60  -1   1 100  -20 kworker/R-rcu_gp
    5 TS       - -20  39    0  60  -1   1 100  -20 kworker/R-sync_wq
    6 TS       - -20  39    0  60  -1   1 100  -20 kworker/R-slub_flushwq
    7 TS       - -20  39    0  60  -1   1 100  -20 kworker/R-netns
   22 FF      99   - 139 -100 -40  99 -99   0 -120 migration/0

Practical Application

CLI

There are two sets of tools to manage scheduling properties on the command line: nice/renice and chrt. Both are pretty straightforward.

The command nice is used to launch an executable with a certain niceness, and renice is used to modify the niceness of an already running one. As we’ve seen, though, this might only apply within a task group, depending on the machine’s setup. Here’s an example of a bad sleep:

> nice -n -10 sleep 1000
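The C side of nice/renice applied to yourself is getpriority(2)/setpriority(2). This sketch, with made-up function names, makes the calling thread nicer, which never requires privileges. Keep the task group caveat in mind: the new value may only matter relative to the thread’s own group.

```c
#include <errno.h>
#include <sys/resource.h>

/* Reads the calling thread's nice value. getpriority() can legitimately
 * return -1, so errno is cleared first to tell that apart from an error.
 * Returns 0 on success, -1 on error. */
int get_own_nice(int *out) {
    errno = 0;
    int value = getpriority(PRIO_PROCESS, 0);
    if (value == -1 && errno != 0)
        return -1;
    *out = value;
    return 0;
}

/* Raises the calling thread's nice value by `delta` (capped at +19).
 * Increasing niceness is always allowed; lowering it would need
 * RLIMIT_NICE or CAP_SYS_NICE. Stores the new value in *new_nice. */
int be_nicer(int delta, int *new_nice) {
    int current;
    if (delta < 0 || get_own_nice(&current) != 0)
        return -1;
    int target = current + delta > 19 ? 19 : current + delta;
    if (setpriority(PRIO_PROCESS, 0, target) == -1)
        return -1;
    *new_nice = target;
    return 0;
}
```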

In the case of a task group scenario, there doesn’t seem to exist

any tool to change the weight or niceness globally. A tool called

reallynice has been written, but

it misunderstood the docs and only changes the niceness of an autogroup,

which doesn’t solve the issue, since the whole autogroup is only nice

vis-à-vis sibblings in the task group and only on the root cgroup.

Hence, someone needs to rewrite the nice utility instead of letting it become obsolete. To achieve this, they’ll have to:

- rely on /sys/fs/cgroup/.../cgroup.stat and count all the nr_descendants in it;
- get the cpu.weight of each of them and average it, which implies checking that the cpu controller is enabled;
- finally, write to cgroup.procs to move a task to another cgroup (with the cpu controller enabled, obviously), or change its cpu.weight, or the backward-compatible cpu.weight.nice, accordingly.

This tool should also check autogroups for the cpu cgroup.
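Here is a minimal sketch of the cgroup-side plumbing such a tool could build on, assuming a cgroup v2 hierarchy. The helpers are hypothetical. The "average" is taken over the direct children’s cpu.weight, which is only one possible reading of the steps above. Autogroups are not handled.

```python
from __future__ import annotations

from pathlib import Path


def read_flat_keyed(path: Path) -> dict[str, int]:
    """Parse cgroup v2 'flat keyed' files such as cgroup.stat ("key value" lines)."""
    values = {}
    for line in path.read_text().splitlines():
        key, _, value = line.partition(" ")
        if key:
            values[key] = int(value)
    return values


def nr_descendants(cgroup: Path) -> int:
    return read_flat_keyed(cgroup / "cgroup.stat")["nr_descendants"]


def cpu_enabled_for_children(cgroup: Path) -> bool:
    # Children only get cpu.weight files if "cpu" is in the parent's subtree_control.
    return "cpu" in (cgroup / "cgroup.subtree_control").read_text().split()


def child_weights(cgroup: Path) -> dict[str, int]:
    """cpu.weight of every direct child cgroup that has one."""
    return {
        child.name: int((child / "cpu.weight").read_text())
        for child in sorted(cgroup.iterdir())
        if child.is_dir() and (child / "cpu.weight").exists()
    }


def average_weight(cgroup: Path) -> float | None:
    weights = list(child_weights(cgroup).values())
    return sum(weights) / len(weights) if weights else None


def move_to_cgroup(pid: int, cgroup: Path) -> None:
    """Migrate a process by writing its PID to cgroup.procs (needs write access)."""
    (cgroup / "cgroup.procs").write_text(f"{pid}\n")
```

For instance, average_weight(Path("/sys/fs/cgroup/user.slice")) would give the mean weight of the user slices on a typical systemd machine, assuming the cpu controller is enabled there.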

Moreover, someone should also write a tool to actually display the task group, since it might not fully match the cgroup hierarchy.

Similar to nice, chrt is used to set all the other scheduling attributes we’ve seen, on both newly executed processes and already running ones. The exception is processor affinity, which is handled by taskset(1). Furthermore, chrt can also retrieve scheduling classes and flags.

Here’s a listing of the attributes of all the LWPs under PID 1183:

> chrt -a -p 1183

pid 1183's current scheduling policy: SCHED_OTHER

pid 1183's current scheduling priority: 0

pid 1184's current scheduling policy: SCHED_OTHER

pid 1184's current scheduling priority: 0

pid 1185's current scheduling policy: SCHED_RR|SCHED_RESET_ON_FORK

pid 1185's current scheduling priority: 99
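The same listing can be reproduced with Python’s os module. This sketch walks /proc/PID/task and queries each LWP with os.sched_getscheduler and os.sched_getparam. The kernel reports SCHED_RESET_ON_FORK ORed into the returned policy, so the flag is masked out before the policy is named.

```python
import os

POLICY_NAMES = {
    os.SCHED_OTHER: "SCHED_OTHER",
    os.SCHED_FIFO: "SCHED_FIFO",
    os.SCHED_RR: "SCHED_RR",
    os.SCHED_BATCH: "SCHED_BATCH",
    os.SCHED_IDLE: "SCHED_IDLE",
}


def policy_name(raw: int) -> str:
    """Render a sched_getscheduler() result, including SCHED_RESET_ON_FORK."""
    base = raw & ~os.SCHED_RESET_ON_FORK
    name = POLICY_NAMES.get(base, f"policy {base}")
    if raw & os.SCHED_RESET_ON_FORK:
        name += "|SCHED_RESET_ON_FORK"
    return name


def describe_threads(pid: int) -> list[str]:
    """Roughly `chrt -a -p PID`: policy and priority of every thread (LWP)."""
    lines = []
    for tid in sorted(int(t) for t in os.listdir(f"/proc/{pid}/task")):
        try:
            raw = os.sched_getscheduler(tid)
            prio = os.sched_getparam(tid).sched_priority
        except ProcessLookupError:
            continue  # the thread exited while we were iterating
        lines.append(f"pid {tid}'s current scheduling policy: {policy_name(raw)}")
        lines.append(f"pid {tid}'s current scheduling priority: {prio}")
    return lines


if __name__ == "__main__":
    print("\n".join(describe_threads(os.getpid())))
```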

And a listing of the minimum and maximum values (which isn’t very useful):

> chrt -m

SCHED_OTHER min/max priority : 0/0

SCHED_FIFO min/max priority : 1/99

SCHED_RR min/max priority : 1/99

SCHED_BATCH min/max priority : 0/0

SCHED_IDLE min/max priority : 0/0

SCHED_DEADLINE min/max priority : 0/0
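The chrt -m table comes straight from sched_get_priority_min(2) and sched_get_priority_max(2), which Python exposes directly. A sketch:

```python
import os

# SCHED_DEADLINE is left out: it has no static priority and can only be set
# through sched_setattr(2), which, as far as I know, the os module doesn't wrap.
POLICIES = {
    "SCHED_OTHER": os.SCHED_OTHER,
    "SCHED_FIFO": os.SCHED_FIFO,
    "SCHED_RR": os.SCHED_RR,
    "SCHED_BATCH": os.SCHED_BATCH,
    "SCHED_IDLE": os.SCHED_IDLE,
}


def priority_ranges() -> dict[str, tuple[int, int]]:
    """Roughly `chrt -m`: min/max static priority per policy."""
    return {
        name: (os.sched_get_priority_min(policy), os.sched_get_priority_max(policy))
        for name, policy in POLICIES.items()
    }


if __name__ == "__main__":
    for name, (low, high) in priority_ranges().items():
        print(f"{name} min/max priority : {low}/{high}")
```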

You can start an idle sleep within its task group, an even sweeter sleep. The 0 here is the priority, which SCHED_IDLE doesn’t use:

> chrt -i 0 sleep 1000

Or change the scheduling of a running task to real-time FIFO with priority 30:

> chrt -f -p 30 PID
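Both chrt invocations boil down to sched_setscheduler(2). This Python sketch, with hypothetical helper names, launches a child under a given policy and changes the policy of a running thread. Per sched(7), the privilege rules differ by direction:

- An unprivileged task can move from SCHED_OTHER to SCHED_BATCH or SCHED_IDLE.
- Leaving SCHED_IDLE is subject to RLIMIT_NICE.
- The FIFO example needs CAP_SYS_NICE or a sufficient RLIMIT_RTPRIO.

```python
import os
import subprocess


def spawn_with_policy(argv: list[str], policy: int, priority: int = 0) -> subprocess.Popen:
    """Roughly `chrt --<policy> PRIORITY argv...`, e.g. SCHED_IDLE for `chrt -i 0`."""
    param = os.sched_param(priority)
    return subprocess.Popen(
        argv, preexec_fn=lambda: os.sched_setscheduler(0, policy, param)
    )


def set_policy(tid: int, policy: int, priority: int = 0) -> None:
    """Roughly `chrt --<policy> -p PRIORITY TID` (a single thread, no -a)."""
    os.sched_setscheduler(tid, policy, os.sched_param(priority))


if __name__ == "__main__":
    idle_sleep = spawn_with_policy(["sleep", "1000"], os.SCHED_IDLE)  # chrt -i 0 sleep 1000
    # set_policy(some_pid, os.SCHED_FIFO, 30) would mirror `chrt -f -p 30 PID`
    # (some_pid is a placeholder); it needs CAP_SYS_NICE or RLIMIT_RTPRIO >= 30.
    idle_sleep.kill()
    idle_sleep.wait()
```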

Just keep in mind that, by default, a normal user won’t be able to do whatever they want when it comes to changing these values. They need to get their limits raised via the setrlimit(2) resource limits we’ve seen, such as RLIMIT_RTPRIO and RLIMIT_NICE. Alternatively, they need the CAP_SYS_NICE capability, which, contrary to its name, allows changing all types of scheduling properties and not only niceness.

The simplest way to get permission is usually through PAM, via pam_limits. It can be done on a per-user basis, but often it’s as simple as adding users to the realtime group. Some distros ship the file /etc/security/limits.d/99-realtime-privileges.conf, which instantly grants members of that group access to real-time scheduling:

@realtime - rtprio 98

@realtime - memlock unlimited

@realtime - nice -11
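To make those entries concrete, here is a small sketch that parses limits.conf lines and converts the values into the numbers stored in the rlimits. It makes two assumptions:

- pam_limits applies setrlimit(2)’s 20 - rlim_cur rule for nice;
- values use the KiB units documented in limits.conf(5).

Under those assumptions, nice -11 becomes an RLIMIT_NICE of 31, which lines up with the “max nice 31” that ulimit reports later in this article. The helper names are hypothetical.

```python
import resource

# Items that limits.conf(5) expresses in KiB rather than in raw units.
KIB_ITEMS = {"core", "data", "fsize", "memlock", "rss", "stack", "as"}


def parse_limits(text: str) -> list[tuple[str, str, str, str]]:
    """Split limits.conf lines into (domain, type, item, value), skipping comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if line:
            domain, kind, item, value = line.split()
            entries.append((domain, kind, item, value))
    return entries


def to_rlimit(item: str, value: str) -> int:
    """Translate a limits.conf value into the number stored in the rlimit."""
    unlimited = value in ("unlimited", "infinity") or (
        value == "-1" and item not in ("nice", "priority")
    )
    if unlimited:
        return resource.RLIM_INFINITY
    number = int(value)
    if item == "nice":
        return 20 - number  # RLIMIT_NICE ceiling, per setrlimit(2): nice = 20 - rlim_cur
    if item in KIB_ITEMS:
        return number * 1024
    return number
```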

Unfortunately, pam_limits is still missing an “rttime” item (I’ve opened a bug report). You can still set it in other ways:

- programmatically;
- on the command line, with ulimit or prlimit;
- per service, with the systemd directive LimitRTTIME (see systemd.directives(7) and systemd.exec(5)).
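Programmatically, RLIMIT_RTTIME can be read and set on another process through prlimit(2), which Python wraps as resource.prlimit. The helper names in this sketch are hypothetical. Lowering a limit needs no privilege, but the target process must belong to the same user.

```python
import resource


def get_rttime(pid: int = 0) -> tuple[int, int]:
    """(soft, hard) RLIMIT_RTTIME in microseconds; pid 0 means ourselves."""
    return resource.prlimit(pid, resource.RLIMIT_RTTIME)


def set_rttime(pid: int, usec: int) -> None:
    """Roughly `prlimit --rttime=USEC --pid PID`: set both soft and hard limits."""
    resource.prlimit(pid, resource.RLIMIT_RTTIME, (usec, usec))
```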

RealtimeKit

It’s time to get back to what initially started this article: RealtimeKit, originally developed here but now maintained here.

RealtimeKit, or rtkit, is a D-Bus service that provides an interface allowing normal, unprivileged users to request that their tasks be made either real-time or high-priority (low niceness). It tries to do this as safely as possible.

It avoids resource starvation and freezes through several nifty ideas:

- The only real-time policy it sets is SCHED_RR, always together with the SCHED_RESET_ON_FORK flag.
- It only grants real-time priority to tasks that have the RLIMIT_RTTIME limit set.
- It enforces a per-user limit on the number of real-time tasks at a time.
- It enforces a maximum allowed priority.
- It caps how many requests are accepted within a specific time frame (burst).
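To make these rules concrete, here is a hypothetical Python model of the admission checks for a real-time request. It is not rtkit’s code. The values come from different places:

- RTTimeUSecMax (200000) and MaxRealtimePriority (20) are what this machine reports in the introspection output further down.
- The per-user thread cap and the burst window are placeholder numbers.
- Accepting a limit exactly equal to the maximum is also an assumption.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class AdmissionPolicy:
    """Hypothetical model of rtkit-style checks for MakeThreadRealtime requests."""

    rttime_usec_max: int = 200_000    # RTTimeUSecMax as introspected on this machine
    max_rt_priority: int = 20         # MaxRealtimePriority as introspected
    threads_per_user_max: int = 25    # placeholder value
    burst_sec: float = 20.0           # placeholder value
    actions_per_burst_max: int = 25   # placeholder value
    _rt_threads: dict[int, int] = field(default_factory=dict, init=False, repr=False)
    _history: dict[int, deque] = field(default_factory=dict, init=False, repr=False)

    def check_rt(self, uid: int, rttime_usec: int | None, priority: int, now: float) -> bool:
        """Return True if the request would be granted (None = unlimited RLIMIT_RTTIME)."""
        if rttime_usec is None or rttime_usec > self.rttime_usec_max:
            return False  # the process must carry an RLIMIT_RTTIME within the cap
        if not 1 <= priority <= self.max_rt_priority:
            return False
        if self._rt_threads.get(uid, 0) >= self.threads_per_user_max:
            return False
        recent = self._history.setdefault(uid, deque())
        while recent and now - recent[0] >= self.burst_sec:
            recent.popleft()  # forget requests older than the burst window
        if len(recent) >= self.actions_per_burst_max:
            return False
        recent.append(now)
        self._rt_threads[uid] = self._rt_threads.get(uid, 0) + 1
        return True
```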

All of this is also accompanied by what is called a “canary watchdog”. This is basically a second thread that is assigned the SCHED_OTHER policy class and is monitored to see whether the system is getting starved. If the system is overloaded and “the canary can’t sing/cheep”, the watchdog demotes real-time tasks by switching them back to SCHED_OTHER. That’s achievable even in a tight scenario because the rtkit service assigns itself a higher real-time priority, so it takes precedence. Think of the canary watchdog as similar to the OOM killer, except that it demotes tasks instead of killing them.
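The canary logic can be modelled in a few lines. This is a hypothetical sketch of the decision only, not rtkit’s implementation. In the real daemon the canary and the watchdog are separate threads with different scheduling policies, and the timeout here is an arbitrary parameter. Demotion is injected as a callback so the logic can be exercised without privileges. The default callback uses os.sched_setscheduler to switch a thread back to SCHED_OTHER.

```python
from __future__ import annotations

import os
import time
from typing import Callable


def demote_to_other(tid: int) -> None:
    """Switch a thread back to SCHED_OTHER, i.e. strip its real-time policy."""
    os.sched_setscheduler(tid, os.SCHED_OTHER, os.sched_param(0))


class CanaryWatchdog:
    """Hypothetical model of a canary watchdog's decision logic.

    A low-priority canary calls cheep() periodically; a high-priority watchdog
    calls check(). If the canary stayed silent for longer than timeout_sec,
    every supervised thread is demoted.
    """

    def __init__(self, timeout_sec: float,
                 demote: Callable[[int], None] = demote_to_other) -> None:
        self.timeout_sec = timeout_sec
        self.demote = demote
        self.last_cheep = time.monotonic()
        self.supervised: set[int] = set()

    def cheep(self, now: float | None = None) -> None:
        self.last_cheep = time.monotonic() if now is None else now

    def check(self, now: float | None = None) -> bool:
        """Return True if the canary was starved and threads were demoted."""
        now = time.monotonic() if now is None else now
        if now - self.last_cheep <= self.timeout_sec:
            return False
        for tid in sorted(self.supervised):
            try:
                self.demote(tid)
            except ProcessLookupError:
                pass  # the thread is already gone
        self.supervised.clear()
        return True
```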

As with many D-Bus services, a big advantage is that permission

checks are centralized through PolicyKit/polkit. The file

/usr/share/polkit-1/actions/org.freedesktop.RealtimeKit1.policy is

indicative of that.

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE policyconfig PUBLIC

"-//freedesktop//DTD PolicyKit Policy Configuration 1.0//EN"

"http://www.freedesktop.org/standards/PolicyKit/1/policyconfig.dtd">

<policyconfig>

<action id="org.freedesktop.RealtimeKit1.acquire-high-priority">

<description>Grant high priority scheduling to a user process</description>

<message>Authentication is required to grant an application high priority scheduling</message>

<defaults>

<allow_any>no</allow_any>

<allow_inactive>yes</allow_inactive>

<allow_active>yes</allow_active>

</defaults>

</action>

<action id="org.freedesktop.RealtimeKit1.acquire-real-time">

<description>Grant realtime scheduling to a user process</description>

<message>Authentication is required to grant an application realtime scheduling</message>

<defaults>

<allow_any>no</allow_any>

<allow_inactive>yes</allow_inactive>

<allow_active>yes</allow_active>

</defaults>

</action>

</policyconfig>

As you can see, polkit knows about two actions, one for high priority and the other for real-time:

- org.freedesktop.RealtimeKit1.acquire-high-priority

- org.freedesktop.RealtimeKit1.acquire-real-time

That means we can create specific rules to allow what we want, for example in a file under /etc/polkit-1/rules.d/:

polkit.addRule(function(action, subject) {
    polkit.log("action=" + action);
    polkit.log("subject=" + subject);
    if (subject.user == "vnm" &&
        action.id.indexOf("org.freedesktop.RealtimeKit1.") == 0) {
        return polkit.Result.YES;
    }
});

That’s fine, it’s all standard polkit and D-Bus, so let’s see what the actual interface looks like and introspect it.

The /usr/share/dbus-1/interfaces/org.freedesktop.RealtimeKit1.xml description:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE node PUBLIC

"-//freedesktop//DTD D-BUS Object Introspection 1.0//EN"

"http://www.freedesktop.org/standards/dbus/1.0/introspect.dtd">

<node>

<interface name="org.freedesktop.RealtimeKit1">

<method name="MakeThreadRealtime">

<arg name="thread" type="t" direction="in"/>

<arg name="priority" type="u" direction="in"/>

</method>

<method name="MakeThreadRealtimeWithPID">

<arg name="process" type="t" direction="in"/>

<arg name="thread" type="t" direction="in"/>

<arg name="priority" type="u" direction="in"/>

</method>

<method name="MakeThreadHighPriority">

<arg name="thread" type="t" direction="in"/>

<arg name="priority" type="i" direction="in"/>

</method>

<method name="MakeThreadHighPriorityWithPID">

<arg name="process" type="t" direction="in"/>

<arg name="thread" type="t" direction="in"/>

<arg name="priority" type="i" direction="in"/>

</method>

<method name="ResetKnown"/>

<method name="ResetAll"/>

<method name="Exit"/>

<property name="RTTimeUSecMax" type="x" access="read"/>

<property name="MaxRealtimePriority" type="i" access="read"/>

<property name="MinNiceLevel" type="i" access="read"/>

</interface>

<interface name="org.freedesktop.DBus.Properties">

<method name="Get">

<arg name="interface" direction="in" type="s"/>

<arg name="property" direction="in" type="s"/>

<arg name="value" direction="out" type="v"/>

</method>

</interface>

<interface name="org.freedesktop.DBus.Introspectable">

<method name="Introspect">

<arg name="data" type="s" direction="out"/>

</method>

</interface>

</node>

Or let’s print it out through live introspection:

> gdbus introspect \

--system \

--dest org.freedesktop.RealtimeKit1 \

--object-path /org/freedesktop/RealtimeKit1

node /org/freedesktop/RealtimeKit1 {

interface org.freedesktop.RealtimeKit1 {

methods:

MakeThreadRealtime(in t thread,

in u priority);

MakeThreadRealtimeWithPID(in t process,

in t thread,

in u priority);

MakeThreadHighPriority(in t thread,

in i priority);

MakeThreadHighPriorityWithPID(in t process,

in t thread,

in i priority);

ResetKnown();

ResetAll();

Exit();

signals:

properties:

readonly x RTTimeUSecMax = 200000;

readonly i MaxRealtimePriority = 20;

readonly i MinNiceLevel = -15;

};

interface org.freedesktop.DBus.Properties {

methods:

Get(in s interface,

in s property,

out v value);

signals:

properties:

};

interface org.freedesktop.DBus.Introspectable {

methods:

Introspect(out s data);

signals:

properties:

};

};

As you can deduce from these, rtkit has its own set of configurable limits, on top of the ones we mentioned. It checks three things:

- the RLIMIT_RTTIME of the process doesn’t exceed its RTTimeUSecMax;
- the requested real-time priority doesn’t exceed MaxRealtimePriority;
- the requested nice level isn’t below MinNiceLevel.

All good!
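These properties can also be read from a script through the standard org.freedesktop.DBus.Properties.Get method. This sketch shells out to gdbus. The parsing assumes gdbus prints the reply as a GVariant tuple such as (<int64 200000>,) or (<20>,). That is my expectation, not a documented contract.

```python
import re
import subprocess

RTKIT_BUS = ["--system", "--dest", "org.freedesktop.RealtimeKit1",
             "--object-path", "/org/freedesktop/RealtimeKit1"]


def parse_gvariant_int(text: str) -> int:
    """Pull the integer out of gdbus output such as '(<int64 200000>,)' or '(<20>,)'."""
    match = re.search(r"<(?:u?int(?:16|32|64)\s+)?(-?\d+)>", text)
    if match is None:
        raise ValueError(f"unexpected gdbus output: {text!r}")
    return int(match.group(1))


def rtkit_property(name: str) -> int:
    """Read one of RTTimeUSecMax, MaxRealtimePriority or MinNiceLevel."""
    result = subprocess.run(
        ["gdbus", "call", *RTKIT_BUS,
         "--method", "org.freedesktop.DBus.Properties.Get",
         "org.freedesktop.RealtimeKit1", name],
        check=True, capture_output=True, text=True,
    )
    return parse_gvariant_int(result.stdout)
```

On this machine, rtkit_property("MaxRealtimePriority") should then return 20.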

We can now move our focus to the functionality exposed by the service, mainly these four methods:

MakeThreadRealtime(in t thread, in u priority);

MakeThreadRealtimeWithPID(in t process, in t thread, in u priority);

MakeThreadHighPriority(in t thread, in i priority);

MakeThreadHighPriorityWithPID(in t process, in t thread, in i priority);

The signatures are straightforward: the WithPID variants take both the PID and the LWP (thread ID), while the others only take the LWP. For the HighPriority methods, the priority argument is a nice level, hence the signed type.

Before we test these on the CLI, we have to give a shout-out to Flatpak’s desktop portal project (xdg-desktop-portal). It offers a proxy for rtkit’s D-Bus interface through org.freedesktop.portal.Realtime, which can confuse some people.

Have a look at:

> busctl --user list | grep portal

> gdbus introspect \

--session \

--dest org.freedesktop.portal.Desktop \

--object-path /org/freedesktop/portal/desktop

Let’s give all this a spin and see how it goes.

We can start a random task, or assume it’s already running:

> sleep 10000

We get both its PID and its LWP:

> ps -efL

vnm 1210887 1210860 1210887 0 1 10:44 pts/16 00:00:00 sleep 10000

We check our limits and set the required ones on the process if needed:

> ulimit -a # this is zsh

-t: cpu time (seconds) unlimited

-f: file size (blocks) unlimited

-d: data seg size (kbytes) unlimited

-s: stack size (kbytes) 8192

-c: core file size (blocks) unlimited

-m: resident set size (kbytes) unlimited

-u: processes 21259

-n: file descriptors 1024

-l: locked-in-memory size (kbytes) unlimited

-v: address space (kbytes) unlimited

-x: file locks unlimited

-i: pending signals 21259

-q: bytes in POSIX msg queues 819200

-e: max nice 31

-r: max rt priority 150000

-N 15: rt cpu time (microseconds) unlimited

# unlimited rt cpu time, but we need it to be below 200000 (RTTimeUSecMax)

> prlimit --rttime=1500 --pid 1210887

# Verify it's set

> prlimit --rttime --pid 1210887

RESOURCE DESCRIPTION SOFT HARD UNITS

RTTIME timeout for real-time tasks 1500 1500 microsecs

The right limits are set, so we can now play around with it safely:

> gdbus call \

--system \

--dest org.freedesktop.RealtimeKit1 \

--object-path '/org/freedesktop/RealtimeKit1' \

--method org.freedesktop.RealtimeKit1.MakeThreadRealtimeWithPID \

1210887 1210887 10

()

> chrt -a -p 1210887

pid 1210887's current scheduling policy: SCHED_RR|SCHED_RESET_ON_FORK

pid 1210887's current scheduling priority: 10

> gdbus call \

--system \

--dest org.freedesktop.RealtimeKit1 \

--object-path '/org/freedesktop/RealtimeKit1' \

--method org.freedesktop.RealtimeKit1.MakeThreadHighPriorityWithPID \

1210887 1210887 10

()

> chrt -a -p 1210887

pid 1210887's current scheduling policy: SCHED_OTHER|SCHED_RESET_ON_FORK

pid 1210887's current scheduling priority: 0
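The same calls can be scripted. This sketch, with hypothetical helper names, builds the gdbus command line for the WithPID methods and uses it to promote the calling thread. Python exposes that thread’s LWP as threading.get_native_id(). Since rtkit refuses processes without a bounded limit, the sketch first caps RLIMIT_RTTIME. The cap borrows, as an arbitrary value, the 150000 µs used in the PipeWire configuration shown later.

```python
import os
import resource
import subprocess
import threading

RTKIT_BUS = ["--system", "--dest", "org.freedesktop.RealtimeKit1",
             "--object-path", "/org/freedesktop/RealtimeKit1"]


def rtkit_call_argv(method: str, pid: int, tid: int, priority: int) -> list[str]:
    """Build a `gdbus call` for MakeThreadRealtimeWithPID or MakeThreadHighPriorityWithPID."""
    return ["gdbus", "call", *RTKIT_BUS,
            "--method", f"org.freedesktop.RealtimeKit1.{method}",
            str(pid), str(tid), str(priority)]


def make_current_thread_realtime(priority: int, rttime_usec: int = 150_000) -> None:
    """Ask rtkit to make the calling thread SCHED_RR at `priority`."""
    # rtkit wants a bounded RLIMIT_RTTIME first; only ever lower the hard limit here.
    _, hard = resource.getrlimit(resource.RLIMIT_RTTIME)
    if hard == resource.RLIM_INFINITY or hard > rttime_usec:
        resource.setrlimit(resource.RLIMIT_RTTIME, (rttime_usec, rttime_usec))
    argv = rtkit_call_argv("MakeThreadRealtimeWithPID",
                           os.getpid(), threading.get_native_id(), priority)
    subprocess.run(argv, check=True)
```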

# priority above the MaxRealtimePriority limit of 20

> gdbus call \

--system \

--dest org.freedesktop.RealtimeKit1 \

--object-path '/org/freedesktop/RealtimeKit1' \

--method org.freedesktop.RealtimeKit1.MakeThreadRealtimeWithPID \

1210887 1210887 25

Error: GDBus.Error:org.freedesktop.DBus.Error.AccessDenied: Operation not permitted

That’s about it: pretty simple and useful, right? So why haven’t we heard more about it, and where is it actually used? The answer: PipeWire. At least, PipeWire is the only prominent piece of software I’ve seen that relies on rtkit.

You can snoop on the interface to confirm:

> busctl monitor org.freedesktop.RealtimeKit1

So why didn’t PipeWire choose to rely on pam_limits, or on systemd unit directives such as CPUSchedulingPolicy, CPUSchedulingPriority, and CPUSchedulingResetOnFork? In theory it could, and some distros do just that. Ubuntu, for example, these days installs the file 25-pw-rlimits.conf:

# This file was installed by PipeWire project for its libpipewire-module-rt.so

# It is up to the distribution/user to create the @pipewire group and to add the

# relevant users to the group.

#

# PipeWire will fall back to the RTKit DBus service when the user is not able to

# acquire RT priorities with rlimits.

#

# If the group is not automatically created, the match rule will never be true

# and this file will have no effect.

#

@pipewire - rtprio 95

@pipewire - nice -19

@pipewire - memlock 4194304

As the comment makes clear, it’s up to distros to set this up if they don’t want to rely on rtkit.

PipeWire’s module-rt, libpipewire-module-rt(7), offers a configuration that can call rtkit or the equivalent desktop portal:

{ name = libpipewire-module-rt

args = {

nice.level = -18

rt.prio = 21

rt.time.soft = 150000

rt.time.hard = 150000

rlimits.enabled = true

rtportal.enabled = false

rtkit.enabled = true

uclamp.min = 0

uclamp.max = 1024

}

flags = [ ifexists nofail ]

}

The logs make it clear what happens:

[I][88908.575246] pw.conf | [ conf.c: 1143 pw_conf_section_for_each()] handle config '/home/vnm/.config/pipewire/pipewire.conf' section 'context.modules'

[I][88908.575299] pw.module | [ impl-module.c: 156 pw_context_load_module()] 0x5a6555c6b280: name:libpipewire-module-rt args:{

nice.level = -18

rt.prio = 21

rt.time.soft = 150000

rt.time.hard = 150000

rlimits.enabled = true

rtportal.enabled = false

rtkit.enabled = true

uclamp.min = 0

uclamp.max = 1024

}

[I][88908.575715] mod.rt | [ module-rt.c: 936 rtkit_get_bus()] Portal Realtime disabled

[I][88908.579359] mod.rt | [ module-rt.c: 600 set_nice()] clamped nice level -18 to -15

[I][88908.579608] mod.rt | [ module-rt.c: 621 set_nice()] main thread nice level set to -15

[I][88908.581415] mod.rt | [ module-rt.c: 808 do_make_realtime()] clamping requested priority 21 for thread 1164268 between 1 and 20

[I][88908.581562] mod.rt | [ module-rt.c: 818 do_make_realtime()] acquired realtime priority 20 for thread 1164268 using RTKit
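The two clamping steps in these logs can be reproduced with a pair of one-liners. This is a reconstruction from the log messages, not PipeWire’s code:

- the nice level is raised to rtkit’s MinNiceLevel (-18 becomes -15);
- the real-time priority is kept between 1 and MaxRealtimePriority (21 becomes 20).

```python
def clamp_nice(requested: int, min_nice_level: int) -> int:
    """Never ask for a nice level below rtkit's MinNiceLevel."""
    return max(requested, min_nice_level)


def clamp_rt_priority(requested: int, max_rt_priority: int, min_rt_priority: int = 1) -> int:
    """Keep a SCHED_RR priority within [min_rt_priority, MaxRealtimePriority]."""
    return min(max(requested, min_rt_priority), max_rt_priority)
```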

And here is the tracking on rtkit’s side, from its logs:

rtkit-daemon[1162195]: Successfully made thread 1163392 of process 1163389 owned by '1000' RT at priority 20.

rtkit-daemon[1162195]: Supervising 2 threads of 1 processes of 1 users.

That’s it, now you get what rtkit does, and understand most of what you need to know about real-time scheduling to be dangerous with it.

Simulating Real-Time

A big question that arises after all this is how best to test real-time tasks and see what the scheduling policies do.

The best way is probably through cpuset(7) and helper tools such as cset(1). However, cpuset support needs to be enabled in the kernel and appear in /proc/filesystems, so it might not be available by default on all machines.

It lets you create a confined set of processors. Combined with stress-testing tools such as stress-ng, it shows at a glance how a real-time policy class affects CPU usage.
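Without cpuset support, a rougher alternative is plain CPU affinity, the mechanism behind taskset(1), combined with sampling CPU time much like top does. This Python sketch swaps in os.sched_setaffinity for cpuset(7). It derives a %CPU figure from the utime and stime fields (14 and 15) of /proc/PID/stat. The helper names are hypothetical.

```python
from __future__ import annotations

import os
import time


def ticks_from_stat(stat_line: str) -> int:
    """utime + stime, in clock ticks, from one /proc/PID/stat line."""
    # comm (field 2) may contain spaces or ')', so split after the last ')'.
    fields = stat_line.rsplit(")", 1)[1].split()
    # fields[0] is field 3 (state), so fields 14 and 15 sit at indexes 11 and 12.
    return int(fields[11]) + int(fields[12])


def cpu_ticks(pid: int) -> int:
    with open(f"/proc/{pid}/stat") as stat:
        return ticks_from_stat(stat.read())


def cpu_percent(pids: list[int], interval: float = 1.0) -> dict[int, float]:
    """Average %CPU of each pid over `interval` seconds."""
    before = {pid: cpu_ticks(pid) for pid in pids}
    time.sleep(interval)
    after = {pid: cpu_ticks(pid) for pid in pids}
    hz = os.sysconf("SC_CLK_TCK")
    return {pid: 100.0 * (after[pid] - before[pid]) / (hz * interval) for pid in pids}


def pin_to_cpu(pid: int, cpu: int) -> None:
    """Roughly `taskset -p -c CPU PID` for a single thread."""
    os.sched_setaffinity(pid, {cpu})
```

Pinning two stress-ng workers to the same CPU with pin_to_cpu, setting their policies with chrt, and then calling cpu_percent on their PIDs should give figures comparable to the top listings.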

For example, assigning SCHED_RR to two tasks with the same priority will show something like this:

> top -p 1234,1235

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1234 vnm -3 0 45784 5880 3500 R 48,7 0,0 0:12.24 stress-ng

1235 vnm -3 0 45784 5880 3500 R 46,7 0,0 0:12.25 stress-ng

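Or when we start a SCHED_FIFO task alongside another, normal task, the real-time one is throttled by default to a runtime of 0.95s per 1s period (kernel.sched_rt_runtime_us and kernel.sched_rt_period_us):

> top -p 1234,1235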

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1234 vnm rt 0 45780 5896 3512 R 95,0 0,0 2:11.35 stress-ng

1235 vnm 20 0 45780 5988 3616 R 5,0 0,0 2:09.65 stress-ng
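The 95/5 split above matches the default real-time bandwidth. Real-time tasks may use sched_rt_runtime_us out of every sched_rt_period_us, and a runtime of -1 disables the throttling. A small sketch computing that share, with hypothetical helper names:

```python
def rt_bandwidth_share(runtime_us: int, period_us: int) -> float:
    """Fraction of each period that real-time tasks may use (-1 means no throttling)."""
    if runtime_us < 0:
        return 1.0
    return runtime_us / period_us


def read_rt_bandwidth(base: str = "/proc/sys/kernel") -> tuple[int, int]:
    """Read kernel.sched_rt_runtime_us and kernel.sched_rt_period_us."""
    with open(f"{base}/sched_rt_runtime_us") as runtime:
        runtime_us = int(runtime.read())
    with open(f"{base}/sched_rt_period_us") as period:
        period_us = int(period.read())
    return runtime_us, period_us
```

On a default setup, rt_bandwidth_share(*read_rt_bandwidth()) should give 0.95, in line with the 95,0 versus 5,0 split.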

Interesting stuff, right?

Exploring Further

Obviously, we’ve only scratched the surface of the CPU scheduler, and there is also another important scheduler on Linux: the IO scheduler. That would need its own article.

We’ve also skipped over many of the kernel’s scheduler build configurations, such as CONFIG_SCHED_SMT and CONFIG_CGROUP_SCHED. The same goes for certain system tunables like kernel.sched_base_slice, kernel.sched_features, and kernel.sched_tunable_scaling.

A file we didn’t cover is /sys/kernel/debug/sched/debug, which has a ton of tiny details about the scheduling behavior.

Finally, we also didn’t touch on custom SCHED_EXT schedulers, which can be implemented through eBPF. You can read about them on sched-ext.com.

Conclusion

CPU scheduling and RealtimeKit are fascinating tech, and I hope you’ve learned as much from reading this article as I did writing it. We’ve covered a lot: we reviewed multitasking theory, got an overview of the unified scheduler and the different scheduling policy classes, and finally dabbled with RealtimeKit and D-Bus.

This should come in handy the next time you think of tuning your system

for responsiveness.

Have a wonderful day!

With love, Patrick Louis written in Lebanon

Resources

- https://man7.org/linux/man-pages/man7/sched.7.html

- https://www.uninformativ.de/blog/postings/2024-08-03/0/POSTING-en.html

- https://man7.org/linux/man-pages/man2/setpriority.2.html

- https://www.kernel.org/doc/Documentation/scheduler/sched-deadline.rst

- https://www.kernel.org/doc/Documentation/scheduler/sched-rt-group.rst

- https://medium.rest/query-by-url?urlPost=https%3A%2F%2Fmedium.com%2F%2540ahmedmansouri%2Funderstanding-process-priority-and-nice-in-linux-67d259f3e2f8

- https://www.thegeekstuff.com/2013/11/linux-process-and-threads/

- https://unix.stackexchange.com/questions/472300/is-a-light-weight-process-attached-to-a-kernel-thread-in-linux

- https://www.baeldung.com/linux/process-vs-thread

- https://www.baeldung.com/linux/real-time-process-scheduling

- https://www.baeldung.com/linux/managing-processors-availability

- https://man7.org/linux/man-pages/man7/cpuset.7.html

- https://manpages.org/cset-set

- https://www.kernel.org/doc/Documentation/scheduler/sched-rt-group.txt

- https://stackoverflow.com/questions/344203/maximum-number-of-threads-per-process-in-linux#344292

- https://www.man7.org/linux/man-pages/man7/pthreads.7.html

- https://www.zdnet.com/article/20-years-later-real-time-linux-makes-it-to-the-kernel-really/

- https://gitlab.com/procps-ng/procps/-/issues/111

- https://superuser.com/questions/286752/unix-ps-l-priority#286761

- https://wiki.archlinux.org/title/Improving_performance

- https://lwn.net/Articles/925371/

- https://kernelnewbies.org/Linux_6.6#New_task_scheduler:_EEVDF

- https://sched-ext.com/

- https://github.com/torvalds/linux/commit/f0e1a0643a59bf1f922fa209cec86a170b784f3f

- https://www.man7.org/linux/man-pages/man2/getrlimit.2.html

- https://venam.net/blog/unix/2023/02/28/access_control.html#ulimit-rlimit-and-sysctl-tunables

- https://flatpak.github.io/xdg-desktop-portal/

- https://flatpak.github.io/xdg-desktop-portal/docs/doc-org.freedesktop.portal.Realtime.html

- https://stackoverflow.com/questions/1009577/selecting-a-linux-i-o-scheduler#1010562

- https://wiki.ubuntu.com/Kernel/Reference/IOSchedulers

If you want to have a more in-depth discussion, I'm always available by email or IRC.

We can discuss and argue about what you like and dislike, about new ideas to consider, opinions, etc..

If you don't feel like "having a discussion" or are intimidated by emails

then you can simply say something small in the comment sections below

and/or share it with your friends.